Module 1: Introduction to AI, Machine Learning, and Neural Networks

Artificial Intelligence (AI) represents the frontier of technological advancement, continually reshaping how we interact with and understand the world around us. This module provides insights into the core concepts and technologies that underpin AI.

- Goal: Provide a foundational understanding of artificial intelligence, machine learning, and neural networks, and their relevance in today’s world.

- Objective: By the end of this module, learners will be able to define AI, machine learning, and neural networks, explain their basic principles, and recognize their applications in various domains.

We begin by defining AI and examining its key characteristics that allow systems to learn, reason, process data, and solve complex problems. The module then explores machine learning – a subset of AI focused on data-driven algorithms that can improve performance on specific tasks through experience.

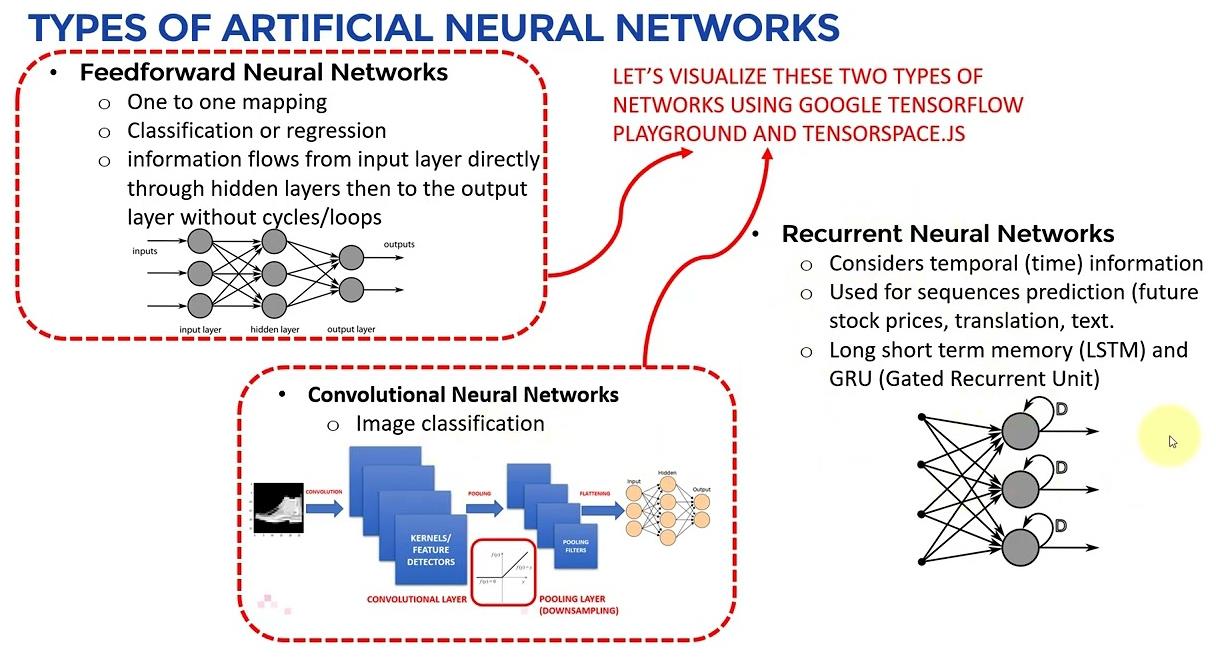

Neural networks, inspired by the human brain’s biological neural connections, are examined in detail. You’ll learn how these networks process information by adjusting connection strengths between nodes, as well as the different architectures like feedforward, recurrent, and convolutional networks designed for various applications.

The module highlights deep learning, a powerful technique utilizing multi-layered neural networks to automatically learn hierarchical data representations. You’ll see how deep learning provides significant advantages over traditional machine learning approaches through examples of breakthrough achievements.

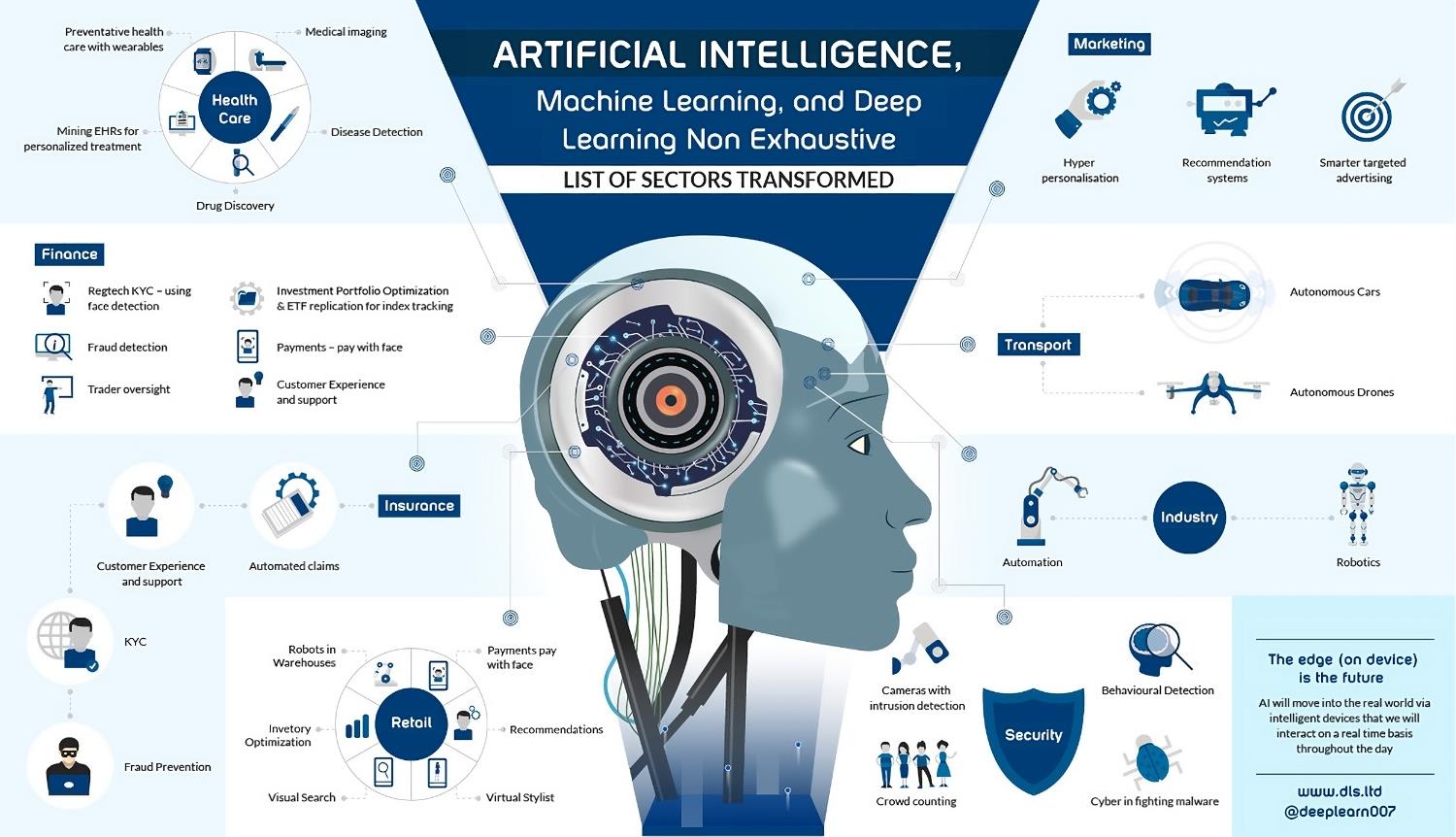

Finally, we’ll cover fascinating real-world AI applications across fields like computer vision, natural language processing, robotics, healthcare, and finance. The economic impact, ethical considerations, and challenges around developing these transformative technologies are also discussed.

- 1.1 What is Artificial Intelligence (AI)?

- 1.2 Introduction to Machine Learning

- 1.3 Neural Networks Fundamentals

- 1.4 Deep Learning and its Impact

- 1.5 Applications of AI, Machine Learning, and Neural Networks

- 1.6 Importance of AI in Today’s World

- 1.7 Summary

1.1 What is Artificial Intelligence (AI)?

Artificial Intelligence (AI) is a rapidly evolving field that focuses on creating intelligent machines capable of performing tasks that typically require human-like intelligence. At its core, AI aims to develop systems that can learn, reason, and adapt to solve complex problems and make decisions based on vast amounts of data.

1.1.1 Definition and key characteristics

AI can be defined as the development of computer systems that can perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and language translation. The key characteristics of AI include:

- Ability to learn and improve from experience

- Capacity to process and analyze large amounts of data

- Capability to recognize patterns and make decisions based on that information

- Aptitude for understanding and generating human-like language

- Potential for adapting to new situations and solving complex problems

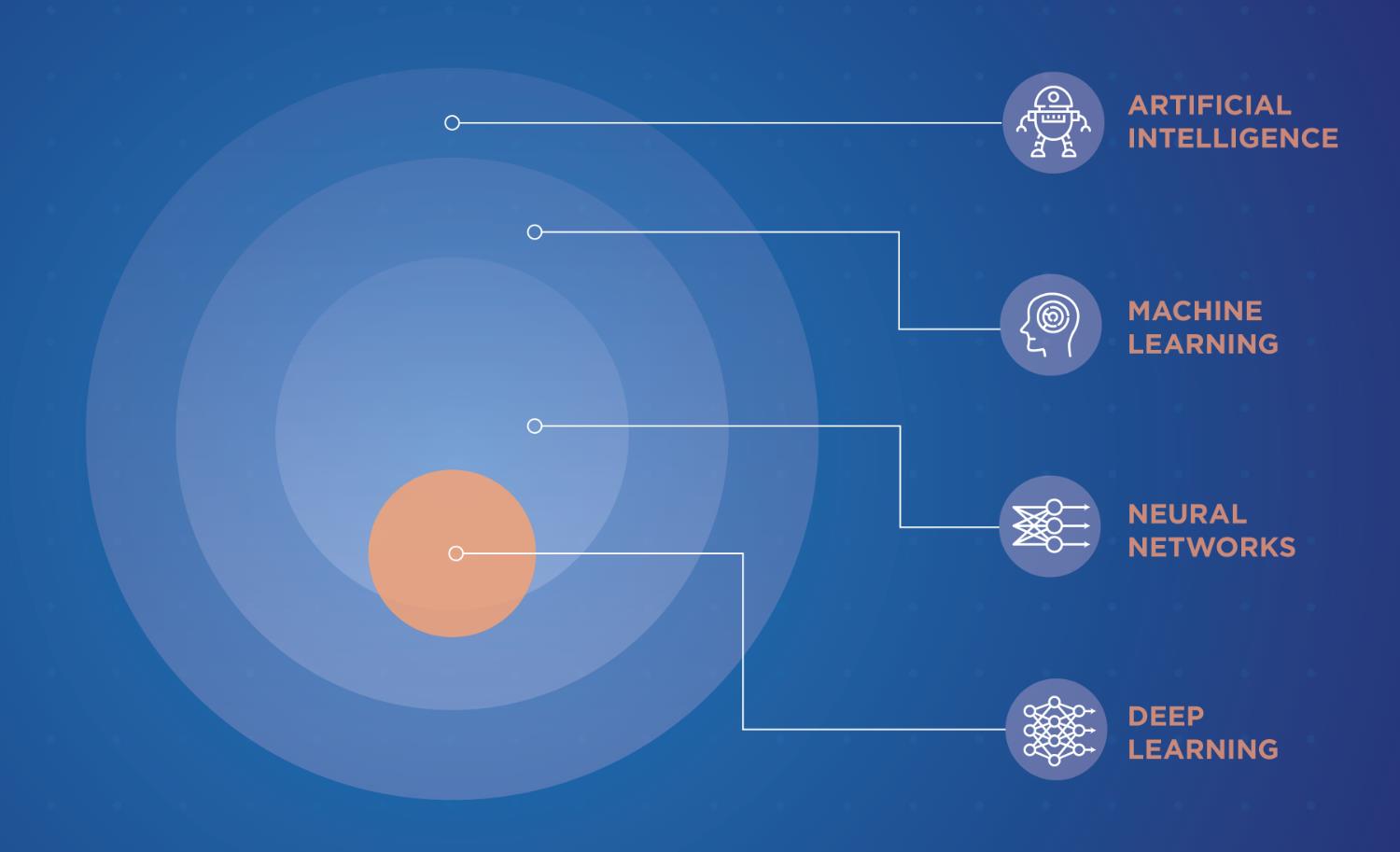

1.1.2 Differences between AI, machine learning, and deep learning

While AI is a broad field encompassing various approaches to creating intelligent machines, machine learning and deep learning are nested subsets of it: machine learning is a subset of AI, and deep learning is in turn a subset of machine learning.

- Machine Learning: A subset of AI that focuses on the development of algorithms that allow computers to learn from data without being explicitly programmed. Machine learning algorithms can automatically improve their performance on a specific task as they are exposed to more data.

- Deep Learning: A subset of machine learning that uses artificial neural networks with multiple layers to learn and make decisions. Deep learning models can automatically learn hierarchical representations of data, enabling them to tackle complex tasks such as image and speech recognition.

Additional reading:

- “AI vs. Machine Learning vs. Deep Learning: What’s the Difference?” by IBM (https://www.ibm.com/cloud/blog/ai-vs-machine-learning-vs-deep-learning-vs-neural-networks)

- “What is the Difference Between Artificial Intelligence, Machine Learning, and Deep Learning?” by NVIDIA (https://blogs.nvidia.com/blog/2016/07/29/whats-difference-artificial-intelligence-machine-learning-deep-learning-ai/)

1.1.3 Types of AI: capabilities and functionalities

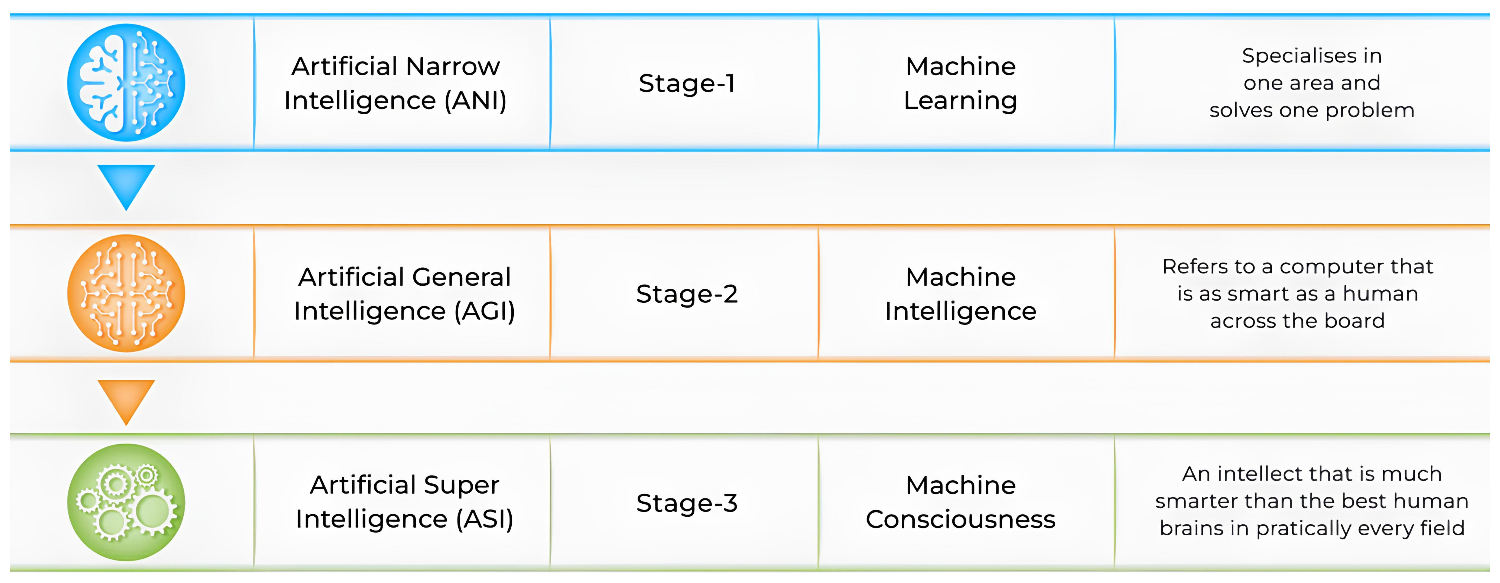

Capabilities:

- Narrow AI (weak AI): Designed to perform a specific task, such as playing chess or recognizing speech. Most current AI applications fall into this category.

- General AI (strong AI): Hypothetical AI that can perform any intellectual task that a human can. This level of AI has not been achieved yet.

- Superintelligent AI: A theoretical AI that surpasses human intelligence in all domains. This is a long-term goal of some AI researchers.

Functionalities:

- Reactive Machines: These are the most basic AI systems. They respond only to the current situation or input they receive, and they cannot learn from past experiences or remember anything. A well-known example is IBM’s Deep Blue, the chess-playing computer that defeated world champion Garry Kasparov in 1997.

- Memory-Based Systems: Unlike reactive machines, these AI systems can use information from the past to make decisions. They have a limited form of memory that allows them to learn from previous data or experiences. Many virtual assistants, chatbots, and self-driving cars work using this type of AI.

- Human-Like Understanding: Researchers are working on developing advanced AI that can understand human emotions, beliefs, and intentions, just like we do. This type of AI would not only process information but also comprehend the nuances of human behavior and psychology. It’s an exciting area of research, but we haven’t achieved this level of AI yet.

- Self-Aware AI: This is a hypothetical and futuristic type of AI that would have its own consciousness, emotions, and self-awareness, similar to humans. Self-aware AI systems would understand their own existence, feelings, and beliefs, and might even develop desires and motivations of their own. However, this is still a theoretical concept and has not been realized in practice.

- Super-Intelligent AI: This is a speculative form of AI that would be smarter than humans in almost every way, including scientific creativity, general wisdom, and social skills. While this is an intriguing idea, it’s still a subject of ongoing research and discussion among scientists.

1.2 Introduction to Machine Learning

1.2.1 Definition and basic concepts

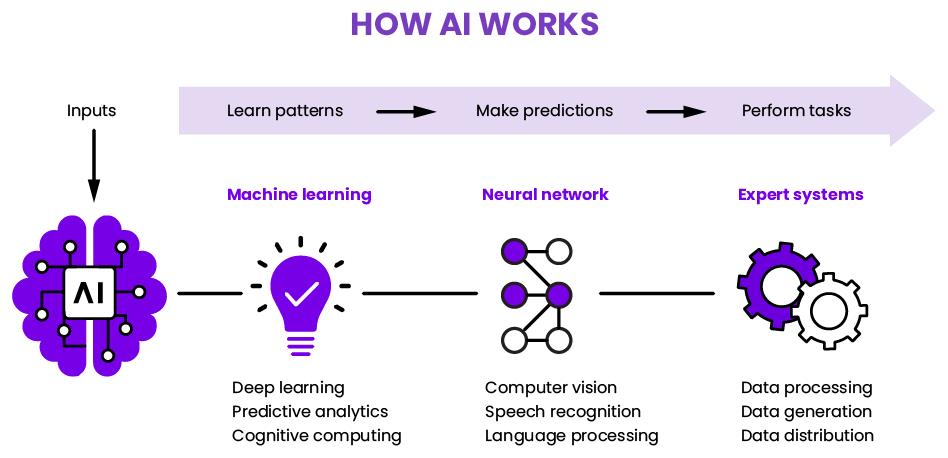

Machine learning is a method of creating AI systems that can learn and improve their performance on a specific task without being explicitly programmed. The basic concept behind machine learning is that algorithms can learn from data by identifying patterns and making decisions based on that information.

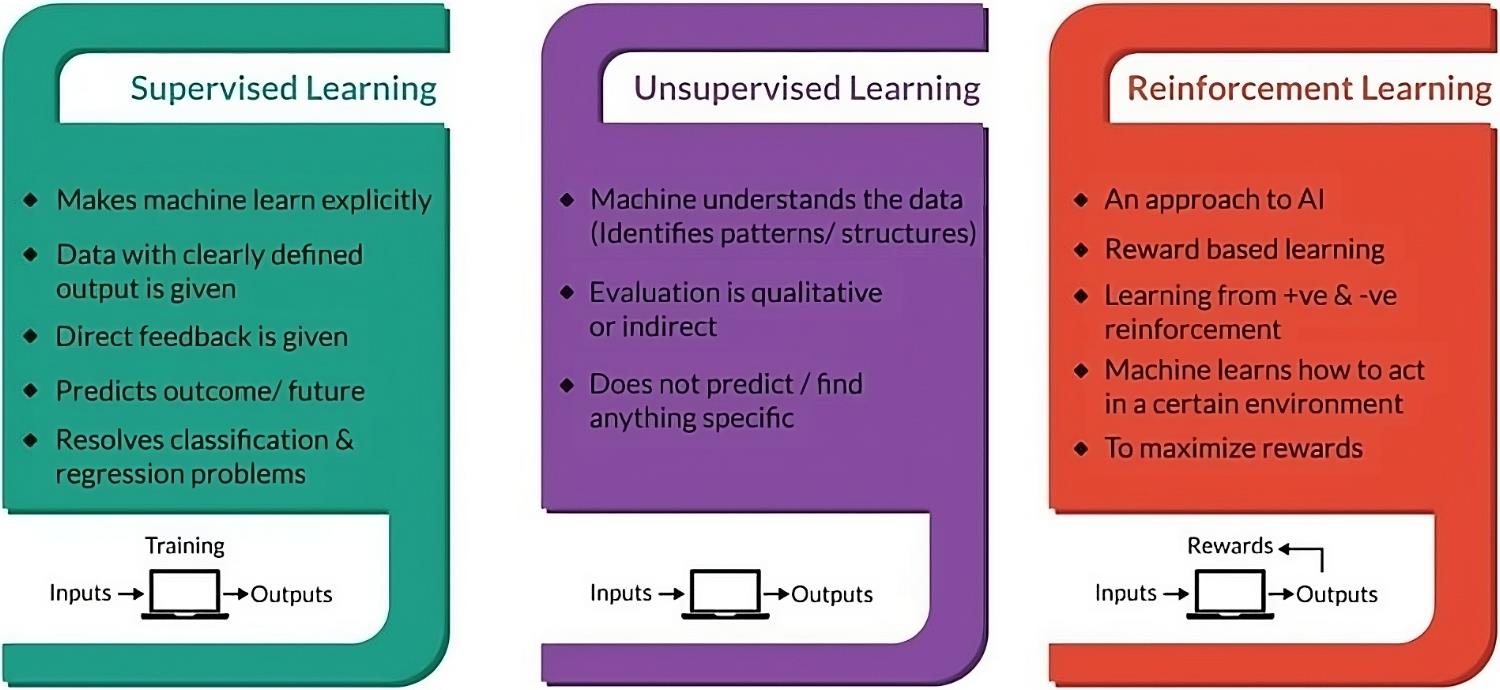

1.2.2 Supervised, unsupervised, and reinforcement learning

There are three main types of machine learning:

- Supervised learning: The algorithm learns from labeled data, where both input and output data are provided. The goal is to learn a function that maps input data to the correct output labels.

- Unsupervised learning: The algorithm learns from unlabeled data, where only input data is provided, with the goal of discovering hidden patterns or structures in the data.

- Reinforcement learning: The algorithm learns through interaction with an environment, receiving feedback in the form of rewards or punishments. The goal is to learn a policy that maximizes the cumulative reward.
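A few lines of standard-library Python can contrast the three paradigms. This is a minimal sketch built on our own assumptions. The function names (`fit_line`, `kmeans_1d`, `greedy_bandit`) and the toy data are hypothetical, and each function stands in for a whole family of methods:

- Supervised learning appears as least-squares line fitting on labeled pairs.
- Unsupervised learning appears as one-dimensional k-means clustering on unlabeled values.
- Reinforcement learning appears as a greedy agent with optimistic initial estimates on a simple multi-armed bandit.

```python
import statistics

# Supervised learning: learn a mapping from labeled (input, output) pairs.
def fit_line(xs, ys):
    """Least-squares fit of y = w * x + b (closed-form solution)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var = sum((x - mx) ** 2 for x in xs)
    w = cov / var
    return w, my - w * mx

# Unsupervised learning: find structure (here, 2 clusters) in unlabeled values.
def kmeans_1d(values, iters=10):
    """One-dimensional k-means with k = 2 and a deterministic initialisation."""
    centers = [min(values), max(values)]
    for _ in range(iters):
        groups = [[], []]
        for v in values:
            # Assign each value to its nearest center.
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [statistics.fmean(g) if g else c for g, c in zip(groups, centers)]
    return centers

# Reinforcement learning: act, receive rewards, prefer actions that pay off.
def greedy_bandit(pull, n_arms, steps=20):
    """Greedy agent with optimistic initial estimates on a multi-armed bandit.

    `pull(arm)` is the environment: it returns the reward for choosing `arm`.
    """
    estimates = [1.0] * n_arms  # optimistic start makes the agent try every arm
    counts = [0] * n_arms
    total = 0.0
    for _ in range(steps):
        arm = max(range(n_arms), key=lambda a: estimates[a])
        reward = pull(arm)
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # running average
        total += reward
    best = max(range(n_arms), key=lambda a: estimates[a])
    return best, total

if __name__ == "__main__":
    print(fit_line([1, 2, 3, 4], [3, 5, 7, 9]))          # (2.0, 1.0)
    print(kmeans_1d([1.0, 1.5, 2.0, 10.0, 11.0, 12.0]))  # [1.5, 11.0]
    payouts = [0.2, 0.5, 0.9]  # hypothetical, deterministic rewards
    print(greedy_bandit(lambda arm: payouts[arm], n_arms=3))
```

Notice what each function receives. `fit_line` is given the correct answers. `kmeans_1d` gets only raw values. `greedy_bandit` gets no dataset at all, only the reward for each action it chooses.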

Additional reading:

- “Machine Learning Basics: An Illustrated Guide for Non-Technical Readers” by Vishal Maini (https://www.freecodecamp.org/news/machine-learning-basics-an-illustrated-guide-for-non-technical-readers-1e2f5b9a50e5/)

- “A Visual Introduction to Machine Learning” by R2D3 (http://www.r2d3.us/visual-intro-to-machine-learning-part-1/)

1.2.3 Real-world applications of machine learning

Machine learning has numerous applications in various domains, such as:

- Image and speech recognition (e.g., facial recognition, voice assistants)

- Natural language processing (e.g., sentiment analysis, language translation)

- Recommendation systems (e.g., Netflix, Amazon)

- Fraud detection (e.g., credit card fraud, insider trading)

- Healthcare (e.g., cancer detection in medical images, drug discovery)

1.3 Neural Networks Fundamentals

1.3.1 Biological inspiration and structure of neural networks

Artificial neural networks (ANNs) are inspired by the structure and function of biological neural networks in the human brain. ANNs consist of interconnected nodes (neurons) organized in layers, with each node performing a simple computation and passing the result to the next layer.
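The layered computation described above fits in a few lines of Python. This minimal sketch makes some assumptions:

- It uses a sigmoid activation.
- Its weights are hand-picked and hypothetical. In a real network they would be learned.
- It uses loops where libraries would use matrix operations.

Each neuron computes a weighted sum of its inputs plus a bias and passes the result through the activation function. The outputs of one layer become the inputs of the next.

```python
import math

def sigmoid(z):
    """Squash any real number into (0, 1); a common activation function."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of its inputs plus a bias, then an activation."""
    return sigmoid(sum(x * w for x, w in zip(inputs, weights)) + bias)

def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# A 2-input -> 2-hidden -> 1-output network with hand-picked (not learned) weights.
x = [1.0, 0.0]
hidden = layer(x, [[0.5, -0.5], [-0.3, 0.8]], [0.0, 0.1])
output = layer(hidden, [[1.0, -1.0]], [0.0])
print(hidden, output)
```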

1.3.2 How neural networks learn and process information

Neural networks learn by adjusting the strength of connections between neurons based on the input data and the desired output. This process is called training, and it involves feeding the network with many examples and using optimization algorithms to minimize the difference between the predicted and actual outputs.
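A single sigmoid neuron learning the logical OR function can illustrate the training process described above. This example rests on our own assumptions: the data, the learning rate of 0.5, and the 2,000 epochs are arbitrary choices. The weights start at zero. For each example, the code measures the difference between the predicted and desired output, then moves every weight a small step in the direction that reduces the cross-entropy loss. This is plain stochastic gradient descent, one of the simplest of the optimization algorithms mentioned above.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

def mean_loss(data, w, b):
    """Average cross-entropy: how far predictions are from the desired outputs."""
    total = 0.0
    for x, target in data:
        p = predict(w, b, x)
        total -= math.log(p if target == 1 else 1.0 - p)
    return total / len(data)

def train(data, epochs=2000, lr=0.5):
    """Stochastic gradient descent: nudge each weight to reduce the error on each example."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in data:
            error = predict(w, b, x) - target  # predicted minus actual output
            # For a sigmoid neuron with cross-entropy loss, the gradient w.r.t. each
            # weight is error * input, and w.r.t. the bias it is simply error.
            w[0] -= lr * error * x[0]
            w[1] -= lr * error * x[1]
            b -= lr * error
    return w, b

# Labeled training examples for logical OR: (inputs, desired output).
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train(data)
print(mean_loss(data, [0.0, 0.0], 0.0), "->", mean_loss(data, w, b))
print([round(predict(w, b, x)) for x, _ in data])  # [0, 1, 1, 1]
```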

1.3.3 Types of neural networks (e.g., feedforward, recurrent, convolutional)

There are several types of neural networks, each designed for specific tasks:

- Feedforward neural networks: The simplest type of ANN, where information flows in one direction from input to output. Suitable for tasks such as classification and regression.

- Recurrent neural networks (RNNs): ANNs with feedback connections, allowing information to persist and flow in loops. Suitable for tasks involving sequential data, such as language translation and speech recognition.

- Convolutional neural networks (CNNs): ANNs designed to process grid-like data, such as images. They use convolutional layers to learn local patterns and pooling layers to reduce dimensionality.
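The core operations that distinguish these architectures are small. This sketch uses hypothetical kernel and weight values chosen by hand. It shows two things:

- A 1-D convolution followed by max pooling: the CNN ingredients.
- A recurrent cell whose hidden state carries information from earlier inputs forward: the RNN ingredient.

A feedforward network, by contrast, only passes weighted sums forward through its layers. Real CNNs and RNNs learn these weights, operate on 2-D images or long sequences, and stack many such layers.

```python
import math

# Convolutional building blocks.
def conv1d(signal, kernel):
    """'Valid' 1-D convolution: slide the kernel along the signal, taking dot products.

    (Strictly this is cross-correlation, which is what most deep learning
    libraries compute under the name 'convolution'.)
    """
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def max_pool(values, size=2):
    """Non-overlapping max pooling: keep the strongest response in each window."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]

# Recurrent building block.
def rnn_states(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Minimal recurrent cell: the hidden state h feeds back into the next step."""
    h = 0.0
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

edges = conv1d([0, 0, 1, 1, 1, 0, 0], kernel=[-1, 1])  # responds to rises and falls
print(edges)                  # [0, 1, 0, 0, -1, 0]
print(max_pool(edges))        # [1, 0, 0]
print(rnn_states([1, 0, 0]))  # the first input still influences later states
```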

Additional reading:

- “Neural Networks and Deep Learning” by Michael Nielsen (http://neuralnetworksanddeeplearning.com/)

- “A Beginner’s Guide to Neural Networks and Deep Learning” by Pathmind (https://pathmind.com/wiki/neural-network)

- “Types of Artificial Neural Networks & Deep Learning History” by Prof. Ryan Ahmed (https://youtu.be/25Qs7zPfLZo?si=hb2aHC9bwCMpWcXV)

1.4 Deep Learning and its Impact

1.4.1 Definition and overview

Deep learning is a subfield of machine learning that focuses on training artificial neural networks with many layers (deep neural networks) to learn hierarchical representations of data. While traditional machine learning algorithms rely on manual feature engineering and shallow architectures, deep learning allows the network to automatically learn relevant features from raw data, enabling it to tackle more complex tasks and achieve state-of-the-art performance in various domains.

1.4.2 Key concepts and architectures in deep learning

Deep learning involves several key concepts and architectures that enable it to learn complex patterns and representations from data:

- Artificial Neural Networks (ANNs): The foundation of deep learning, ANNs are composed of interconnected nodes (neurons) organized in layers. Each neuron performs a simple computation on its inputs and passes the result to the next layer.

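- Backpropagation: The algorithm used to train deep neural networks. It applies the chain rule backward through the layers to compute how much each connection weight contributed to the difference between the predicted and actual outputs (the gradient of the loss). An optimizer such as gradient descent then uses these gradients to adjust the weights.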

- Convolutional Neural Networks (CNNs): A type of deep learning architecture designed to process grid-like data, such as images. CNNs use convolutional layers to learn local patterns and pooling layers to reduce dimensionality, making them highly effective for tasks like image classification and object detection.

- Recurrent Neural Networks (RNNs): A type of deep learning architecture designed to process sequential data, such as text or time series. RNNs have feedback connections that allow information to persist across time steps, enabling them to learn temporal dependencies and perform tasks like language translation and speech recognition.
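Backpropagation is the chain rule applied layer by layer, from the loss back to each weight. The sketch below uses a hypothetical network: one input, one tanh hidden unit, and one linear output, trained on a single example with squared-error loss. All parameter values are arbitrary. The code computes the gradients by hand, checks them against a numerical (finite-difference) estimate, and takes one gradient descent step. Frameworks such as PyTorch and TensorFlow automate this bookkeeping through reverse-mode automatic differentiation.

```python
import math

def forward(p, x):
    """Tiny network: one tanh hidden unit followed by one linear output unit."""
    h = math.tanh(p["w1"] * x + p["b1"])
    y_hat = p["w2"] * h + p["b2"]
    return h, y_hat

def loss(p, x, y):
    """Squared error between the prediction and the actual target."""
    _, y_hat = forward(p, x)
    return 0.5 * (y_hat - y) ** 2

def backprop(p, x, y):
    """Gradients of the loss w.r.t. every parameter, via the chain rule (output to input)."""
    h, y_hat = forward(p, x)
    d_yhat = y_hat - y            # dL/dy_hat
    d_h = d_yhat * p["w2"]        # error sent back through the output weight
    d_z1 = d_h * (1.0 - h ** 2)   # times the derivative of tanh at the hidden unit
    return {"w2": d_yhat * h, "b2": d_yhat, "w1": d_z1 * x, "b1": d_z1}

def numerical_grad(p, x, y, eps=1e-6):
    """Central finite differences, used here only to check backprop's answer."""
    grads = {}
    for k in p:
        plus, minus = dict(p), dict(p)
        plus[k] += eps
        minus[k] -= eps
        grads[k] = (loss(plus, x, y) - loss(minus, x, y)) / (2 * eps)
    return grads

params = {"w1": 0.4, "b1": -0.1, "w2": 0.7, "b2": 0.2}  # arbitrary starting weights
x, y = 1.5, 1.0                                          # one training example
grads = backprop(params, x, y)
print(grads)
print(numerical_grad(params, x, y))  # should agree to many decimal places

before = loss(params, x, y)
learning_rate = 0.1
updated = {k: v - learning_rate * grads[k] for k, v in params.items()}  # one gradient descent step
print(before, "->", loss(updated, x, y))  # the loss goes down
```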

1.4.3 Advantages of deep learning over traditional machine learning

Deep learning has several advantages over traditional machine learning:

- Ability to learn from raw, unstructured data (e.g., images, text, audio)

- Capacity to learn more complex and abstract representations of data

- Improved performance on many tasks, such as image and speech recognition

- Reduced need for manual feature engineering

1.4.4 Breakthroughs and achievements in deep learning

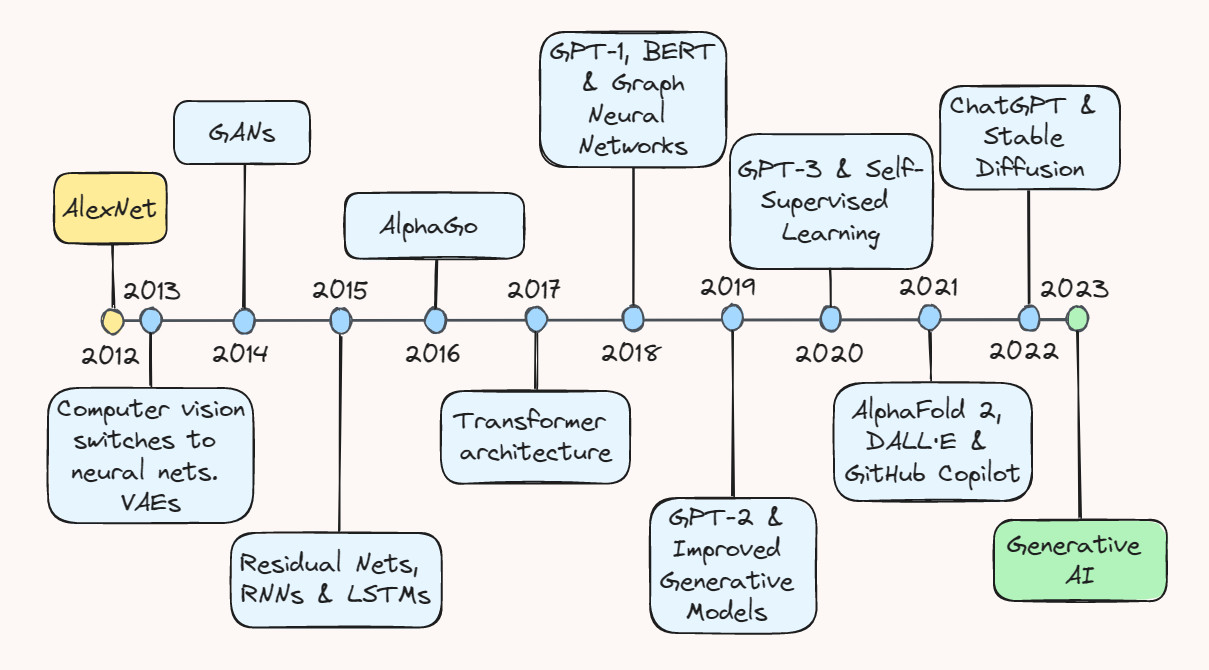

Deep learning has revolutionized various fields by achieving remarkable performance, often surpassing traditional machine learning methods and even human-level capabilities in certain tasks. Some notable achievements of deep learning include:

- Image Classification: Deep convolutional neural networks have matched or exceeded human-level accuracy on some benchmarks for identifying objects, scenes, and faces in images. Models like AlexNet, VGGNet, and ResNet significantly advanced the field of computer vision by automating image classification with previously unattainable accuracy.

- Natural Language Processing (NLP): Deep learning models, such as the Transformer architecture and its variants (e.g., BERT, GPT), have transformed NLP tasks like language translation, sentiment analysis, and question answering. These models can learn contextual representations of words and generate human-like text, enabling more natural and efficient communication between humans and machines.

- Speech Recognition: Deep learning has dramatically improved the accuracy of speech recognition systems, enabling near-human-level performance in tasks like speech-to-text transcription and powering voice-based virtual assistants. Models like DeepSpeech set new benchmarks in recognizing spoken language. Related models like WaveNet made synthesized speech far more natural, helping computers both understand and respond in spoken language.

- Reinforcement Learning: Deep reinforcement learning algorithms have achieved remarkable success in complex tasks, such as playing Go, chess, and complex video games, and controlling robotic systems. For example, AlphaGo (the board game Go) and OpenAI Five (the video game Dota 2) learned intricate strategies through trial-and-error learning and went on to defeat top human players.

1.4.5 Relevance of deep learning to prompting and language-based AI

Deep learning plays a crucial role in the development of language-based AI systems and the prompting techniques that will be the focus of this course. Large Language Models (LLMs) such as GPT are at the forefront of natural language processing and generation. They, along with related models such as BERT that focus on understanding text, are built upon deep learning architectures like the Transformer.

These deep learning-based LLMs have the ability to understand and generate human-like text, perform various language tasks, and respond to prompts in a coherent and contextually relevant manner. By learning from vast amounts of text data, LLMs can capture the nuances and intricacies of human language, enabling more natural and effective human-machine interaction through prompting.
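Generative LLMs such as GPT are trained to predict the next token of text. Responding to a prompt amounts to repeatedly predicting what comes next. The toy below illustrates only that objective. It is a word-level bigram counter, not a neural network, and the tiny corpus and function names are hypothetical. An LLM replaces the counting with a deep Transformer that conditions on the whole preceding context rather than just the last word, which is what makes its continuations coherent.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def complete(prompt, model, max_words=4):
    """Greedily extend a prompt with the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_words):
        options = model.get(words[-1])
        if not options:
            break  # the last word never appeared with a successor in training
        words.append(options.most_common(1)[0][0])
    return " ".join(words)

corpus = ("the cat sat on the mat . the cat chased the mouse . "
          "the dog sat on the rug .")
model = train_bigrams(corpus)
print(complete("the dog", model))  # the dog sat on the cat
```

The continuation "the dog sat on the cat" stitches together fragments of the training text without any grasp of meaning, because each choice looks only one word back.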

Understanding the fundamentals of deep learning, its key concepts, and its impact on AI will provide learners with a solid foundation for exploring the capabilities and applications of LLMs and prompting techniques in the subsequent modules of this course.

Additional reading:

- “Deep Learning” by Ian Goodfellow, Yoshua Bengio, and Aaron Courville (https://www.deeplearningbook.org/)

- “A Brief History of Deep Learning” by Catalin Voss (https://towardsdatascience.com/a-brief-history-of-deep-learning-9c1c1d81d58d)

- “The Transformer: A Novel Neural Network Architecture for Language Understanding” by Jakob Uszkoreit (https://ai.googleblog.com/2017/08/transformer-novel-neural-network.html)

1.5 Applications of AI, Machine Learning, and Neural Networks

1.5.1 Computer vision and image recognition

Computer vision and image recognition are among the most prominent applications of AI, machine learning, and neural networks. These technologies enable computers to interpret and understand visual information from images and videos, opening up a wide range of possibilities in areas such as:

- Object Detection and Tracking: Identifying and tracking specific objects within images or video streams, with applications in areas such as autonomous vehicles, surveillance systems, and augmented reality.

- Facial Recognition: Recognizing and verifying individuals based on their facial features, used in security systems, photo tagging, and emotion detection.

- Medical Image Analysis: Assisting in the diagnosis and treatment of diseases by analyzing medical images such as X-rays, CT scans, and MRIs, helping to detect abnormalities and guide clinical decision-making.

1.5.2 Natural language processing and speech recognition

Natural language processing (NLP) and speech recognition are key areas where AI, machine learning, and neural networks are making significant strides, enabling computers to understand, interpret, and generate human language. Some notable applications include:

- Language Translation: Automating the translation of text or speech from one language to another, facilitating global communication and breaking down language barriers.

- Sentiment Analysis: Determining the emotional tone or opinion expressed in a piece of text, useful for understanding customer feedback, social media monitoring, and market research.

- Speech-to-Text and Text-to-Speech: Converting spoken words into written text and vice versa, enabling voice-based interfaces, transcription services, and accessibility features.

- Chatbots and Virtual Assistants: Developing conversational AI systems that can understand and respond to user queries, provide information, and assist with various tasks.

These applications are explored in greater detail, including key concepts, techniques, and real-world examples, in the Natural Language Processing (NLP) module. The Large Language Models (LLMs) module then delves into the advancements and capabilities of LLMs, which have revolutionized language-based AI.
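Sentiment analysis is often framed as supervised text classification. As a minimal sketch, here is a multinomial naive Bayes classifier with add-one smoothing. This is a classical machine learning technique, not the deep learning models used in modern systems. The four training reviews and the function names are hypothetical. The classifier learns how often each word appears in positive and negative examples. It then labels new text with whichever class makes its words most probable.

```python
import math
from collections import Counter

def train_nb(examples):
    """Multinomial naive Bayes: count documents per label and words per label."""
    word_counts = {label: Counter() for _, label in examples}
    label_counts = Counter(label for _, label in examples)
    for text, label in examples:
        word_counts[label].update(text.lower().split())
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, label_counts, vocab

def classify(text, model):
    """Pick the label with the highest log prior + log likelihood of the words."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / total_docs)
        for w in text.lower().split():
            # Add-one (Laplace) smoothing so unseen words do not zero out the score.
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

reviews = [
    ("great product works perfectly", "positive"),
    ("love it great value", "positive"),
    ("terrible quality broke quickly", "negative"),
    ("awful support would not buy again", "negative"),
]
model = train_nb(reviews)
print(classify("great quality love it", model))        # positive
print(classify("broke after a week terrible", model))  # negative
```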

1.5.3 Robotics and autonomous systems

AI, machine learning, and neural networks are crucial components in the development of robotics and autonomous systems, enabling machines to perceive, reason, and act in complex environments. Some applications in this domain include:

- Industrial Robots: Enhancing manufacturing processes through intelligent automation, enabling robots to perform tasks such as assembly, welding, and quality control with increased efficiency and precision.

- Autonomous Vehicles: Developing self-driving cars, trucks, and drones that can navigate and make decisions based on real-time sensor data and machine learning algorithms, potentially revolutionizing transportation and logistics.

- Robotic Process Automation: Automating repetitive and rule-based tasks in various industries, such as data entry, invoicing, and customer support, using software robots that can interact with digital systems and applications.

1.5.4 Healthcare and medical diagnosis

AI, machine learning, and neural networks are transforming healthcare and medical diagnosis, assisting medical professionals in making more accurate and efficient decisions. Some applications include:

- Medical Image Analysis: Analyzing medical images such as X-rays, CT scans, and MRIs to detect abnormalities, classify diseases, and guide treatment planning, potentially improving diagnostic accuracy and patient outcomes.

- Personalized Medicine: Leveraging patient data and machine learning algorithms to develop tailored treatment plans based on an individual’s genetic profile, medical history, and lifestyle factors, enabling more targeted and effective therapies.

- Drug Discovery and Development: Accelerating the identification of new drug candidates and predicting their efficacy and safety using machine learning techniques, reducing the time and cost associated with traditional drug discovery methods.

1.5.5 Finance and fraud detection

AI, machine learning, and neural networks are playing an increasingly important role in the financial sector, helping to detect fraud, assess risk, and automate various processes. Some applications include:

- Fraud Detection: Analyzing transactional data and patterns to identify potential fraudulent activities in real-time, such as credit card fraud or money laundering, helping financial institutions prevent losses and protect customers.

- Credit Scoring and Loan Approval: Assessing the creditworthiness of individuals and businesses using machine learning algorithms that analyze financial data, employment history, and other relevant factors, streamlining the loan application process and reducing the risk of default.

- Algorithmic Trading: Developing trading strategies and executing trades based on real-time market data and machine learning models, potentially increasing the speed and efficiency of financial markets while managing risk.
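Fraud detection rests on learning what normal activity looks like and flagging departures from it. A simple statistical anomaly detector can sketch that core idea. This is a deliberately minimal illustration built on our own assumptions: hypothetical transaction amounts, a single feature, and a z-score threshold of 3. It is not how production systems work. Those typically combine many features with models trained on labeled fraud cases.

```python
import statistics

def fit_profile(history):
    """Learn 'normal' behaviour: mean and standard deviation of past amounts."""
    return statistics.fmean(history), statistics.stdev(history)

def flag_unusual(amounts, profile, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the learned mean."""
    mean, std = profile
    return [a for a in amounts if abs(a - mean) / std > threshold]

history = [42.0, 38.5, 55.0, 47.2, 51.3, 40.1, 44.8, 49.9]  # past, presumed-legitimate
profile = fit_profile(history)
print(flag_unusual([46.0, 2500.0, 39.0], profile))  # [2500.0]
```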

1.6 Importance of AI in Today’s World

1.6.1 Transformative potential across industries

AI has the potential to revolutionize various industries, such as:

- Healthcare: Improving patient diagnosis, accelerating drug discovery, enabling personalized medicine, and improving healthcare delivery and outcomes

- Safety and Security: Enhancing safety, efficiency, and accessibility through autonomous vehicles, cybersecurity, surveillance systems, and disaster response

- Personalization: Delivering personalized experiences in entertainment, advertising, and e-commerce through recommendations and services tailored to individual preferences

- Manufacturing: Optimizing production processes, reducing waste, and improving quality control

- Education: Personalizing learning experiences, automating grading, and providing intelligent tutoring

1.6.2 Economic and social implications

The widespread adoption of AI is expected to have significant economic and social implications, such as:

- Increased productivity and economic growth

- Job displacement and the need for reskilling and upskilling

- Widening the gap between AI-adopters and non-adopters

- Ethical concerns regarding privacy, bias, and accountability

1.6.3 Challenges and ethical considerations

The development and deployment of AI systems come with several challenges and ethical considerations, such as:

- Ensuring transparency and explainability of AI decision-making processes

- Addressing bias and discrimination in AI algorithms and datasets

- Protecting privacy and security of personal data used in AI systems

- Determining accountability and responsibility for AI-driven actions and decisions

Additional reading:

- “The Future of AI: Opportunities and Challenges” by the European Commission (https://ec.europa.eu/digital-single-market/en/news/future-ai-opportunities-and-challenges)

- “Ethical Challenges in Artificial Intelligence” by the IEEE (https://ethicsinaction.ieee.org/ethical-challenges-in-artificial-intelligence/)

1.7 Summary

This introductory module provided a solid foundation for understanding Artificial Intelligence (AI), Machine Learning, and Neural Networks. Here are the key takeaways:

- AI Fundamentals: We learned the definition of AI and its key characteristics, such as learning from experience, processing data, recognizing patterns, and adapting to new situations. The different types of AI – narrow, general, and superintelligent – were also covered.

- Machine Learning: As a subset of AI, machine learning focuses on algorithms that can learn from data and improve performance on specific tasks. The three main types – supervised, unsupervised, and reinforcement learning – were explored.

- Neural Networks: Inspired by the human brain, neural networks process information by adjusting connection strengths between nodes. We examined different architectures like feedforward, recurrent, and convolutional networks designed for various applications.

- Deep Learning: This powerful technique, utilizing multi-layered neural networks, has achieved remarkable breakthroughs in areas like image classification, natural language processing, speech recognition, and reinforcement learning. Notable models such as AlexNet, DeepSpeech, and AlphaGo were highlighted.

- AI Applications: The module showcased AI’s transformative impact across domains like computer vision, natural language processing, robotics, healthcare, and finance. Real-world examples demonstrated AI’s potential in areas such as object detection, facial recognition, language translation, and medical diagnosis.

- Importance and Implications: We discussed AI’s potential to revolutionize industries and drive economic growth, along with its associated ethical considerations such as transparency, bias, privacy, and accountability.

With this comprehensive introduction, you now have a solid grasp of AI, machine learning, and neural networks – their fundamentals, applications, and implications. You are well-equipped to explore more advanced concepts and techniques in subsequent modules.