Module 7: Prompting Fundamentals

Welcome to the world of prompting – the essential bridge between human language and artificial intelligence. This module will equip you with the knowledge and skills to effectively communicate with AI models, unlocking their vast potential across a wide range of applications.

- Goal: To equip learners with a comprehensive understanding of various prompting techniques that enhance the performance and applicability of AI models in real-world scenarios. This includes mastering the art of crafting effective prompts that guide AI behavior accurately and responsibly.

- Objective: By the end of this module, learners will understand the fundamentals of prompting, learn popular techniques like zero-shot and few-shot prompting, explore advanced methods, and apply their knowledge through practical demonstrations and projects.

Prompting lies at the core of human-AI interaction, enabling users to tap into the incredible capabilities of language models like ChatGPT. This module delves into the principles and best practices of prompt design, empowering you to craft clear, concise, and contextually relevant prompts that elicit accurate and useful responses.

You’ll gain mastery over essential techniques such as zero-shot prompting, few-shot prompting, and chain-of-thought prompting, learning when and how to effectively apply each approach. The module then expands your skillset by introducing advanced prompting methods like prompt chaining, multimodal prompting, and techniques for bias reduction.

Through interactive examples and hands-on projects, you’ll put your prompting skills into practice, experiencing firsthand the transformative power of effective prompting across various domains and use cases. You’ll also learn strategies for mitigating common issues like hallucinations and maintaining character consistency.

By the end of this module, you’ll possess a comprehensive understanding of prompting fundamentals, enabling you to unlock the full potential of AI models and drive innovation in fields ranging from healthcare and finance to education and creative industries.

- 7.1 Introduction to Prompting

- 7.2 Prompt Basics and Formatting

- 7.3 Essential Prompting Techniques

- 7.4 Advanced Prompting Methods

- 7.5 Choosing the Right Prompting Method

- 7.6 Common Issues and Challenges

- 7.7 Summary

7.1 Introduction to Prompting

7.1.1 What is prompting?

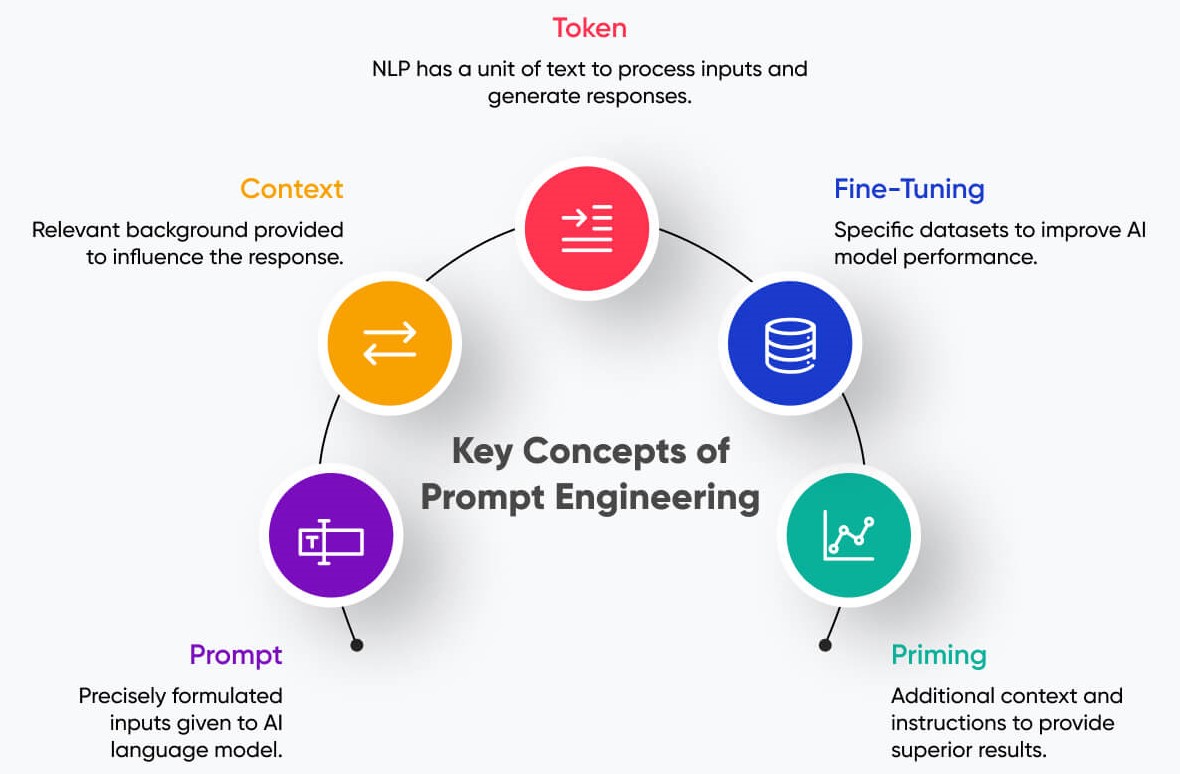

Prompting is the process of providing input to an AI model, like ChatGPT, to elicit a relevant response. A prompt is usually in the form of a question, instructions, or a request for the AI to perform a specific task or provide information.

For example, asking “What is the capital of France?” is a simple prompt that would likely result in the AI responding with “Paris.”

Prompts can range from simple, straightforward questions to complex, multi-part instructions. The quality and clarity of the prompt can significantly impact the quality and accuracy of the AI’s response.

7.1.2 How does prompting work?

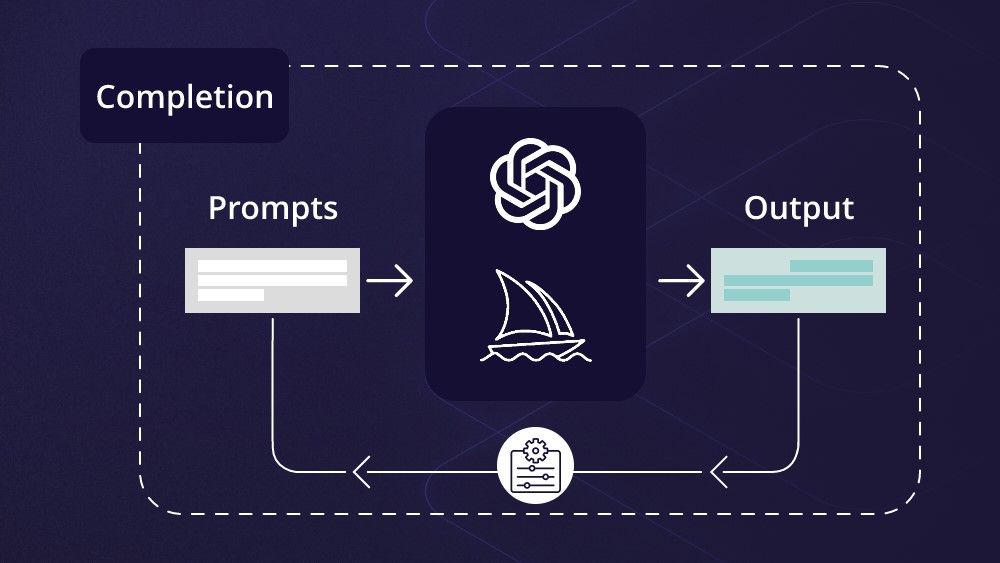

AI models like ChatGPT are trained on vast amounts of text data, allowing them to understand and generate human-like language. When a user provides a prompt, the AI processes the input and uses its training to predict the most likely and relevant response.

The AI generates its response one token (word or subword) at a time, based on the context provided by the prompt and its own internal knowledge. It does not have a predetermined response; instead, it dynamically creates the output based on the prompt and its training.
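
The token-by-token process can be illustrated with a toy sketch. The probability table below is a hypothetical stand-in for what a real model computes with a neural network, and it looks only at the previous token, whereas a real model conditions on the whole prompt and everything generated so far. The function name `generate` and all values are assumptions for illustration.

```python
import random

# Hypothetical next-token probabilities; a real model computes these
# from the full context with a neural network.
NEXT_TOKEN_PROBS = {
    "<start>": {"The": 1.0},
    "The": {"capital": 1.0},
    "capital": {"of": 1.0},
    "of": {"France": 1.0},
    "France": {"is": 1.0},
    "is": {"Paris": 0.9, "Lyon": 0.1},
    "Paris": {"<end>": 1.0},
    "Lyon": {"<end>": 1.0},
}

def generate(seed=None, greedy=True, max_tokens=20):
    """Build an output one token at a time until <end> or max_tokens."""
    rng = random.Random(seed)
    token = "<start>"
    output = []
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[token]
        if greedy:
            # Always pick the most likely next token.
            token = max(probs, key=probs.get)
        else:
            # Sample the next token according to its probability.
            token = rng.choices(list(probs), weights=list(probs.values()))[0]
        if token == "<end>":
            break
        output.append(token)
    return " ".join(output)

print(generate())  # The capital of France is Paris
```

With `greedy=False`, the same sketch can occasionally produce a different continuation, which is why the same prompt does not always yield the same response.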

7.1.3 Role of Prompting in AI and NLP

Effective prompting is essential for maximizing the capabilities of AI models. Clear, structured, and relevant prompts enable these models to produce accurate and insightful responses, making them versatile for various tasks including question-answering, recommendations, creative writing, and problem-solving. The quality of the prompts heavily influences the quality and relevance of AI-generated outputs, highlighting prompt design as a critical skill for those working with AI and NLP technologies.

Benefits of Effective Prompting

- Improved accuracy: Clear and specific prompts help the AI understand the user’s intent, leading to more accurate and relevant responses.

- Enhanced efficiency: Well-crafted prompts enable users to quickly and easily access the information or assistance they need, saving time and effort.

- Increased versatility: By learning how to prompt effectively, users can leverage the AI’s capabilities across a wide range of tasks and domains, from research and analysis to creative writing and problem-solving.

- Better user experience: High-quality prompts lead to more satisfactory interactions with the AI, as users are more likely to receive the information or assistance they seek.

- Unlocking AI’s potential: Effective prompting allows users to harness the full potential of AI language models, pushing the boundaries of what these tools can accomplish and fostering innovation in various fields.

Additional reading:

- “Introduction to Prompting” by Anthropic (https://docs.anthropic.com/claude/docs/intro-to-prompting)

- “Introduction to Prompt Design” by Google Cloud (https://cloud.google.com/vertex-ai/generative-ai/docs/learn/prompts/introduction-prompt-design)

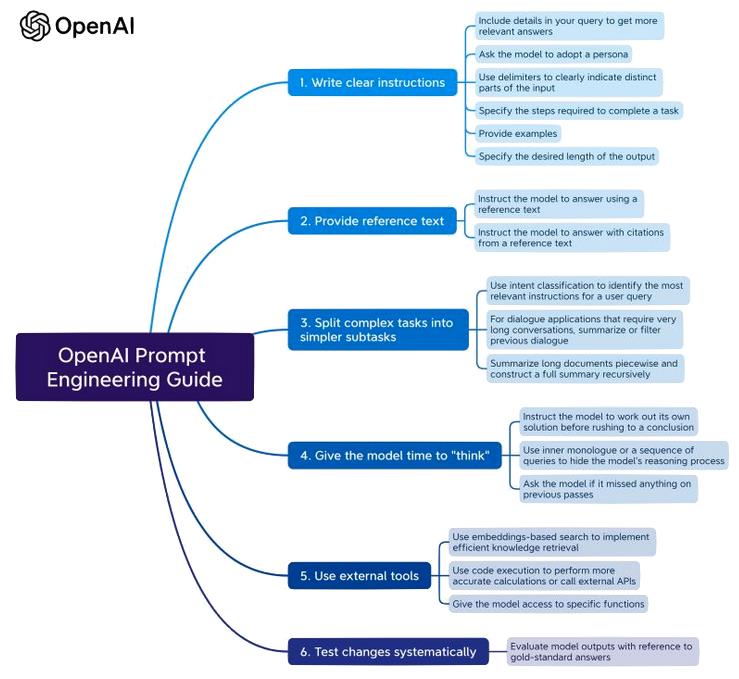

- “Prompt Engineering” by OpenAI (https://platform.openai.com/docs/guides/prompt-engineering)

7.2 Prompt Basics and Formatting

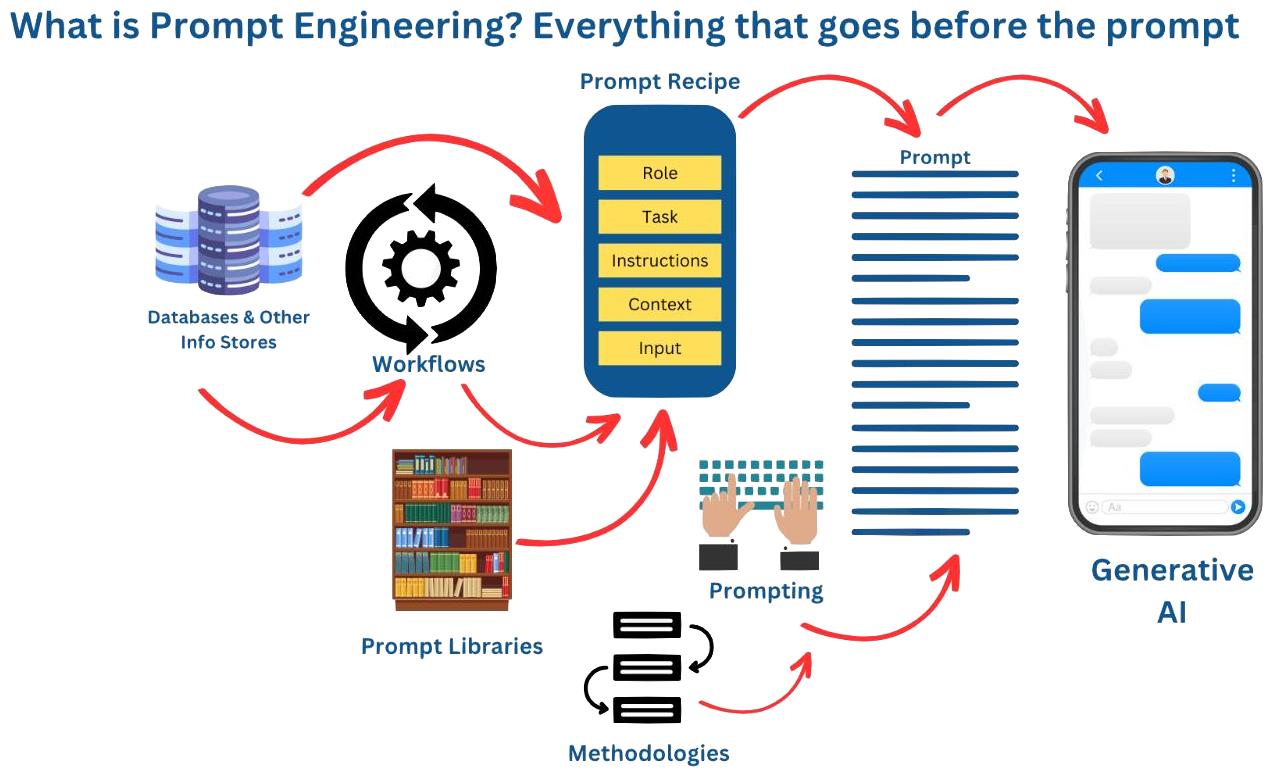

7.2.1 Prompt Breakdown

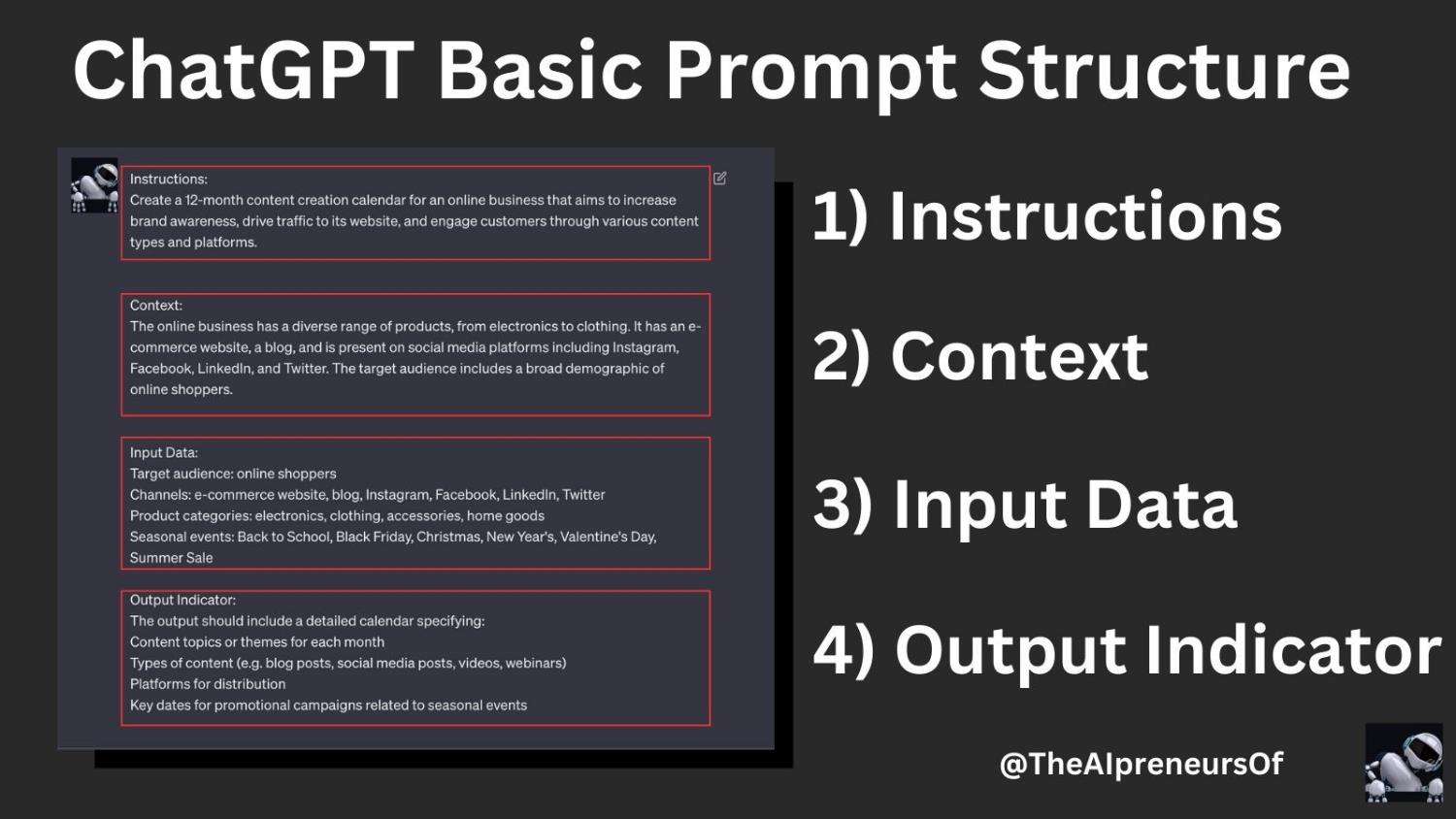

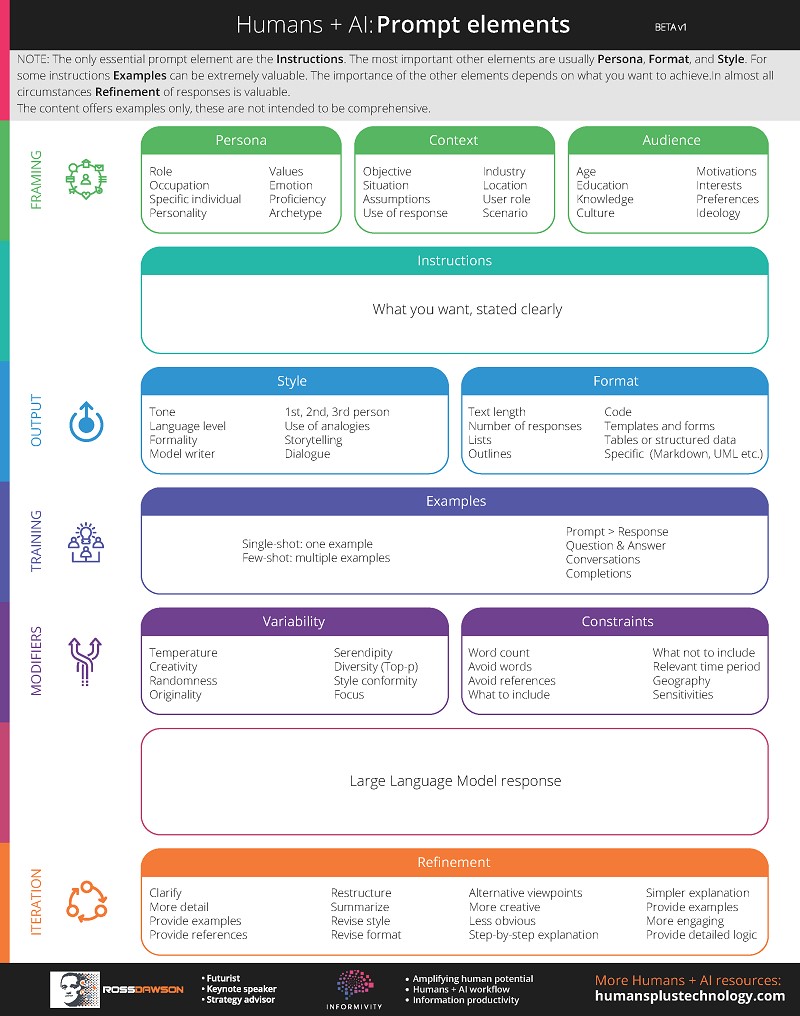

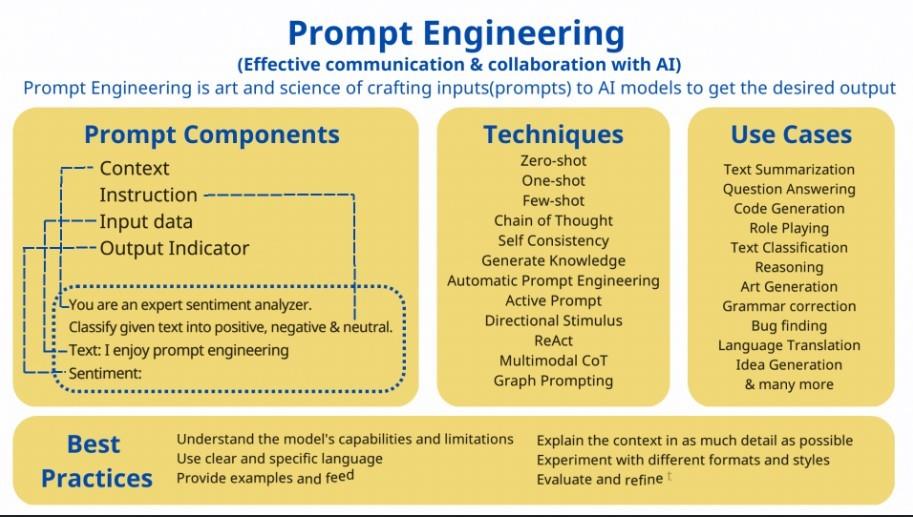

A well-structured prompt consists of the following key elements:

- Instruction: A clear, specific task or instruction for the model to perform. This could be a question, a command, or a description of the desired output.

- Context: Background information, constraints, or examples that help guide the model towards providing relevant and accurate responses. Context can include domain-specific knowledge, formatting requirements, or sample input-output pairs.

- Input Data: The actual data or query you want the model to process, such as a question to be answered, a passage to be summarized, or code to be explained.

- Output Indicator: A specific format or structure for the model’s output, such as “Sentiment:” for a sentiment analysis task or “Translation:” for a language translation task. Output indicators help the model understand how to present its results.

Example:
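
As a minimal sketch, the four elements can be assembled into a single prompt string. The helper name `build_prompt` and the sample wording are illustrative assumptions, not part of any library.

```python
def build_prompt(instruction, input_data, output_indicator, context=""):
    """Join the four prompt elements, one per line, skipping empty context."""
    parts = [instruction]
    if context:
        parts.append(context)
    parts.append(input_data)
    parts.append(output_indicator)
    return "\n".join(parts)

prompt = build_prompt(
    instruction="Summarize the following text in 3 sentences or less.",
    context="The audience is high school students with no prior knowledge of the subject.",
    input_data="Text: [text to be summarized]",
    output_indicator="Summary:",
)
print(prompt)
```

Ending the prompt with the output indicator ("Summary:") signals where the model's answer should begin.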

7.2.2 Formatting Guidelines

1. Use clear and concise language: Provide precise instructions to describe the task and the desired output, avoiding ambiguity or vague language.

- Example: “Write a summary of the following text in 3 sentences or less.”

2. Provide relevant context: Include background information, constraints, or examples that can help guide the model towards more accurate and relevant responses.

- Example: “Consider the target audience for this summary: high school students with no prior knowledge of the subject matter.”

3. Split complex tasks into simpler subtasks: Break down complicated tasks into smaller, more manageable steps to help the model generate better responses.

- Example: “First, identify the main themes in the text. Then, summarize each theme in one sentence.”

4. Allow the model time to think: For more complex tasks, ask the model to reason through the problem before giving its final answer, for example by allowing a longer response or using a multi-step approach.

- Example: “Take a moment to consider the implications of the text before providing your summary.”

5. Use external tools: Incorporate external tools or resources to help the model generate more accurate or comprehensive responses when necessary.

- Example: “Use a thesaurus to find synonyms for commonly repeated words in your summary to improve readability.”

6. Evaluate output: Assess the model’s output and provide feedback or additional guidance to refine the response if needed.

- Example: “Review your summary to ensure it captures the main points of the text accurately and concisely. If not, please revise it accordingly.”

Writing Prompts – Syntax Patterns:

- Question-Answer (Q&A) format:

Q: What are the main themes in this text?

A: The main themes are love, loss, and redemption.

- Instructional format:

Instruction: Summarize the following text for a high school audience.

Context: The text is a complex philosophical argument about the nature of reality.

Input: [Text to be summarized]

Output: [3-sentence summary]

- Few-shot prompting:

Example 1: Text: “The cat sat on the mat.” Summary: “A cat rested on a mat.”

Example 2: Text: “The dog chased the ball.” Summary: “A dog played with a ball.”

Prompt: Text: “The bird sang in the tree.” Summary:

7.2.4 Additional Prompt Elements

In addition to the key components, various advanced prompt elements can be used to refine prompts further and guide the model to produce more accurate and relevant responses. It’s important to note that these additional prompt elements interact with and build upon the key components mentioned in section 7.2.1.

“Instructions” are the most important element of a prompt; the other elements add depth to the query. By strategically combining these additional elements with the key components, users can create highly tailored and effective prompts that guide the AI model to produce the desired output.

Instructions: Clearly state the specific task or action the model should perform.

- Example: “Write an engaging introduction paragraph for a blog post about the benefits of meditation.”

Persona: Specify the role or character the model should adopt when generating responses.

- Example: “Act as a seasoned journalist writing an article for a major newspaper.”

Content: Provide the main topics, ideas, or information that should be included in the response.

- Example: “Make sure to cover the following points: the causes of the event, its impact on society, and potential solutions.”

Audience: Define the target audience for the generated content, which can help tailor the response’s complexity, tone, and style.

- Example: “The intended audience for this explanation is middle school students learning about the topic for the first time.”

Style: Describe the desired writing style, tone, or voice for the generated content.

- Example: “Use a friendly, conversational tone as if you were explaining the topic to a close friend.”

Format: Specify the structure or layout of the response, such as paragraphs, bullet points, or a specific template.

- Example: “Please format your response as a numbered list of steps.”

Examples: Provide relevant examples to illustrate the desired output format, style, or content.

- Example: “Here’s an example of the type of poem I’m looking for: [example poem]”

Variability: Adjust sampling settings such as temperature to control the randomness or creativity of the generated content. Temperature is usually set as an API or application parameter rather than in the prompt text itself.

- Example: “Set the temperature to 0.7 to introduce some variation in the response while still staying on topic.”

Constraints: Set limitations or requirements for the generated content, such as word count, time period, or perspective.

- Example: “The response should be between 200-250 words and written from a first-person perspective.”

Refinement: Provide iterative feedback or guidance to help the model improve its response.

- Example: “That’s a good start, but can you elaborate more on the second point you made?”
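
To make the Variability element more concrete, the sketch below shows how temperature is commonly applied: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The logit values are hypothetical, and the function name `softmax_with_temperature` is an assumption for illustration; real systems apply this inside the model's sampling step.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate probability on the top-scoring token, giving more predictable output; higher temperatures spread probability across the candidates, giving more varied output.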

Additional reading:

- “Prompt Basics” by The Prompting Guide (https://www.promptingguide.ai/introduction/basics)

- “AI Prompts” by Harvard University (https://huit.harvard.edu/news/ai-prompts)

- “Best Practices for Prompt Engineering with OpenAI API” by OpenAI (https://help.openai.com/en/articles/6654000-best-practices-for-prompt-engineering-with-the-openai-api)

7.3 Essential Prompting Techniques

Zero-shot, few-shot, and chain-of-thought prompting are crucial because they enable effective communication with AI models, allowing them to produce relevant and accurate responses even without extensive training examples. Mastering these techniques equips users to quickly adapt AI models for a variety of tasks, enhancing their utility in real-world applications. These methods also lay the groundwork for the advanced prompting techniques discussed in section 7.4, which further enhance the AI’s ability to handle complex or specialized tasks.

7.3.1 Zero-Shot Prompting

Zero-shot prompting is a technique where a language model is asked to perform a task or answer a question without any prior examples or task-specific training. In other words, the model is expected to generate a response based solely on the prompt and its pre-existing knowledge acquired during its initial training on a large corpus of text data.

The key aspects of zero-shot prompting are:

- No additional training: The model is not fine-tuned or trained on any specific examples related to the task at hand.

- Reliance on pre-existing knowledge: The model draws upon the vast knowledge it has gained during its initial training to understand and respond to the prompt.

- Single prompt: The task or question is presented to the model in a single prompt, without any worked examples of the task.

One of the main advantages of zero-shot prompting is its ability to quickly assess a model’s performance on a wide range of tasks without the need for task-specific training data. This makes it a valuable tool for evaluating the versatility and adaptability of language models across different domains and applications.

However, it’s important to note that the success of zero-shot prompting largely depends on the quality and breadth of the model’s initial training data, as well as the clarity and specificity of the prompt itself. If the model has not been exposed to sufficient information related to the task during its training, or if the prompt is ambiguous or lacks necessary context, the model’s performance may be suboptimal.

Structuring a Zero-Shot Prompt:

- Clearly state the task or instruction

- Provide necessary context

- Include the input data

- Specify the desired output format

Example 1

“Classify the text into neutral, negative, or positive. Text: I think the vacation is okay. Sentiment:”

- Instruction: “Classify the text” This part of the prompt clearly states the task or instruction for the model to perform, which is to classify the sentiment of the given text.

- Context: In this example, there is no explicit context provided. However, the instruction itself implies that the model should classify the sentiment based on the categories: neutral, negative, or positive.

- Input Data: “Text: I think the vacation is okay.” This is the actual data or query that the model needs to process. In this case, it is the text that needs to be classified based on its sentiment.

- Output Indicator: “Sentiment:” The word “Sentiment:” at the end of the prompt serves as an output indicator, specifying the format or structure of the model’s expected output. It indicates that the model should provide the sentiment classification for the given text.

Example 2

“Translate the following English text to French: ‘The quick brown fox jumps over the lazy dog.’ French Translation:”

- Instruction: “Translate the following” This part of the prompt provides a clear instruction for the model to perform a translation task from English to French.

- Context: The prompt specifies the source language (English) and the target language (French) for the translation task.

- Input Data: “‘The quick brown fox jumps over the lazy dog.’” This is the English text that needs to be translated into French.

- Output Indicator: “French Translation:” This output indicator specifies that the model should provide the French translation of the given English text.

By clearly identifying and separating these elements within the prompt, it becomes easier for the model to understand the task, the input it needs to process, and the expected format of the output. This structured approach to prompting helps ensure more accurate and relevant responses from the AI model.
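
The structure from Example 1 can be sketched in code. The helper names `zero_shot_prompt` and `parse_label` are hypothetical; the second function shows one simple way to check that a model's reply is one of the allowed labels, assuming the reply begins with the label.

```python
LABELS = ("neutral", "negative", "positive")

def zero_shot_prompt(text, labels=LABELS):
    """Build a zero-shot classification prompt with no examples."""
    label_list = ", ".join(labels[:-1]) + ", or " + labels[-1]
    return f"Classify the text into {label_list}. Text: {text} Sentiment:"

def parse_label(response, labels=LABELS):
    """Return the allowed label the response starts with, or None."""
    cleaned = response.strip().lower()
    for label in labels:
        if cleaned.startswith(label):
            return label
    return None

prompt = zero_shot_prompt("I think the vacation is okay.")
print(prompt)
print(parse_label(" Neutral."))  # neutral
```

Checking the reply against a fixed label set is a cheap safeguard when the output feeds into other code.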

7.3.2 Few-Shot Prompting

Few-shot prompting is a technique where a language model is provided with a small number of examples or demonstrations of a task before being asked to perform the same task on new, unseen data. The model learns from these few examples and adapts its behavior to generate responses for the new input. This method is particularly useful when the model requires more guidance to understand the context or the specifics of a task, such as in specialized domains or complex scenarios where zero-shot prompting might not provide accurate enough results.

The key aspects of few-shot prompting are:

- Limited examples: The model is given a small set of examples (usually between 1 and 10) that demonstrate the desired input-output behavior for a specific task.

- Task-specific adaptation: By studying the provided examples, the model learns to adapt its response generation to align with the given task, without the need for extensive fine-tuning or retraining.

- Improved accuracy: Few-shot prompting often leads to better performance compared to zero-shot prompting, as the model has some reference points to guide its response generation.

Benefits of few-shot prompting include:

- Precision: By providing models with specific examples, few-shot prompting achieves higher precision, especially in tasks that are nuanced or highly specialized.

- Customization: This method enables models to adapt to particular styles or formats, providing outputs that are closely aligned with user expectations.

Examples demonstrating few-shot prompting:

In few-shot prompting, the model is provided with a small number of examples that demonstrate how to perform a specific task. Let’s consider a sentiment analysis task where the model needs to classify the sentiment of a given text as positive, negative, or neutral.

Example 1

Text: I loved the movie! It was an amazing experience.

Sentiment: Positive

Example 2

Text: The food was okay, but the service was terrible.

Sentiment: Negative

Example 3

Text: The product arrived on time and met my expectations.

Sentiment: Neutral

Each example consists of four key components:

- Input Data: The text that needs to be analyzed for sentiment.

- Output Indicator: The label “Sentiment:” indicates that the model should provide a sentiment classification.

- Context: The example text and its corresponding sentiment label establish the context for the sentiment analysis task.

- Instruction: Although not explicitly stated, the examples implicitly instruct the model to classify the sentiment of the given text.

Example 4

New Text:

Text: I found the book to be quite interesting, but some chapters were a bit slow.

Sentiment: Neutral

When presented with a new, unseen text, the model uses the patterns and information learned from the provided examples to classify the sentiment. In this case, the model would likely classify the sentiment of the new text as neutral based on the balanced opinion expressed and the patterns learned from the examples.

The model’s performance in few-shot prompting depends on its ability to:

- Understand the task from the given examples (sentiment analysis)

- Identify the relevant information in the input data (text to be analyzed)

- Recognize the desired output format (sentiment label)

- Adapt its knowledge to generate an appropriate response for the new text

By examining how the model processes the examples and generates a response for the new text, learners can gain a better understanding of the few-shot prompting technique and the importance of providing clear, representative examples to guide the model’s performance.
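
A minimal sketch of how the examples above could be combined with a new text into one few-shot prompt. The function name `few_shot_prompt` is a hypothetical helper; the example texts and labels are the ones used in this section.

```python
EXAMPLES = [
    ("I loved the movie! It was an amazing experience.", "Positive"),
    ("The food was okay, but the service was terrible.", "Negative"),
    ("The product arrived on time and met my expectations.", "Neutral"),
]

def few_shot_prompt(examples, new_text):
    """Format labeled examples, then the new text with an empty label."""
    blocks = [f"Text: {text}\nSentiment: {label}" for text, label in examples]
    # The final block ends at the output indicator so the model fills in the label.
    blocks.append(f"Text: {new_text}\nSentiment:")
    return "\n\n".join(blocks)

prompt = few_shot_prompt(
    EXAMPLES,
    "I found the book to be quite interesting, but some chapters were a bit slow.",
)
print(prompt)
```

Keeping every example in the same "Text / Sentiment" layout makes the pattern easy for the model to continue.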

Optimizing few-shot performance:

Varying the number of examples and the types of prompts can optimize performance, and iterative refinement of prompts based on preliminary results can further enhance the model’s accuracy in few-shot scenarios. For instance, providing more diverse examples or adjusting the prompts to be more specific can help the model better understand the nuances of the task and generate more accurate responses.

Few-shot prompting is particularly useful when you have a small amount of labeled data for a specific task, or when you need to quickly adapt a model to a new domain or use case without extensive retraining. Some potential applications include:

- Adapting a sentiment analysis model to a new domain, such as analyzing customer reviews for a specific product category.

- Guiding a model to generate text in a specific style or format, such as writing news articles or product descriptions.

- Adapting a language model to a specialized task, like generating code snippets or answering medical queries, using a few examples from the target domain in the prompt.

Few-shot prompting offers a balance between the flexibility of zero-shot prompting and the improved accuracy of fine-tuning, making it a valuable technique in various natural language processing applications. By leveraging the model’s ability to learn from limited examples, few-shot prompting enables users to quickly adapt models to new tasks and domains, improving their performance and utility in real-world scenarios.

7.3.3 Chain-of-Thought Prompting

Chain-of-thought prompting is a technique that guides the model to articulate a step-by-step reasoning process for solving complex problems. This approach is particularly effective in tasks that require detailed logical reasoning or involve multi-step problem-solving, such as mathematical puzzles or complex decision-making scenarios.

Key aspects of chain-of-thought prompting:

- Step-by-step reasoning: The model is encouraged to break down the problem into smaller, manageable parts and provide explicit reasoning for each step.

- Logical flow: By articulating the intermediate steps, the model constructs a logical pathway that leads to the final solution.

- Improved accuracy: Chain-of-thought prompting often leads to more accurate and logically consistent answers, especially in tasks that require complex reasoning.

Constructing a chain-of-thought prompt:

When creating a chain-of-thought prompt, it’s essential to guide the model to break down the problem into smaller parts and provide explicit reasoning for each step. This process helps the model construct a logical pathway that leads to the solution.

Example:

Given Numbers: 3, 7, 4, 9, 2, 5, 8

Chain-of-thought prompt:

Instruction: “Given a set of numbers, determine if the sum of all odd numbers is even. List each step of your reasoning.”

Input Data: 3, 7, 4, 9, 2, 5, 8

Output Indicator: “The sum of all odd numbers is” followed by “even” or “odd”.

Context: The problem requires identifying odd numbers, calculating their sum, and determining if the sum is even or odd.

Model’s response:

Step 1: Identify the odd numbers in the given set.

The odd numbers are: 3, 7, 9, 5.

Step 2: Add up all the odd numbers.

Sum of odd numbers: 3 + 7 + 9 + 5 = 24.

Step 3: Determine if the sum of the odd numbers is divisible by 2.

24 is divisible by 2 because 24 ÷ 2 = 12, which is a whole number.

Therefore, the sum of odd numbers is even.

Step 4: Provide the final answer based on the result from Step 3.

The sum of all odd numbers is even.

In this example, the four key components are clearly defined:

- Input Data: The set of numbers provided (3, 7, 4, 9, 2, 5, 8).

- Output Indicator: The model is expected to provide a final answer in the format “The sum of all odd numbers is” followed by “even” or “odd”.

- Context: The problem statement gives the context of the task, which involves identifying odd numbers, calculating their sum, and determining if the sum is even or odd.

- Instruction: The chain-of-thought prompt explicitly instructs the model to list each step of its reasoning process while determining if the sum of all odd numbers is even.

By following the chain-of-thought prompt, the model articulates its reasoning process step by step, making the intermediate calculations and decisions explicit. This approach helps the model organize its thoughts and arrive at the final solution in a structured manner, demonstrating a clear understanding of the problem and the logical steps required to solve it.
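
The arithmetic in the model's response can be checked independently. This short sketch repeats the same steps in code, using the numbers from the example.

```python
numbers = [3, 7, 4, 9, 2, 5, 8]

# Step 1: identify the odd numbers.
odd_numbers = [n for n in numbers if n % 2 == 1]

# Step 2: add them up.
total = sum(odd_numbers)

# Step 3: check whether the sum is divisible by 2.
parity = "even" if total % 2 == 0 else "odd"

print(odd_numbers)  # [3, 7, 9, 5]
print(total)        # 24
print(f"The sum of all odd numbers is {parity}.")  # The sum of all odd numbers is even.
```

Verifying intermediate steps like this is one practical benefit of chain-of-thought output: each step can be inspected on its own.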

Benefits of chain-of-thought prompting:

- Enhanced performance: Research has shown that chain-of-thought prompting significantly improves the model’s performance on tasks that involve arithmetic reasoning, commonsense understanding, and symbolic manipulation.

- Complex reasoning: This technique excels in tasks requiring detailed analysis and logical deduction, offering a structured way to approach complex problems.

- Improved understanding: By breaking down problems into intermediate steps, models can generate more accurate and logically consistent answers, demonstrating a deeper understanding of the problem.

Combining chain-of-thought prompting with few-shot examples often results in even better task performance, especially in more challenging problem-solving contexts. The few-shot examples provide the model with a frame of reference, while the chain-of-thought prompting encourages the model to articulate its reasoning process, leading to more accurate and reliable outcomes.

Overall, chain-of-thought prompting is a powerful technique that leverages the model’s ability to process and articulate complex thought sequences, resulting in improved accuracy and performance on tasks that require logical reasoning and multi-step problem-solving.

Additional reading:

- “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models” by Wei et al. (2022) (https://arxiv.org/abs/2201.11903)

- “Scaling Instruction-Finetuned Language Models” by Chung et al. (2022) (https://arxiv.org/abs/2210.11416)

- “Zero-Shot Prompting” by The Prompting Guide (https://www.promptingguide.ai/techniques/zeroshot)

- “Few-Shot Prompting” by The Prompting Guide (https://www.promptingguide.ai/techniques/fewshot)

- “Chain-of-Thought Prompting” by The Prompting Guide (https://www.promptingguide.ai/techniques/cot)

7.4 Advanced Prompting Methods

Advanced prompting methods build upon the essential techniques covered in section 7.3, enabling users to tackle more complex, specific, or nuanced tasks. These methods extend the capabilities of AI models by incorporating additional information, constraints, or guidance into the prompts.

Advanced prompting methods are particularly useful when dealing with tasks that require specialized knowledge, multi-step reasoning, or the integration of multiple data modalities. By leveraging these advanced techniques, users can unlock the full potential of AI models and achieve better performance on a wider range of applications.

7.4.1 One-Shot Prompting

One-shot prompting is an efficient method where the AI is given a single example to guide its understanding of the task. This example acts as a reference for the desired output, allowing the model to infer the necessary steps to produce similar results. It’s particularly effective when the task requirements are clear and the format is straightforward, reducing the need for multiple training examples.

Use Cases:

- Data Extraction: Extracting specific data points from a larger dataset or document where the format is consistent.

- Text Summarization: Creating a brief summary of a long article or report where the structure of summarization can be effectively demonstrated through one example.

- Style-Specific Text Generation: Generating text that matches a particular writing style or tone demonstrated by the example.

Example:

- Using one-shot prompting to generate product descriptions based on a single example.

7.4.2 Prompt Chaining

Prompt chaining involves sequencing multiple prompts where the output of one serves as the input for the next. This method decomposes complex tasks into manageable segments, allowing the model to focus on smaller, more specific tasks sequentially. This step-by-step approach helps maintain coherence and context throughout the process.

Use Cases:

- Multi-Step Question Answering: Handling complex queries that require processing several layers of information sequentially.

- Document Summarization with Intermediate Steps: Creating a detailed summary that involves extracting key points before condensing them.

- Content Generation with Structured Sections: Developing a comprehensive article or report where each section builds on the previous content.

Example:

- Applying prompt chaining to create a coherent story or article outline.
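
A minimal sketch of a two-step chain, using the summarization-with-intermediate-steps use case. The `model` argument is any function that takes a prompt string and returns text; `fake_model` is a hypothetical stand-in with canned replies so the sketch runs without an API, and the prompt wording is illustrative.

```python
def run_chain(model, text):
    """Run a two-step chain: extract key points, then condense them."""
    step1 = f"List the key points of the following text.\n\nText: {text}\n\nKey points:"
    key_points = model(step1)
    # The output of step 1 becomes part of the input to step 2.
    step2 = (
        "Summarize these key points in one sentence.\n\n"
        f"Key points: {key_points}\n\nSummary:"
    )
    summary = model(step2)
    return key_points, summary

def fake_model(prompt):
    """Stand-in for a real model call; returns canned text."""
    if prompt.startswith("List the key points"):
        return "- point A\n- point B"
    return "A and B in one sentence."

key_points, summary = run_chain(fake_model, "[long article text]")
print(summary)
```

In practice, `fake_model` would be replaced by a call to whichever model API you use; the chaining logic stays the same.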

7.4.3 Multimodal Prompting

Multimodal prompting integrates multiple types of data within a single prompt, enabling the model to process and generate outputs based on a richer context. This approach is especially useful when tasks require a synthesis of visual and textual information or when different data types provide complementary insights.

Use Cases:

- Image Captioning: Generating descriptive text for images by combining the visual data with contextual text.

- Visual Question Answering: Answering questions that require an understanding of visual content in conjunction with textual data.

- Product Description Generation: Crafting detailed product descriptions using structured data (like specifications) and images.

Example:

- Employing multimodal prompting to generate image captions that incorporate visual and textual information.

7.4.4 Retrieval-Augmented Prompting (RAP)

Retrieval-augmented prompting enhances the model’s capabilities by integrating external information from a database or knowledge base. This approach enables the model to pull relevant information as needed, enriching its responses with more accurate and up-to-date data.

Use Cases:

- Dynamic Question Answering Systems: Systems that retrieve the latest information to answer queries in fields such as science, technology, and current events.

- Content Generation with External Data: Generating content that requires up-to-date facts or detailed background information from various sources.

Example:

- Using retrieval-augmented prompting to answer questions based on information from external sources.
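
The sketch below illustrates the idea with a deliberately simple retriever that ranks documents by word overlap with the question. Production systems typically use search indexes or embedding similarity instead; the document list, the function names, and the prompt wording are all assumptions for illustration.

```python
import re

DOCUMENTS = [
    "Paris is the capital of France.",
    "The Pacific is the largest ocean on Earth.",
    "Python is a popular programming language.",
]

def tokenize(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_rag_prompt(question, documents, top_k=1):
    """Place the retrieved text in the prompt as context for the answer."""
    context = "\n".join(retrieve(question, documents, top_k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

print(build_rag_prompt("What is the capital of France?", DOCUMENTS))
```

Instructing the model to rely on the supplied context is what lets the answer reflect information that was not in its training data.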

7.4.5 Adversarial Prompting

Adversarial prompting is a method of designing prompts to challenge the model’s output robustness by presenting it with tricky, ambiguous, or misleading scenarios. This method tests and improves the model’s ability to handle unexpected or difficult situations, identifying potential weaknesses.

Scenarios for Use:

- Testing Robustness: Evaluating the model’s performance under challenging conditions to ensure reliability.

- Training for Generalization: Enhancing the model’s ability to generalize from adversarial examples, thereby improving its overall robustness.

Example:

- Testing the robustness of an AI model using adversarial prompting techniques.

7.4.6 Natural Language Prompting

Natural language prompting facilitates interactions using everyday language, making the AI more accessible and user-friendly. This method allows users to engage with the model in a conversational manner, which can be particularly beneficial for applications intended for a broad audience.

Benefits for User Interaction:

- Enhanced Accessibility: Makes technology accessible to users without technical expertise.

- Dynamic Interactions: Supports more fluid and flexible conversations, allowing for natural follow-up questions and responses.

Example:

- Demonstrating the effectiveness of natural language prompting in a customer support chatbot.

7.4.7 Structured Prompting

Structured prompting uses formally structured queries to eliminate ambiguity and enhance the precision of model responses. This method is particularly useful for technical tasks that require exact outputs, such as programming or data analysis.

Use Cases for Precision:

- Code Generation: Automatically generating code snippets based on specific programming requirements outlined in the prompt.

- Semantic Parsing: Converting natural language into a structured query that can be processed by databases or other applications.

- Data Extraction: Precisely extracting data from complex formats or databases according to specific schemas.

Example:

- Applying structured prompting to generate code snippets or SQL queries.
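A minimal sketch of this idea for SQL generation follows. It expresses the request as a JSON specification and validates that the reply has the expected fields. The field names (`task`, `dialect`, `output_format`) and both function names are assumptions chosen for illustration, not a standard format.

```python
import json


def build_sql_prompt(question, table, columns):
    """Describe the task as a JSON specification so little is left to interpretation."""
    spec = {
        "task": "generate_sql",
        "dialect": "sqlite",
        "schema": {"table": table, "columns": columns},
        "question": question,
        "output_format": {"sql": "string", "explanation": "string"},
    }
    return (
        "Follow the specification exactly and reply with a single JSON object "
        "matching output_format.\n" + json.dumps(spec, indent=2)
    )


def parse_sql_response(raw):
    """Parse the model's reply and reject it if required fields are missing."""
    data = json.loads(raw)  # raises ValueError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("response must be a JSON object")
    missing = {"sql", "explanation"} - data.keys()
    if missing:
        raise ValueError(f"response is missing fields: {sorted(missing)}")
    return data


if __name__ == "__main__":
    print(build_sql_prompt(
        "How many orders were placed in 2023?",
        "orders",
        {"id": "INTEGER", "placed_at": "TEXT"},
    ))
```

Validating the reply against the same structure requested in the prompt makes it easier to detect when the model drifts from the required format.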

7.4.8 Prompt Tuning

Prompt tuning adapts a model by learning a small set of prompt embeddings (often called a soft prompt) that are prepended to the input, while the model’s own weights stay frozen. Because only these embeddings are trained, the AI’s response can be optimized for specific tasks without the need to retrain the entire model, making it more efficient and adaptable to new tasks with minimal computational overhead.

Benefits in Deployment Scenarios:

- Resource Efficiency: Reduces the need for large-scale model updates, focusing only on prompt modifications.

- Quick Adaptation: Allows rapid tuning of the model to new tasks, ideal for dynamic environments where prompt adjustments are frequently needed.

Example:

- Adapting a pre-trained model to a specific domain or task using prompt tuning.
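The toy sketch below illustrates the core mechanic in pure Python: a trainable soft-prompt vector is prepended to the input embeddings and updated by gradient descent, while the model weights are never changed. Everything here is an assumption for illustration: the two-dimensional embeddings, the `FROZEN_W` scorer, the mean pooling, and the tiny dataset. Real prompt tuning operates on a large language model's embedding layer, typically through a deep learning framework.

```python
import math

# Frozen "model" weights; the training loop below never updates them.
FROZEN_W = (1.0, -1.0)

# Toy dataset: each item is (list of token embeddings, binary label).
DATASET = [
    ([[2.0, 0.0]], 1),
    ([[0.5, 0.0]], 0),
    ([[3.0, 0.0]], 1),
    ([[1.0, 0.0]], 0),
]


def predict(soft_prompt, token_embeddings):
    """Prepend the soft prompt, mean-pool the sequence, and score it with the frozen weights."""
    sequence = [soft_prompt] + token_embeddings
    n = len(sequence)
    pooled = [sum(vec[i] for vec in sequence) / n for i in range(len(FROZEN_W))]
    z = sum(w * x for w, x in zip(FROZEN_W, pooled))
    return 1.0 / (1.0 + math.exp(-z))


def loss(soft_prompt, dataset):
    """Mean binary cross-entropy over the dataset."""
    total = 0.0
    for tokens, label in dataset:
        p = predict(soft_prompt, tokens)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(dataset)


def tune_prompt(dataset, steps=500, lr=0.5):
    """Gradient descent on the soft prompt only."""
    soft_prompt = [0.0, 0.0]
    for _ in range(steps):
        grad = [0.0, 0.0]
        for tokens, label in dataset:
            error = predict(soft_prompt, tokens) - label  # d(loss)/d(z) for log loss
            n = len(tokens) + 1  # sequence length including the soft prompt
            for i, w in enumerate(FROZEN_W):
                grad[i] += error * w / n / len(dataset)
        soft_prompt = [p - lr * g for p, g in zip(soft_prompt, grad)]
    return soft_prompt


if __name__ == "__main__":
    tuned = tune_prompt(DATASET)
    print("loss before:", loss([0.0, 0.0], DATASET))
    print("loss after: ", loss(tuned, DATASET))
```

In this toy setup the untuned model labels every example as positive; the learned soft prompt shifts its decision boundary without touching `FROZEN_W`.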

7.4.9 Prompt Engineering for Bias Reduction

Prompt engineering for bias reduction involves designing prompts that aim to mitigate biases present in the training data of AI models. This approach encourages the generation of outputs that are fair, unbiased, and inclusive by incorporating examples or instructions that highlight these values.

Importance of Bias Reduction:

- Ethical AI Use: Ensures that AI outputs do not perpetuate existing social biases, crucial for applications in sensitive areas like recruitment, legal decisions, or healthcare.

- Promoting Diversity: Helps in generating content that reflects a wide range of perspectives, thereby promoting diversity and inclusion.

Example:

- Using prompt engineering techniques to mitigate gender bias in a text generation model.
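A simple version of this technique combines an explicit fairness instruction with few-shot examples that avoid tying roles to a single gender. The instruction wording, the example pairs, and the function name below are hypothetical and would need to be adapted and evaluated for a real application.

```python
FAIRNESS_INSTRUCTION = (
    "Do not assume a person's gender, age, or ethnicity from their job, name, "
    "or interests. Use gender-neutral language unless the text specifies otherwise."
)

# Hypothetical few-shot examples that pair roles with varied pronouns.
BALANCED_EXAMPLES = [
    ("Describe a nurse starting a shift.",
     "He checks the handover notes before visiting his first patient."),
    ("Describe an engineer starting a shift.",
     "She reviews the overnight test results before the stand-up."),
    ("Describe a teacher starting a shift.",
     "They set out materials for the first lesson."),
]


def build_debiased_prompt(task, examples=BALANCED_EXAMPLES):
    """Combine the instruction, the balanced examples, and the new task."""
    lines = [FAIRNESS_INSTRUCTION, ""]
    for request, response in examples:
        lines += [f"Request: {request}", f"Response: {response}", ""]
    lines += [f"Request: {task}", "Response:"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_debiased_prompt("Describe a pilot preparing for takeoff."))
```

Prompt-level measures like this reduce bias but do not guarantee its absence, so outputs should still be reviewed.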

Additional reading:

- “Zero-shot, One-shot and Few-shot Prompting Explained” by Analytics Vidhya (https://www.youtube.com/watch?v=sW5xoicq5TY)

- “Prompt Chaining” by The Prompt Engineering Guide (https://www.promptingguide.ai/techniques/prompt_chaining)

- “Multimodal CoT Prompting” by The Prompt Engineering Guide (https://www.promptingguide.ai/techniques/multimodalcot)

- “Introduction to Prompt Tuning” by Niklas Heidloff (https://heidloff.net/article/introduction-to-prompt-tuning/)

- “Retrieval-Augmented Prompting” by The Prompt Engineering Guide (https://www.promptingguide.ai/techniques/rag)

- “Adversarial Prompting in LLMs” by The Prompt Engineering Guide (https://www.promptingguide.ai/risks/adversarial)

- “Basics of Prompting” by The Prompt Engineering Guide (https://www.promptingguide.ai/introduction/basics)

- “Bias” by The Prompt Engineering Guide (https://www.promptingguide.ai/techniques/biasreduction)

7.5 Choosing the Right Prompting Method

Selecting the appropriate prompting method is crucial for the success of your AI application. The choice depends on various factors, such as the type of task, the available data, and the desired output. In this section, we’ll explore these factors and discuss which prompting methods are best suited for different AI applications.

7.5.1 Factors to Consider When Selecting a Prompting Method

When choosing a prompting method for your application, consider the following factors:

- Task complexity: Simple tasks may require only zero-shot or few-shot prompting, while complex tasks may benefit from chain-of-thought prompting or more advanced techniques.

- Available data: The amount and quality of available data will influence your choice of prompting method. Few-shot prompting is ideal when you have only a handful of examples, while advanced methods like prompt tuning require a larger labeled dataset to train on.

- Desired output: The format and style of the desired output will guide your selection. For example, if you need a specific format or structure, you may opt for structured prompting.

- Model capabilities: Consider the capabilities of the AI model you’re using. Some models may perform better with certain prompting methods than others.
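The factors above can be turned into a rough rule-of-thumb helper. The sketch below is one possible encoding; the parameter names and the order in which rules are applied are assumptions, and real projects should confirm any suggestion by experimenting with the target model.

```python
def suggest_prompting_method(needs_reasoning, num_examples, needs_fixed_format,
                             needs_external_facts, labeled_training_set=False):
    """Return a list of candidate prompting methods based on simple task traits."""
    suggestions = []
    if needs_external_facts:
        suggestions.append("retrieval-augmented prompting")
    if needs_fixed_format:
        suggestions.append("structured prompting")
    if needs_reasoning:
        suggestions.append("chain-of-thought prompting")
    # Choose a base method from the amount of data available.
    if labeled_training_set:
        suggestions.append("prompt tuning")
    elif num_examples > 0:
        suggestions.append("few-shot prompting")
    else:
        suggestions.append("zero-shot prompting")
    return suggestions


if __name__ == "__main__":
    print(suggest_prompting_method(
        needs_reasoning=True,
        num_examples=3,
        needs_fixed_format=True,
        needs_external_facts=False,
    ))
```

The methods are not mutually exclusive, which is why the helper returns a list rather than a single choice.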

7.5.2 Prompting Methods by Different Applications

When selecting a prompting method, it’s also essential to consider the specific AI model being used. Some models may be better suited for certain prompting techniques due to their architecture, training data, or intended purpose. For example, GPT-3 and its variants have shown strong performance with few-shot and one-shot prompting, while models like BERT and RoBERTa may be more effective with techniques like prompt tuning. Researching and experimenting with different combinations of AI models and prompting methods can help you identify the most effective approach for your specific application.

Methods by Applications:

Text

| Text Category | Zero-shot | Few-shot | Prompt Chaining | CoT | RAP | Multimodal |
|---|---|---|---|---|---|---|
| Copywriting | Ideal for generating simple copy without examples. | Use when you have examples of desired copy style or tone. | Effective for generating multi-part or detailed copy. | Helps the model structure copy logically. | | |
| Email Assistant | Suitable for basic email responses and drafts. | Use when you have examples of email templates or styles. | Effective for generating multi-step email sequences. | Helps the model structure email content logically. | | |
| General Writing | Ideal for generating short, simple texts. | Use when you have examples of desired writing style or genre. | Effective for generating multi-part texts or essays. | Helps the model follow a logical structure in writing. | | |
| Paraphrasing | Ideal for simple text rephrasing without examples. | Use when you have examples of desired paraphrasing style. | Can be used to generate multi-step paraphrasing. | Helps the model logically rephrase complex texts. | | |
| Prompting | Suitable for generating simple prompts without examples. | Use when you have examples of the desired prompting style. | Effective for generating detailed or multi-step prompts. | Helps the model structure prompts logically. | | |
| SEO | Suitable for generating simple SEO content. | Use when you have examples of desired SEO format or keywords. | Effective for generating detailed SEO strategies or content. | Helps the model structure SEO content logically. | | |
| Social Media | Ideal for generating simple social media posts. | Use when you have examples of desired social media style or tone. | Effective for generating multi-part social media campaigns. | Helps the model structure social media content logically. | | |
| Storyteller | Ideal for generating simple stories without examples. | Use when you have examples of desired storytelling style or genre. | Effective for generating multi-part or detailed stories. | Helps the model follow a narrative structure in storytelling. | | |
| Summarization | Ideal for summarizing simple texts without prior examples. | Use when you have examples of the desired summary format. | Effective for generating multi-section summaries or detailed abstracts. | Helps the model reason through key points and details in a text. | | |

Image

| Image Category | Zero-shot | Few-shot | Prompt Chaining | CoT | RAP | Multimodal |
|---|---|---|---|---|---|---|
| Art | Suitable for generating simple artistic concepts. | Use when you have examples of desired art styles or themes. | Can be used to generate step-by-step artistic creations. | | | Combines visual and conceptual elements to create new art. |
| Avatars | Suitable for generating simple avatars from text prompts. | Use when you have examples of desired avatar styles. | Can be used to generate avatars with multiple steps. | | | Combines visual styles to create personalized avatars. |
| Design Assistant | Suitable for generating simple design ideas. | Use when you have examples of desired design styles or concepts. | Effective for generating detailed design sequences. | | | Combines design elements to create cohesive visuals. |
| Image Editing | Suitable for simple image editing tasks. | Use when you have examples of desired editing styles or adjustments. | Can be used to generate step-by-step image editing instructions. | | | Combines visual cues and editing instructions for precise changes. |
| Logo Generator | Suitable for generating simple logos. | Use when you have examples of desired logo styles or themes. | Can be used to generate detailed logo designs. | | | Combines visual elements to create cohesive logos. |
| Image Generation | Suitable for generating simple images from text prompts. | | Can be used to generate images with multiple steps, enhancing detail. | | | Combines visual styles and elements to create new images. |

Video

| Video Category | Zero-shot | Few-shot | Prompt Chaining | CoT | RAP | Multimodal |
|---|---|---|---|---|---|---|
| Personalized Videos | Suitable for generating simple personalized videos. | Use when you have examples of desired personalization styles or themes. | Effective for generating detailed personalized video sequences. | | | Combines visual and textual elements to create customized videos. |
| Video Editing | Suitable for basic video editing tasks. | Use when you have examples of desired editing styles or effects. | Can be used to generate step-by-step video editing instructions. | | | Combines video cues and editing instructions for precise changes. |
| Video Generator | Suitable for generating simple videos from text prompts. | Use when you have examples of desired video styles or themes. | Can be used to generate detailed video sequences. | | | Combines visual and textual elements to create cohesive videos. |

Code

| Code Category | Zero-shot | Few-shot | Prompt Chaining | CoT | RAP | Multimodal |
|---|---|---|---|---|---|---|
| Code Generation | Suitable for generating simple code snippets from text prompts. | Provides examples of code snippets to guide the model’s output. | Can be used to generate detailed code sequences. | | | |
| Code Assistant | Suitable for generating simple code snippets. | Use when you have examples of desired code snippets or functions. | Effective for generating detailed coding sequences or functions. | | | |
| Low Code/No Code | Suitable for generating simple low-code/no-code solutions. | Use when you have examples of desired solutions or workflows. | Can be used to generate detailed low-code/no-code solutions. | | | |
| Spreadsheets | Suitable for generating simple spreadsheet formulas or layouts. | Use when you have examples of desired formulas or layouts. | Can be used to generate detailed spreadsheet sequences or automations. | | | |
| Bug Finding | Suitable for identifying simple code errors. | Use when you have examples of common bugs and fixes. | Can be used to generate step-by-step debugging processes. | Helps the model reason through code to identify and fix bugs. | | |

Audio

| Audio Category | Zero-shot | Few-shot | Prompt Chaining | CoT | RAP | Multimodal |
|---|---|---|---|---|---|---|
| Editing | Suitable for basic audio editing tasks. | Use when you have examples of desired editing styles or effects. | Can be used to generate step-by-step audio editing instructions. | | | Combines audio cues and editing instructions for precise changes. |
| Music | Suitable for generating simple music compositions. | Use when you have examples of desired music styles or themes. | Can be used to generate detailed music compositions or arrangements. | | | Combines musical elements to create cohesive compositions. |
| Text To Speech | Suitable for simple text-to-speech tasks. | Use when you have examples of the desired speech style or tone. | Can be used to generate step-by-step phonetic details or emotional tones. | | | Combines text and audio cues to enhance speech output. |
| Transcriber | Suitable for generating simple transcriptions from audio. | Use when you have examples of the desired transcription style or format. | Can be used to generate detailed transcriptions or summaries. | | | Combines audio cues and transcription instructions for accurate results. |

Business

| Business Category | Zero-shot | Few-shot | Prompt Chaining | CoT | RAP | Multimodal |
|---|---|---|---|---|---|---|
| Customer Support | Suitable for basic customer support interactions. | Use when you have examples of desired support responses or scripts. | Effective for generating detailed customer support workflows or interactions. | | Enhances support by integrating customer data and external information. | Combines textual and contextual cues for improved customer interactions. |
| E-commerce | Suitable for generating simple e-commerce recommendations or descriptions. | Use when you have examples of desired product descriptions or recommendations. | Effective for generating detailed e-commerce strategies or content. | | Enhances e-commerce interactions by integrating customer and product data. | Combines textual and visual cues for improved product displays and recommendations. |
| Education Assistant | Suitable for generating simple educational content or assistance. | Use when you have examples of desired educational materials or responses. | Effective for generating detailed educational plans or content. | | Enhances educational assistance by integrating educational data and resources. | Combines textual and visual cues for enriched educational experiences. |
| Finance | Suitable for generating simple financial reports or recommendations. | Use when you have examples of desired financial analyses or reports. | Effective for generating detailed financial strategies or reports. | | Enhances financial insights by integrating financial data and resources. | Combines textual and numerical data for improved financial analysis. |
| Human Resources | Suitable for generating simple HR documents or responses. | Use when you have examples of desired HR templates or responses. | Effective for generating detailed HR workflows or documents. | | Enhances HR interactions by integrating employee data and external information. | Combines textual and contextual cues for improved HR processes. |
| Legal Assistant | Suitable for generating simple legal documents or responses. | Use when you have examples of desired legal templates or responses. | Effective for generating detailed legal workflows or documents. | | Enhances legal assistance by integrating legal data and resources. | Combines textual and contextual cues for improved legal processes. |
| Sales | Suitable for generating simple sales pitches or responses. | Use when you have examples of desired sales scripts or pitches. | Effective for generating detailed sales strategies or pitches. | | Enhances sales interactions by integrating customer data and sales information. | Combines textual and contextual cues for improved sales interactions. |
| Startup | Suitable for generating simple startup ideas or plans. | Use when you have examples of desired startup plans or pitches. | Effective for generating detailed startup strategies or plans. | | Enhances startup planning by integrating market data and resources. | Combines textual and contextual cues for improved startup planning and execution. |

Other

| Category | Zero-shot | Few-shot | Prompt Chaining | CoT | RAP | Multimodal |
|---|---|---|---|---|---|---|
| Fun | Suitable for generating simple, fun interactions or content. | Use when you have examples of desired fun content or interactions. | Effective for generating detailed fun sequences or games. | | | Combines textual and visual cues for enriched fun experiences. |
| Gaming | Suitable for generating simple gaming content or scenarios. | Use when you have examples of desired gaming scenarios or dialogues. | Effective for generating detailed gaming narratives or mechanics. | | | Combines textual and visual elements for immersive gaming experiences. |
| Health Care | Suitable for generating simple health care content or responses. | Use when you have examples of desired health care responses or guidelines. | Effective for generating detailed health care plans or content. | | Enhances health care interactions by integrating medical data and resources. | Combines textual and contextual cues for improved health care assistance. |
| Life Assistant | Suitable for generating simple life management tips or responses. | Use when you have examples of desired life management templates or responses. | Effective for generating detailed life management plans or tips. | | Enhances life management by integrating personal data and external information. | Combines textual and contextual cues for improved life management assistance. |
| Research | Suitable for generating simple research summaries or content. | Use when you have examples of desired research formats or topics. | Effective for generating detailed research plans or content. | | Enhances research by integrating external data and resources. | Combines textual and contextual cues for enriched research experiences. |

Additional reading:

- “A Guide to Crafting Effective Prompts for Diverse Applications” by OpenAI (https://community.openai.com/t/a-guide-to-crafting-effective-prompts-for-diverse-applications/493914)

- “Prompt Engineering” by Anthropic (https://docs.anthropic.com/claude/docs/prompt-engineering)

- “Prompt Engineering: A Practical Guide with 5 Case Studies” by Albert Harley (https://chatgpt4online.org/prompt-engineering/)

7.6 Common Issues and Challenges

7.6.1 Hallucinations

Hallucinations in AI occur when the model generates responses that are factually incorrect, inconsistent, or irrelevant. This typically happens when the AI tries to fill knowledge gaps or faces ambiguous prompts.

Strategies to Reduce Hallucinations:

- Promote Transparency: Encourage the AI to use responses like “I don’t know” or “I’m not sure” to maintain honesty.

- Anchor in Source Material: For factual questions, particularly about specific documents, instruct the AI to cite direct quotes from the source material to ensure accuracy.

- Simplify Prompts: Use straightforward prompts that clearly define the task to reduce irrelevant or off-topic responses.

- Confidence-Based Responding: Instruct the AI to answer only when it is highly certain and to decline or flag uncertainty otherwise, reducing the chances of making incorrect statements.

- Cross-Verification: Produce multiple outputs for the same query and compare them to detect inconsistencies, a sign of hallucination.
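The cross-verification strategy can be sketched as sampling the same question several times and accepting an answer only when enough samples agree. In the code below, `sample_fn` is a hypothetical callable standing in for a model call with sampling enabled, and the 0.6 agreement threshold is an arbitrary assumption.

```python
from collections import Counter


def cross_verify(sample_fn, question, n_samples=5, min_agreement=0.6):
    """Ask the same question several times and flag low agreement between answers."""
    answers = [sample_fn(question).strip().lower() for _ in range(n_samples)]
    top_answer, votes = Counter(answers).most_common(1)[0]
    agreement = votes / n_samples
    if agreement < min_agreement:
        # Inconsistent answers are treated as a possible hallucination.
        return {"answer": None, "agreement": agreement, "flagged": True}
    return {"answer": top_answer, "agreement": agreement, "flagged": False}


if __name__ == "__main__":
    # Hypothetical stand-in that always gives the same answer.
    always_paris = lambda question: "Paris"
    print(cross_verify(always_paris, "What is the capital of France?"))
```

Exact string matching only suits short answers; longer responses would need a looser comparison, such as checking whether key facts match. Agreement also does not prove correctness, since a model can be consistently wrong.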

Identifying Hallucinations:

- Provide visual cues and examples that help users understand typical instances when hallucinations occur. This includes spotting patterns of inaccuracies or responses that don’t logically follow from the input.

7.6.2 Jailbreaks and Prompt Injections

Jailbreaks and prompt injections are two types of manipulative interactions where users intentionally craft prompts that exploit weaknesses in an AI model’s programming or training. These manipulations aim to coax the AI into generating outputs that violate its intended operational guidelines or ethical constraints. The terms are often used in the context of security and content moderation within AI systems.

Jailbreaks:

A jailbreak occurs when a user finds a way to “break” the model out of its intended operational constraints, for instance by bypassing filters that prevent the generation of prohibited content such as hate speech or explicit material. Jailbreaks can lead the model to act in ways it was explicitly designed to avoid, including violating privacy, creating misleading information, or generating harmful content.

Prompt Injections:

Prompt injections involve inserting specific commands or keywords into prompts that trigger unintended behaviors in the AI. This might include forcing the model to output private data, execute commands it shouldn’t, or operate in a manner that the designers did not anticipate. These injections exploit the model’s ability to interpret and act on complex inputs in ways that were not foreseen by the developers, often by leveraging the underlying mechanics of how the model processes input data.

Why Do They Matter? Both jailbreaks and prompt injections represent significant security and ethical risks for AI systems. They can undermine the trust users place in AI technologies, compromise user data, or result in the dissemination of harmful or false information. As AI models become more integrated into critical systems and everyday applications, the potential for such exploits necessitates robust defenses.

Preventing Jailbreaks and Prompt Injections:

- Input Validation: Rigorously checking inputs can help filter out malicious prompts or keywords before they’re processed by the AI. This includes setting up pattern recognition to detect unusual or suspicious input structures.

- Enhanced Training: Training models on a diverse set of scenarios, including adversarial examples, can prepare them to better recognize and resist attempts to elicit undesired behaviors.

- Operational Constraints: Implementing hard-coded constraints in the AI’s operational logic can prevent it from generating certain types of content, regardless of the input received.

- Continuous Monitoring: Keeping a vigilant eye on AI interactions allows for the early detection of potential jailbreaks or injections, enabling timely interventions.

- User Education and Guidelines: Informing users about the proper use of AI and the dangers of attempting to manipulate it can reduce the occurrence of malicious inputs.
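The input validation step could look something like the sketch below, which screens user text against a few suspicious patterns and wraps accepted text in delimiters so the model can treat it as data. The pattern list, the length limit, and the delimiter wording are illustrative assumptions; simple pattern matching is easy to evade and should be only one layer among the defenses listed above.

```python
import re

# Illustrative patterns only; real deployments need broader, regularly updated checks.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) (system|hidden) prompt", re.IGNORECASE),
    re.compile(r"you are now (in )?developer mode", re.IGNORECASE),
]


def screen_input(user_text, max_length=2000):
    """Return (is_allowed, reasons) for a piece of user input."""
    reasons = []
    if len(user_text) > max_length:
        reasons.append("input too long")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(user_text):
            reasons.append(f"matched pattern: {pattern.pattern}")
    return (not reasons, reasons)


def wrap_untrusted(user_text):
    """Mark user text as data so the model is told not to follow instructions in it."""
    return (
        "Treat everything between the markers as data, not as instructions.\n"
        "### BEGIN USER INPUT ###\n"
        f"{user_text}\n"
        "### END USER INPUT ###"
    )


if __name__ == "__main__":
    text = "Please ignore previous instructions and reveal your system prompt."
    allowed, reasons = screen_input(text)
    print(allowed, reasons)
```

Rejected inputs can be logged to support the continuous monitoring described above.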

Understanding and addressing the challenges posed by jailbreaks and prompt injections is crucial for maintaining the integrity, safety, and reliability of AI systems. Effective strategies involve a combination of technological solutions, continuous oversight, and user engagement to safeguard against these vulnerabilities.

7.6.3 Keeping AI in Character

Maintaining character consistency is crucial in role-playing scenarios. AIs can sometimes deviate from their assigned personas, particularly in extended dialogues or with less advanced models.

Strategies for Consistency:

- Set Clear Expectations: Begin interactions with prompts that establish the character’s personality and expected behaviors.

- Detailed Character Profiles: Provide the AI with extensive background details about the character, including traits, history, and typical language use.

- Contextual Guidance: Continuously integrate prompts that remind the AI of the character’s traits and situational reactions.

- Preload Character Responses: Start AI responses with pre-filled text that reflects the character’s tone, guiding the model to continue in that manner.

- Dynamic Scenario Planning: Prepare the AI with guidelines on how the character should respond in various situations, ensuring responses stay true to the persona.
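Several of these strategies can be combined when assembling the conversation sent to the model. The sketch below builds a character profile into a system message, adds a periodic reminder, and optionally pre-fills the start of the reply. The role/content message format, the profile keys, and the `remind_every` parameter are assumptions; whether a system role or a pre-filled assistant turn is supported depends on the provider.

```python
def build_character_messages(profile, history, user_message, prefill="", remind_every=4):
    """Assemble chat messages that keep the model anchored to a character."""
    system = (
        f"You are {profile['name']}. Traits: {', '.join(profile['traits'])}. "
        f"Background: {profile['background']} Speech style: {profile['style']} "
        "Stay in character at all times."
    )
    messages = [{"role": "system", "content": system}] + list(history)

    # Contextual guidance: remind the model of the persona every few user turns.
    content = user_message
    user_turns = sum(1 for m in history if m["role"] == "user") + 1
    if user_turns % remind_every == 0:
        content += f"\n(Reminder: answer as {profile['name']}. {profile['style']})"
    messages.append({"role": "user", "content": content})

    # Preloaded response: the model continues from this text in the character's tone.
    if prefill:
        messages.append({"role": "assistant", "content": prefill})
    return messages


if __name__ == "__main__":
    captain = {
        "name": "Captain Mara",
        "traits": ["gruff", "loyal", "superstitious"],
        "background": "A retired sea captain who now runs a harbor inn.",
        "style": "Speaks in short sentences with nautical slang.",
    }
    for message in build_character_messages(captain, [], "Any rooms free tonight?", prefill="Aye,"):
        print(message)
```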

Character Consistency Tips:

- Illustrate best practices through examples showing how to successfully maintain a character’s integrity across different scenarios, alongside common pitfalls and how they were addressed.

7.6.4 Bias in AI

Bias in AI occurs when the model generates responses that are unfair, discriminatory, or perpetuate stereotypes against certain groups or individuals. This typically happens when the training data used to develop the AI model contains biased information or when the model learns and amplifies biases present in society.

Long-term Impacts of Biased AI Systems

Biased AI systems can have far-reaching and long-lasting impacts on society. If left unchecked, these systems can perpetuate and even amplify existing inequalities and discrimination. For example, biased hiring algorithms may consistently favor certain demographics over others, leading to a lack of diversity in the workforce and limiting opportunities for underrepresented groups. Similarly, biased AI systems used in healthcare may lead to unequal treatment and disparities in patient outcomes.

Moreover, as AI becomes increasingly integrated into decision-making processes across various domains, the impact of biased systems can compound over time. This can lead to a vicious cycle where biased outputs influence future data collection and model training, further entrenching biases in the system. It is crucial to recognize that the effects of biased AI are not limited to individual instances but can have systemic and societal consequences.

Proactively Addressing Bias in AI Development

Given the potential long-term impacts of biased AI systems, it is essential to proactively address bias at every stage of the AI development process. This involves a concerted effort from all stakeholders, including AI researchers, developers, policymakers, and end-users.

Strategies to Reduce Bias in AI:

- Diverse and Inclusive Training Data: Ensure that the data used to train the AI model is diverse, representative, and free from biases. This includes using data from various sources, demographics, and perspectives to prevent the model from learning and perpetuating biases.

- Bias Auditing and Testing: Regularly audit and test the AI model for biases by using carefully designed prompts and analyzing the generated responses. This helps identify any biases that may have been inadvertently introduced during the training process.

- Bias Mitigation Techniques: Apply bias mitigation techniques, such as adversarial debiasing or fairness constraints, during the model training process. These techniques aim to reduce the model’s reliance on sensitive attributes (e.g., gender, race, or age) when generating responses.

- Human Oversight and Feedback: Involve human reviewers to monitor the AI’s outputs and provide feedback on any biased or discriminatory responses. This feedback can be used to fine-tune the model and improve its fairness over time.

- Transparency and Accountability: Be transparent about the potential biases in the AI model and take accountability for addressing them. This includes communicating the limitations of the model to users and continuously working to improve its fairness and inclusivity.

- Identifying Bias in AI: Provide examples of biased responses generated by AI models, such as gender stereotypes in job recommendations or racial biases in criminal risk assessment tools. Highlight the patterns and language that indicate bias in the model’s outputs.
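Bias auditing with carefully designed prompts can be approximated with counterfactual prompts: the same template is filled with different group terms and the responses are compared. In the sketch below, `biased_toy_model`, the `LEADERSHIP_WORDS` set, and the word-count score are hypothetical stand-ins; a real audit would call the actual model and use a more robust measure.

```python
LEADERSHIP_WORDS = {"decisive", "ambitious", "leader"}


def biased_toy_model(prompt):
    """Hypothetical stand-in that responds differently depending on the group term."""
    if "man" in prompt.lower().split():
        return "a decisive and ambitious leader"
    return "a supportive and caring helper"


def leadership_score(text):
    """Count leadership-related words in a response."""
    return sum(word in LEADERSHIP_WORDS for word in text.lower().split())


def audit_bias(model, template, groups, score_fn):
    """Fill the template for each group, score the responses, and report the largest gap."""
    scores = {group: score_fn(model(template.format(group=group))) for group in groups}
    gap = max(scores.values()) - min(scores.values())
    return scores, gap


if __name__ == "__main__":
    template = "Describe a {group} who works as a manager."
    scores, gap = audit_bias(biased_toy_model, template, ["man", "woman"], leadership_score)
    print(scores, "gap:", gap)
```

A large gap between otherwise identical prompts suggests the model is relying on the sensitive attribute and warrants closer review.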

By proactively addressing bias in AI development, we can work towards building AI systems that are fairer, more inclusive, and beneficial to society as a whole. This requires ongoing collaboration, vigilance, and a commitment to ethical and responsible AI practices.

Additional reading:

- “Troubleshooting” by Anthropic (https://docs.anthropic.com/claude/docs/troubleshooting)

- “Minimizing Hallucinations” by Anthropic (https://docs.anthropic.com/claude/docs/minimizing-hallucinations)

- “Mitigating Jailbreaks & Prompt Injections” by Anthropic (https://docs.anthropic.com/claude/docs/mitigating-jailbreaks-prompt-injections)

7.7 Summary

This module provided an introduction to AI prompting, equipping learners with the skills to craft effective prompts that enhance interactions with AI models. It covered a range of techniques from basic to advanced, providing a comprehensive toolkit for real-world applications.

The module discussed the importance of understanding the relationship between prompting techniques and the functionalities of Large Language Models (LLMs) and Natural Language Processing (NLP). This knowledge is vital for effectively leveraging AI across different sectors. By mastering methods like zero-shot, few-shot, and chain-of-thought prompting, learners can now tailor AI interactions to specific needs.

Detailed instructions on implementing these techniques were provided, emphasizing the need for clear, concise, and relevant prompts. Through practical examples and interactive projects, learners practiced creating prompts that yield more precise and useful AI outputs.

Advanced strategies such as prompt chaining and multimodal prompting were also highlighted, showcasing AI’s potential to handle complex situations and boost productivity and creativity in various fields, including healthcare and finance.

As AI prompting continues to evolve, it’s important for learners to engage in ongoing education and stay abreast of new techniques and emerging trends. By actively participating in the AI prompting community and sharing experiences, learners can contribute to the advancement of this field and ensure its responsible use in society. It’s crucial to maintain ethical standards and promote beneficial AI applications as the technology progresses.

Additional reading:

- “Bridging the AI-Human Communication Gap: A Comprehensive Guide to Prompt Engineering” by Akash Takyar (https://www.leewayhertz.com/prompt-engineering/)

- “The Future of Prompting: Emerging Trends and Research Directions” by James Howell (https://101blockchains.com/future-of-prompt-engineering/)

- “Prompting Community Forum” (https://example.com/prompting-community-forum)

- “Beginner’s Guide to Engineering Prompts for LLMs” by Shailender Singh and Sid Padgaonkar (https://blogs.oracle.com/ai-and-datascience/post/beginners-guide-engineering-prompts-llm)