Module 4: Large Language Models (LLMs) and their Applications

Large Language Models (LLMs) represent a groundbreaking advancement in Natural Language Processing, revolutionizing how machines understand, generate, and interact with human language. This module explores the frontier of this transformative technology.

- Goal: Introduce learners to large language models (LLMs), their architecture, training techniques, and applications in natural language processing and generation.

- Objective: By the end of this module, learners will be able to explain the concept of LLMs, understand their capabilities and limitations, and recognize their potential applications across various domains.

This module begins by defining LLMs and contrasting them with traditional language models. You’ll gain insight into their key architecture, the transformer, which captures context and long-range dependencies.

We’ll cover training paradigms including pre-training on vast text corpora and fine-tuning for specific tasks. You’ll understand computational challenges in scaling LLMs and approaches like prompt engineering that facilitate adaptation.

The module dives into LLM capabilities showcased through real-world applications like text generation, question-answering, summarization, dialogue systems, and content creation across industries.

While exploring their potential, we’ll also examine LLM limitations – bias/fairness concerns, hallucinations, computational costs, interpretability challenges, and mitigation strategies.

Notable models such as GPT and BERT will be highlighted along with their impact, followed by a look at future directions – multimodal integration, knowledge enhancement, and novel applications.

By the end, you’ll grasp LLM architectures, training techniques, capabilities, limitations, and their transformative potential. You’ll appreciate ethical considerations for the responsible development of these powerful language models.

- 4.1 Introduction to Large Language Models (LLMs)

- 4.2 Architecture and Training

- 4.3 Capabilities and Applications

- 4.4 Limitations and Challenges

- 4.5 Notable LLMs and their Impact

- 4.6 Summary

4.1 Introduction to Large Language Models (LLMs)

Large Language Models (LLMs) have emerged as a groundbreaking development in the field of Natural Language Processing (NLP), revolutionizing the way machines understand, generate, and interact with human language. These powerful models have opened up new possibilities for AI-powered language applications and have become a central focus of research and industry interest.

4.1.1 Large Language Models vs. Natural Language Processing

In the previous module, we explored the fundamentals of Natural Language Processing (NLP), a broad field that encompasses various techniques and approaches for enabling machines to understand and generate human language. While Large Language Models (LLMs) are a significant advancement in the field of NLP, they represent a specific type of model architecture with distinct characteristics and capabilities.

Natural Language Processing: A Comprehensive Field

NLP is a multidisciplinary field that draws from computer science, linguistics, and cognitive science. It involves a wide range of tasks and techniques, such as:

- Text preprocessing and tokenization

- Part-of-speech tagging and named entity recognition

- Syntactic and semantic analysis

- Sentiment analysis and opinion mining

- Machine translation and text summarization

- Information retrieval and question-answering

Traditional NLP approaches often rely on a combination of rule-based systems, statistical models, and earlier neural network architectures, and they have applications across numerous domains, including customer service, content analysis, healthcare, and finance.

Large Language Models: A Powerful NLP Modeling Approach

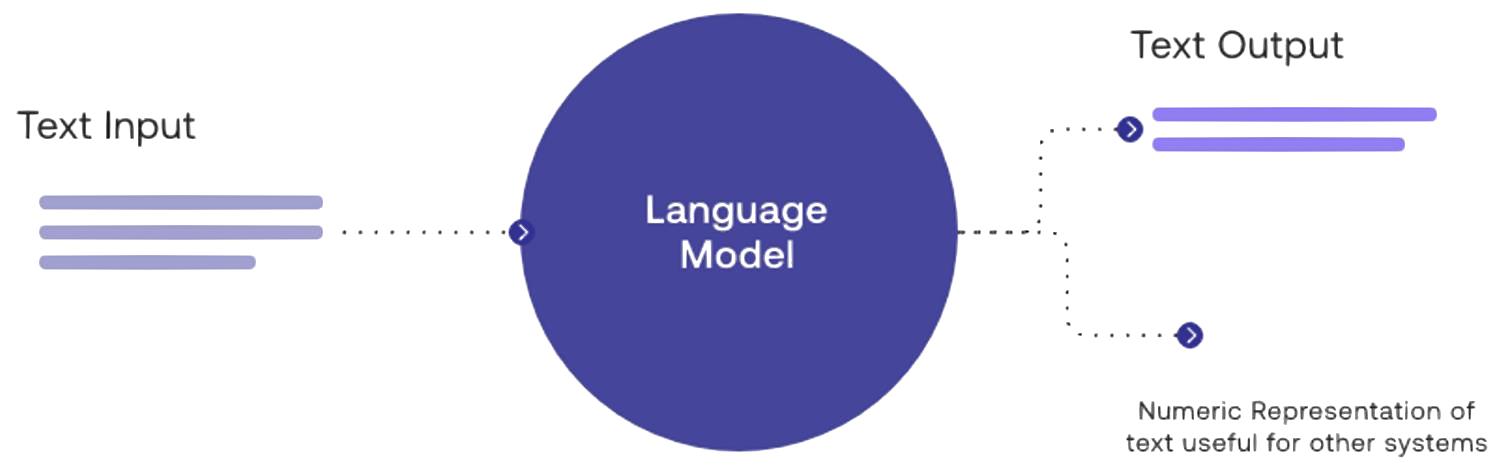

Large Language Models, on the other hand, are a specific type of neural network architecture designed for language modeling tasks. They are characterized by their massive size, with billions of parameters, and their ability to capture complex linguistic patterns through self-supervised pre-training on vast amounts of text data.

While LLMs are a significant advancement in NLP, they are not synonymous with the entire field. Instead, they represent a powerful modeling approach that can be applied to various NLP tasks, such as text generation, machine translation, question answering, and more.

LLMs have demonstrated remarkable capabilities in understanding and generating human-like language, but they still build on many of the underlying NLP techniques and concepts, such as tokenization, syntax, and semantics. Additionally, LLMs typically require task-specific fine-tuning or prompting to adapt their general language knowledge to specific applications.

The easiest way to remember:

- NLP is a field of artificial intelligence (AI) that allows computers to understand, interpret, generate, and manipulate human language.

- LLMs are a type of artificial intelligence (AI) model, a specific model architecture and training approach, that has revolutionized certain aspects of NLP, particularly language modeling and generation. Trained on vast amounts of text, LLMs can produce new combinations of words that closely mimic natural human language.

4.1.2 Definition and Key Characteristics of LLMs

Large Language Models are deep learning models with a vast number of parameters, typically ranging from hundreds of millions to hundreds of billions, that are trained on massive amounts of text data to learn the intricacies of human language. The key characteristics of LLMs include:

- Size and complexity: LLMs are significantly larger and more complex than traditional language models, with deep architectures and numerous layers that enable them to capture rich linguistic patterns and nuances.

- Self-supervised pre-training: LLMs are typically pre-trained on large, unlabeled text corpora using self-supervised learning objectives, in which the training targets come from the text itself (for example, the next word), allowing them to learn general language representations without the need for manual annotation.

- Versatility and adaptability: Once pre-trained, LLMs can be fine-tuned on smaller, task-specific datasets to adapt their knowledge to various downstream NLP tasks, such as text classification, question answering, and language generation.

- Contextual understanding: LLMs have the ability to understand and generate language in context, capturing long-range dependencies and coherence across extended passages of text.

Additional reading:

- “Language Models are Few-Shot Learners” by Brown et al. (https://arxiv.org/abs/2005.14165)

4.1.3 Comparison with traditional language models

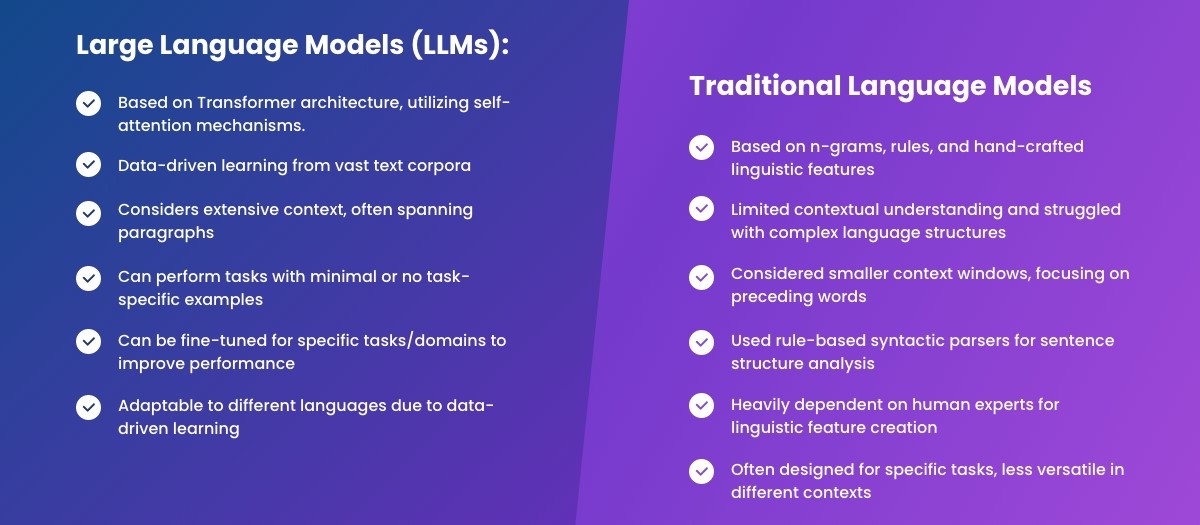

LLMs differ from traditional language models in several key aspects:

- Scale and capacity: Traditional language models, such as n-gram models or shallow neural networks, have a limited capacity to capture complex language patterns and often struggle with long-range dependencies. In contrast, LLMs, with their deep architectures and vast number of parameters, can learn rich, hierarchical representations of language that enable more sophisticated understanding and generation.

- Training paradigm: Earlier NLP models were typically trained from scratch on smaller, task-specific datasets, often using supervised learning. LLMs, by contrast, are pre-trained on massive, unlabeled text corpora using self-supervised learning objectives. This self-supervised pre-training allows LLMs to learn general language representations that can be transferred to various downstream tasks.

- Generalization and transfer learning: LLMs have shown remarkable ability to generalize their knowledge to new tasks and domains, often with minimal fine-tuning. This transfer learning capability enables LLMs to achieve state-of-the-art performance on a wide range of NLP benchmarks and real-world applications.

4.1.4 Significance of LLMs in advancing NLP capabilities

The development of LLMs has marked a significant milestone in the advancement of NLP capabilities, pushing the boundaries of what machines can achieve in language understanding and generation. Some of the key contributions of LLMs include:

- Enhanced language understanding: LLMs have greatly improved the ability of machines to understand and interpret human language, capturing subtle nuances, ambiguity, and context-dependent meanings. This has enabled more accurate and reliable performance on tasks such as sentiment analysis, named entity recognition, and natural language inference.

- Human-like language generation: LLMs have demonstrated the ability to generate human-like text that is coherent, fluent, and contextually appropriate. This has opened up new possibilities for applications such as dialogue systems, content creation, and creative writing assistance.

- Multilingual and cross-lingual capabilities: Many LLMs are trained on multilingual text corpora, enabling them to understand and generate language across multiple languages. This has facilitated the development of cross-lingual NLP applications, such as machine translation and multilingual question-answering.

- Enabling few-shot and zero-shot learning: LLMs have shown the ability to perform tasks with limited or no task-specific training data, a capability known as few-shot or zero-shot learning. This has significant implications for low-resource languages and domains, where labeled data is scarce.

Additional reading:

- “A Survey of Deep Learning for Scientific Discovery” by Raghu and Schmidt (https://arxiv.org/abs/2003.11755)

The significance of LLMs extends beyond their technical capabilities: they have also sparked important discussions around the ethical implications, societal impact, and responsible development of powerful language technologies. As LLMs continue to advance, they are expected to play a central role in shaping the future of NLP and AI more broadly.

4.2 Architecture and Training

The architecture and training techniques used in Large Language Models (LLMs) are fundamental to their ability to capture the complexities of human language and achieve state-of-the-art performance on a wide range of NLP tasks.

4.2.1 Transformer-based architectures (e.g., GPT, BERT)

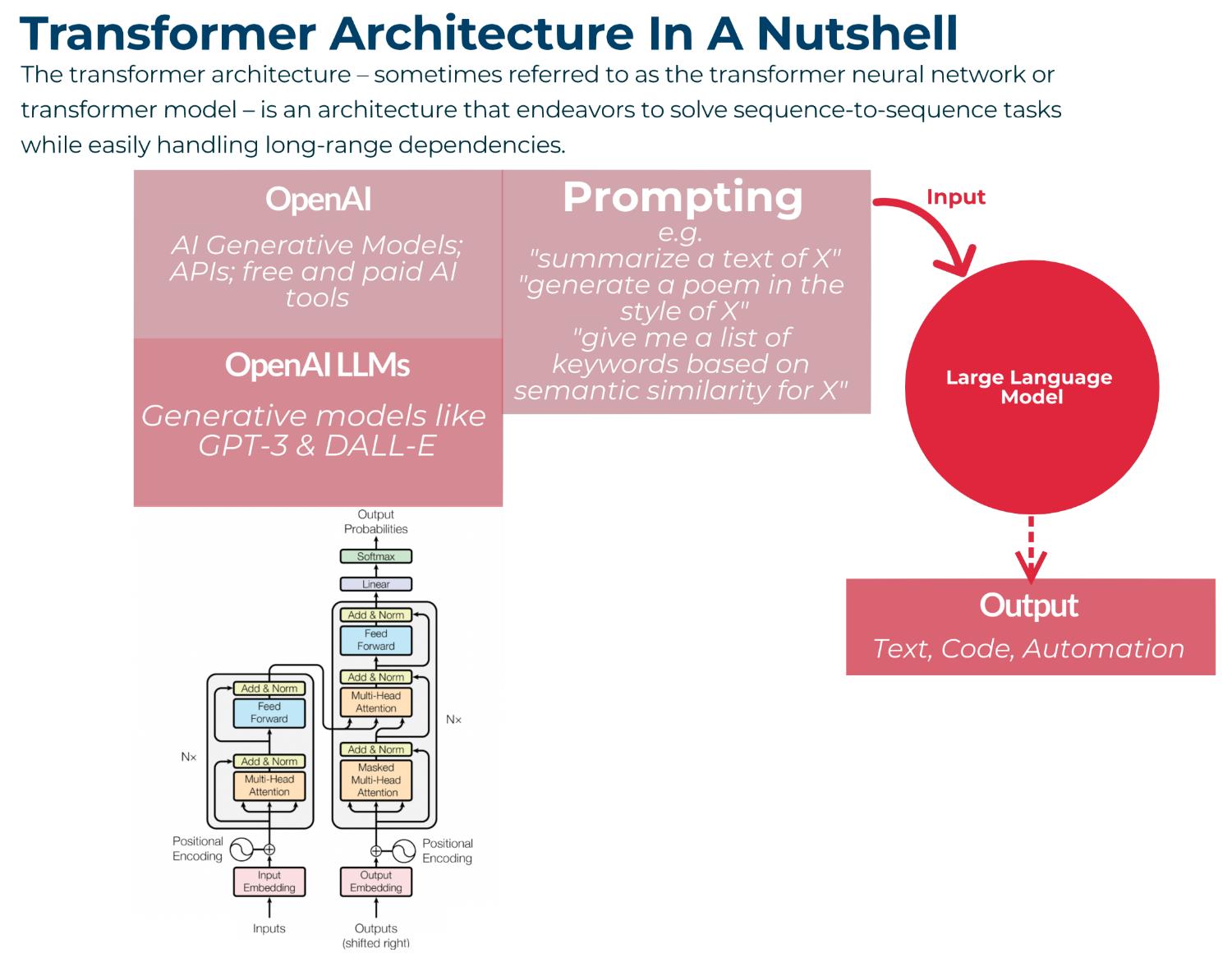

The transformer architecture, introduced in the seminal paper “Attention Is All You Need” by Vaswani et al. (2017), has become the backbone of most modern LLMs. Transformers rely on a self-attention mechanism that allows the model to weigh the importance of different words in a sequence when processing each word, enabling it to capture long-range dependencies and contextual information more effectively than previous architectures, such as recurrent neural networks (RNNs).

Two of the most prominent transformer-based LLMs are:

- GPT (Generative Pre-trained Transformer): Developed by OpenAI, the GPT series (GPT-1, GPT-2, GPT-3) are autoregressive language models that are trained to predict the next word in a sequence given the previous words. GPT models have shown remarkable performance in language generation tasks, such as text completion, dialogue response generation, and creative writing.

- BERT (Bidirectional Encoder Representations from Transformers): Developed by Google, BERT is a bidirectional transformer model that is trained on two tasks: masked language modeling (predicting randomly masked words in a sequence) and next sentence prediction (predicting whether two sentences follow each other). BERT has achieved state-of-the-art results on a wide range of NLP tasks, particularly those involving language understanding, such as question answering, sentiment analysis, and named entity recognition.

Additional reading:

- “Attention Is All You Need” by Vaswani et al. (https://arxiv.org/abs/1706.03762)

4.2.2 Self-attention mechanism and its role in LLMs

The self-attention mechanism is a key component of transformer-based LLMs, allowing the model to attend to different parts of the input sequence when processing each word. In self-attention, each word’s representation is multiplied by three learned weight matrices to produce a query vector, a key vector, and a value vector. The attention score between two words is computed as the dot product of the query vector of one word and the key vector of the other, divided by the square root of the key dimension; a softmax then normalizes each word’s scores so that they sum to one. These attention weights determine how much each word’s value vector contributes to the output representation for a given word.
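
To make the computation concrete, here is a minimal single-head sketch in NumPy of the scaled dot-product attention from Vaswani et al., softmax(QKᵀ / √d_k)·V. It assumes random matrices in place of trained weights and omits multiple heads, masking, and positional information, so it illustrates the arithmetic rather than any particular model’s implementation.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max along the axis for numerical stability before exponentiating.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (no masking).

    x:        (seq_len, d_model) input token representations
    w_q, w_k: (d_model, d_k) query/key projection matrices
    w_v:      (d_model, d_v) value projection matrix
    Returns (output, weights) with shapes (seq_len, d_v) and (seq_len, seq_len).
    """
    q = x @ w_q  # one query vector per token
    k = x @ w_k  # one key vector per token
    v = x @ w_v  # one value vector per token
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)     # pairwise query-key dot products, scaled
    weights = softmax(scores, axis=-1)  # each row is a distribution over tokens
    return weights @ v, weights


# Random matrices stand in for learned weights (illustration only).
rng = np.random.default_rng(0)
seq_len, d_model, d_k = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape)  # (4, 8)
```

Each row of `weights` shows how strongly one token attends to every token in the sequence; in a trained model, these are the patterns that attention visualizations display.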

The self-attention mechanism offers several advantages over previous architectures:

- Long-range dependencies: By allowing each word to attend to all other words in the sequence, self-attention can effectively capture long-range dependencies and contextual information, which is crucial for understanding and generating coherent language.

- Parallelization: Unlike RNNs, which process words sequentially, the self-attention mechanism can be computed in parallel for all words in the sequence, enabling much faster training. (During generation, output tokens are still produced one at a time.)

- Interpretability: The attention weights provide a limited degree of interpretability, allowing researchers to visualize which parts of the input sequence the model attends to when making predictions, although attention patterns do not always explain why the model produced a given output.

4.2.3 Scaling up LLMs: model size and computational requirements

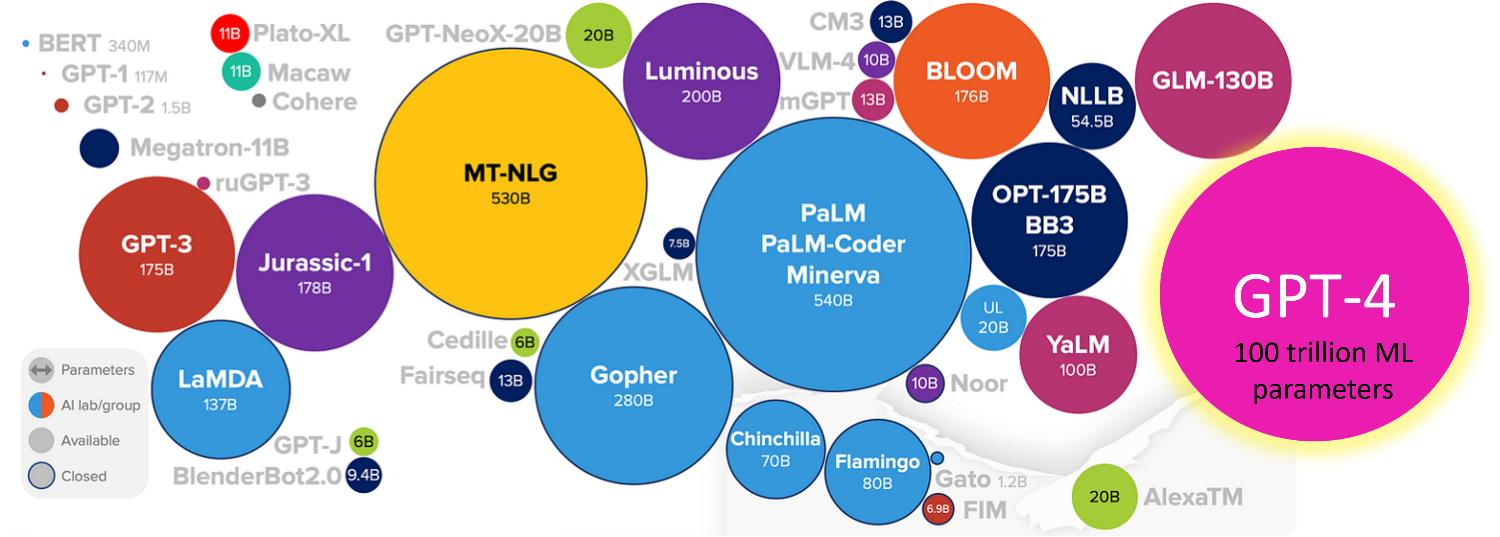

One of the key factors driving the success of LLMs is their scale, both in terms of model size (number of parameters) and the amount of training data. Larger models trained on more data have generally shown better performance on a wide range of NLP tasks, and empirical “scaling laws” describe how a model’s loss tends to decrease predictably as model size, dataset size, and training compute grow.

However, scaling up LLMs comes with significant computational requirements, both during training and inference. Training state-of-the-art LLMs can require thousands of petaflop/s-days of computation (Brown et al. report roughly 3,640 for the 175-billion-parameter GPT-3) and consume vast amounts of energy, raising concerns about the environmental impact and accessibility of these models.
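
A common back-of-the-envelope rule estimates training compute as roughly 6 × N × D floating-point operations, where N is the number of parameters and D is the number of training tokens. The sketch below applies that approximation to the GPT-3 figures reported by Brown et al. (175 billion parameters, about 300 billion tokens). The rule ignores attention overhead and hardware efficiency, so treat the result as an order-of-magnitude estimate.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute: about 6 FLOPs per parameter per token
    (roughly 2 for the forward pass and 4 for the backward pass)."""
    return 6.0 * n_params * n_tokens


def to_petaflop_s_days(flops: float) -> float:
    # One petaflop/s-day = 1e15 FLOP/s sustained for 86,400 seconds.
    return flops / (1e15 * 86_400)


# GPT-3 (Brown et al., 2020): 175B parameters trained on ~300B tokens.
flops = training_flops(175e9, 300e9)
print(f"{flops:.2e} FLOPs")                          # 3.15e+23 FLOPs
print(f"{to_petaflop_s_days(flops):,.0f} PF-days")   # 3,646 PF-days
```

The estimate is in line with the roughly 3.14e23 FLOPs reported in the GPT-3 paper, which is why this approximation is popular for planning training budgets.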

Some approaches to address the computational challenges of LLMs include:

- Model parallelism: Splitting the model across multiple GPUs or TPUs to enable the training of larger models.

- Data parallelism: Distributing the training data across multiple devices to speed up training.

- Mixed-precision training: Using lower-precision floating-point representations (e.g., FP16) to reduce memory usage and speed up computation.

- Knowledge distillation: Transferring the knowledge from a large, pre-trained model to a smaller, more efficient model that can be deployed with lower computational requirements.
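
Knowledge distillation is usually trained with a “soft-target” loss: the student is pushed to match the teacher’s temperature-softened output distribution, not just the correct label. The following pure-Python sketch follows the formulation of Hinton et al. (2015), a KL divergence between the softened distributions scaled by T². The logits are made-up values for a three-class problem; a real setup would compute this over a model’s full vocabulary and typically add a standard cross-entropy term on the true labels.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; temperature > 1 flattens the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Soft-target loss: KL(teacher || student) between temperature-softened
    distributions, scaled by T**2 because soft-target gradients shrink roughly as 1/T**2."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's softened predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


# Hypothetical logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(close_student, teacher) < distillation_loss(far_student, teacher))  # True
```

Raising the temperature spreads probability over the non-top classes, which is where much of the teacher’s information about how classes relate to each other lives.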

4.2.4 Pre-training and fine-tuning techniques

Pre-training and fine-tuning are fundamental techniques in LLM training:

Pre-training

Pre-training involves training the model on a large, general-purpose text corpus using self-supervised learning objectives, such as masked language modeling (MLM), next sentence prediction (NSP), and permutation language modeling (PLM). This allows the model to learn general language representations that can be transferred to various downstream tasks.

- Masked Language Modeling (MLM): In this objective, a portion of the input tokens are randomly masked, and the model is trained to predict the original tokens based on the surrounding context. This allows the model to learn bidirectional representations of language.

- Next Sentence Prediction (NSP): This objective involves training the model to predict whether two sentences follow each other in the original text. This helps the model learn to understand the relationships between sentences and capture coherence.

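- Permutation Language Modeling (PLM): Introduced with XLNet, this objective trains the model to predict tokens autoregressively in a randomly sampled factorization order (a permutation of the prediction order), while each token keeps its original position in the sequence. Because the order varies across training examples, the model learns to use context from both sides of a token without masking it.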
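
The masked language modeling objective is easiest to see at the data level. This sketch follows the corruption scheme described in the BERT paper (select about 15% of positions; replace 80% of those with [MASK], 10% with a random token, and leave 10% unchanged), but it simplifies by selecting each token independently and by working on whole words rather than subword token IDs.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """BERT-style masking (simplified). Returns (corrupted, labels): labels hold the
    original token at positions selected for prediction and None elsewhere."""
    rng = rng or random.Random()
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected; this position is not scored
        labels[i] = tok  # the model must recover this original token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


tokens = "the cat sat on the mat because it was warm".split()
vocab = sorted(set(tokens))
corrupted, labels = mask_tokens(tokens, vocab, rng=random.Random(3))
print(corrupted)
print(labels)
```

Only positions with a label contribute to the training loss. Keeping some selected tokens unchanged or randomly replaced reduces the mismatch between pre-training and fine-tuning, where [MASK] never appears.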

Fine-tuning

Fine-tuning involves adapting the pre-trained LLM to a specific task by training it on a smaller, task-specific labeled dataset. During fine-tuning, the model’s parameters are updated to optimize performance on the target task while leveraging the general language knowledge acquired during pre-training.

The pre-training and fine-tuning paradigm has several advantages, including transfer learning, improved performance, and the ability to perform few-shot and zero-shot learning.

4.2.5 Few-shot, zero-shot, and one-shot learning

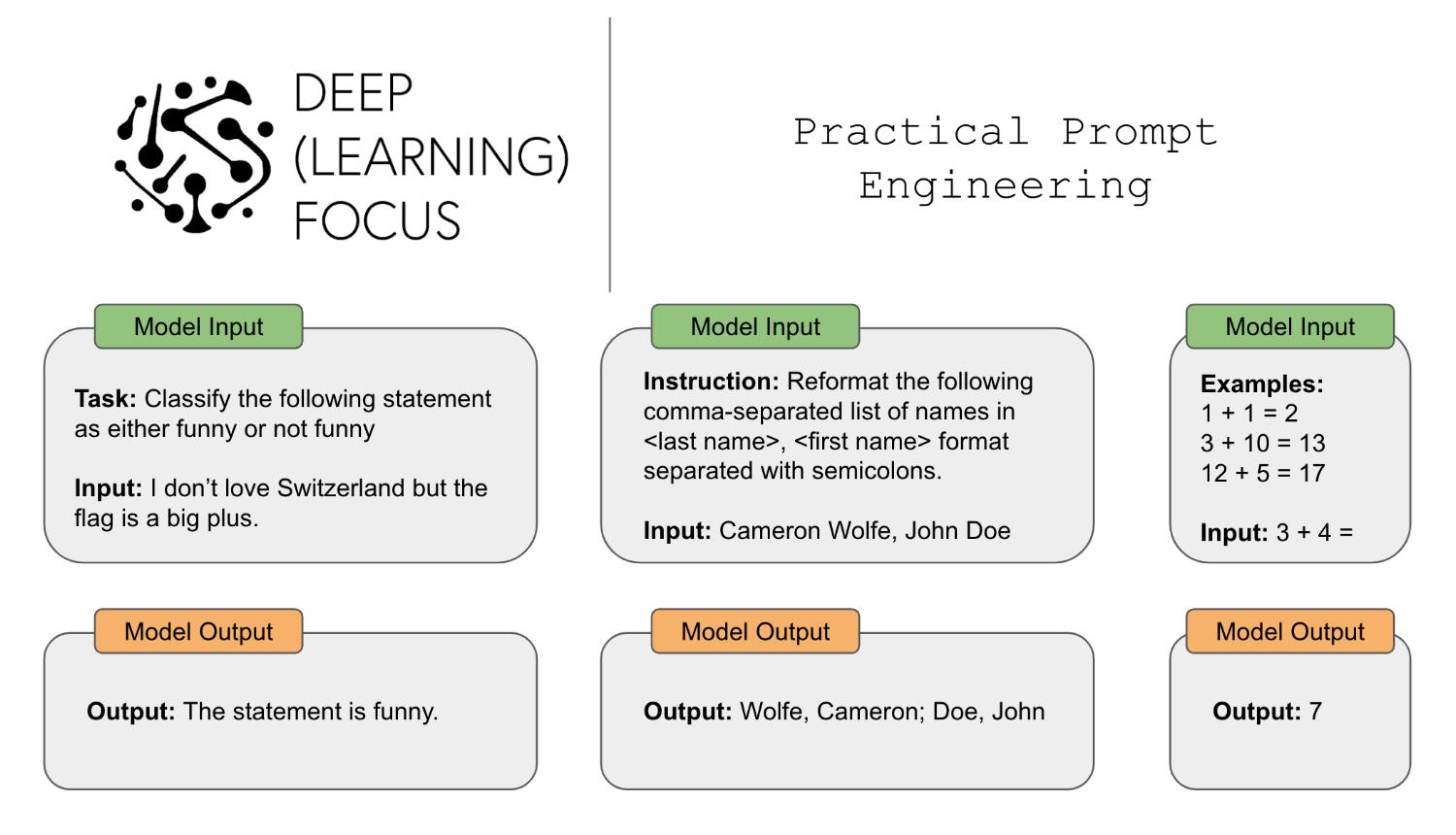

Zero-shot, one-shot, and few-shot learning are techniques that enable LLMs to perform tasks with minimal or no task-specific training data.

- Few-shot Learning: Involves providing the model with a small number of examples in the prompt, which the model can use to understand the task and generate responses. This allows LLMs to adapt to new tasks with just a few examples, leveraging the knowledge gained during pre-training.

- Zero-shot Learning: Involves giving the model no examples and expecting it to perform the task based solely on the instructions provided in the prompt. This demonstrates the model’s ability to understand and follow instructions based on its general language understanding capabilities.

- One-shot Learning: Involves providing the model with a single example in the prompt, which the model can use as a reference to understand the task and generate responses. This demonstrates the model’s ability to grasp the context and requirements of a task with minimal input, showcasing its adaptability and efficiency.
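
The three settings differ only in how many worked examples the prompt contains. The helper below is a hypothetical sketch (the `Input:`/`Output:` layout is an arbitrary choice, not a required format) that builds zero-, one-, and few-shot prompts for the same task. Sending the prompt to a model is left out because that depends on the provider’s API.

```python
def build_prompt(instruction, query, examples=()):
    """Assemble a prompt from an instruction, optional (input, output) examples,
    and the new query. 0 examples -> zero-shot, 1 -> one-shot, several -> few-shot."""
    parts = [instruction.strip()]
    for inp, out in examples:
        parts.append(f"Input: {inp}\nOutput: {out}")
    parts.append(f"Input: {query}\nOutput:")  # the model continues from here
    return "\n\n".join(parts)


instruction = "Classify the sentiment of each review as Positive or Negative."
demos = [("The battery lasts all day.", "Positive"),
         ("It broke after one week.", "Negative")]
query = "Setup was quick and painless."

zero_shot = build_prompt(instruction, query)
one_shot = build_prompt(instruction, query, demos[:1])
few_shot = build_prompt(instruction, query, demos)
print(few_shot)
```

Ending the prompt with `Output:` invites the model to complete the pattern that the examples establish, which is the essence of in-context learning.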

Example:

OpenAI’s GPT-3 paper (Brown et al., 2020) describes this ability as “in-context learning”: given a few examples in the prompt, the model can infer the task and generate appropriate responses without any updates to its parameters, enabling a wide range of applications without extensive fine-tuning.

By understanding and applying these techniques and approaches, you can harness the full potential of LLMs and develop more efficient, adaptable, and powerful language-based AI systems.

4.2.6 Prompt engineering

Prompt engineering is a crucial technique in working with LLMs, involving the design and optimization of prompts to elicit desired behaviors and outputs from the models. Effective prompt engineering requires understanding the capabilities and limitations of LLMs and crafting prompts that provide the necessary context, instructions, and examples for the model to perform a specific task or generate a particular type of output.

Prompt engineering is closely related to few-shot and zero-shot learning, as these techniques rely heavily on well-designed prompts to guide the model toward the desired behavior.

While this module provides an overview of prompt engineering, a separate module and a live workshop explore this topic in greater depth, giving students hands-on experience with various prompting methods.

4.2.7 Cross-task generalization and transfer learning

Cross-task generalization and transfer learning are key techniques that enable LLMs to adapt to new tasks and domains with minimal additional training data.

- Cross-task generalization refers to the ability of advanced LLMs to perform well on tasks they have not been explicitly trained on. This ability is generally attributed to the language understanding and reasoning skills the models internalize during pre-training.

- Transfer learning involves pre-training LLMs on large, general-purpose text corpora and then fine-tuning them on smaller, task-specific datasets. This approach allows LLMs to leverage the knowledge gained during pre-training and adapt it to specific applications, reducing the need for extensive labeled data.

The combination of cross-task generalization and transfer learning enables LLMs to be versatile and adaptable, with applications spanning various industries and domains.

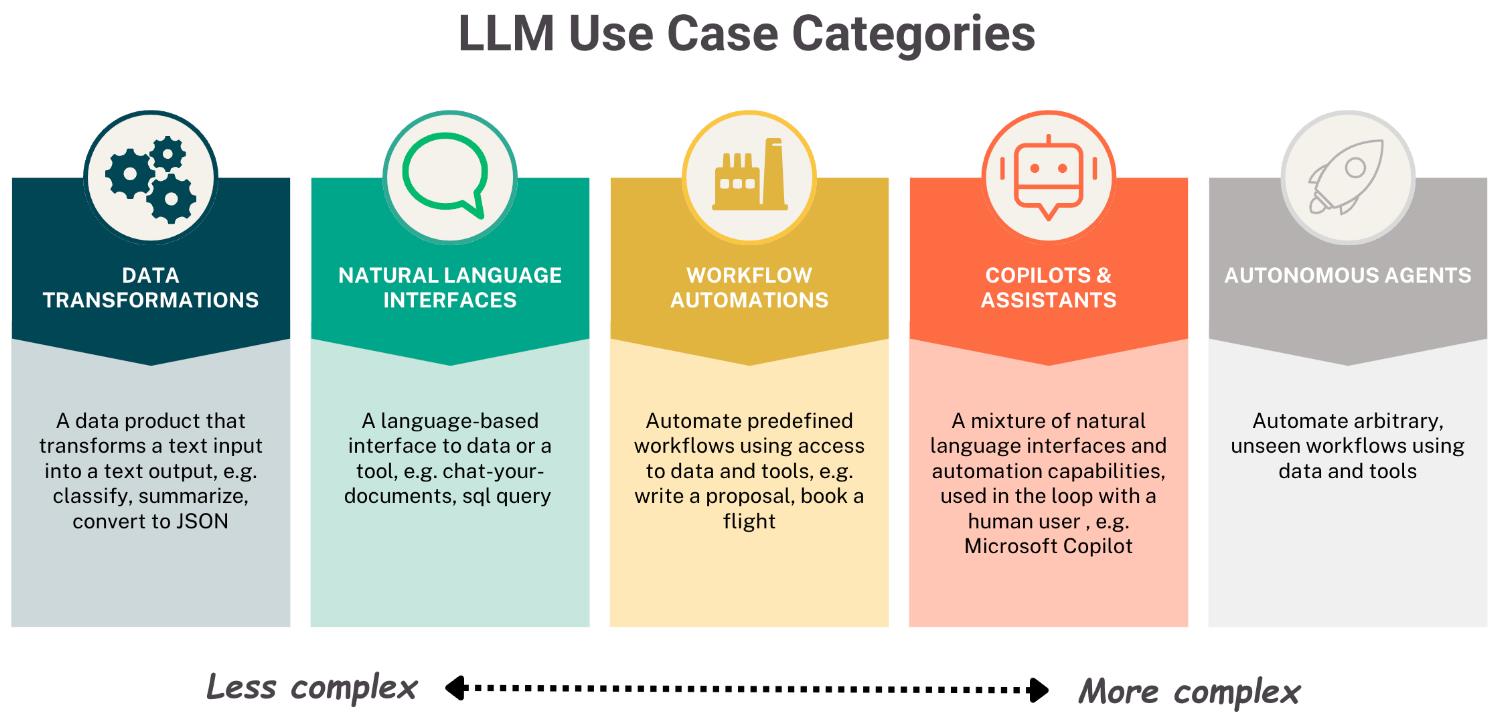

4.3 Capabilities and Applications

The capabilities of Large Language Models (LLMs) extend beyond traditional NLP tasks, enabling a wide range of applications that leverage their ability to understand and generate human-like language. In this section, we will explore some of the key capabilities and applications of LLMs, highlighting their potential to transform various domains through specific real-world examples and case studies.

4.3.1 Text Generation and Completion

One of the most prominent capabilities of LLMs is their ability to generate coherent and contextually relevant text. By learning from vast amounts of text data, LLMs learn to predict a probability distribution over the next word (more precisely, the next token) given a prompt or context. By repeatedly selecting or sampling a word from that distribution and appending it to the input, they generate human-like text that continues the given input.

- Creative writing assistance: LLMs can be used to generate story continuations, poetry, or even entire articles based on a given prompt or theme, assisting writers in overcoming writer’s block and sparking new ideas.

- Content creation: LLMs can help in generating product descriptions, news articles, or marketing copy, reducing the time and effort required for content creation while maintaining quality and consistency.

- Dialogue response generation: In conversational AI systems, LLMs can generate contextually appropriate responses to user queries, enabling more natural and engaging interactions.
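
Generation is a loop: score candidate next words, pick one, append it, and repeat. The sketch below uses a hypothetical hand-written table of next-word scores in place of a neural network, an assumption made for brevity. It shows greedy decoding (temperature 0) and temperature-scaled sampling, two common ways of choosing the next word.

```python
import math
import random

# Hypothetical toy "model": unnormalized scores (logits) for the next word given only
# the current word. A real LLM computes these with a neural network over the full context.
NEXT_WORD_LOGITS = {
    "<s>": {"the": 2.0, "a": 1.0},
    "the": {"cat": 2.0, "dog": 1.5, "mat": 0.5},
    "a": {"cat": 1.0, "dog": 2.0},
    "cat": {"sat": 2.0, "slept": 1.0},
    "dog": {"sat": 1.0, "barked": 2.0},
    "sat": {"</s>": 1.0},
    "slept": {"</s>": 1.0},
    "barked": {"</s>": 1.0},
    "mat": {"</s>": 1.0},
}


def sample_next(logits, temperature, rng):
    words = list(logits)
    if temperature == 0:
        return max(words, key=logits.get)  # greedy: pick the highest-scoring word
    scaled = [logits[w] / temperature for w in words]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]  # unnormalized softmax probabilities
    return rng.choices(words, weights=weights, k=1)[0]


def generate(temperature=1.0, max_words=10, seed=None):
    rng = random.Random(seed)
    word, output = "<s>", []
    for _ in range(max_words):
        word = sample_next(NEXT_WORD_LOGITS[word], temperature, rng)
        if word == "</s>":  # end-of-sequence marker
            break
        output.append(word)
    return " ".join(output)


print(generate(temperature=0))            # the cat sat
print(generate(temperature=1.0, seed=7))  # a sampled continuation
```

Lower temperatures make the output more predictable, while higher temperatures flatten the distribution and produce more varied text, a trade-off that matters when choosing between creative writing and factual completion.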

Example:

OpenAI’s GPT-3 has been used by the company Fable Studio to create an interactive storytelling experience called “Lucy.” Users can engage in a dialogue with the AI-powered character Lucy, who responds to their input and generates a unique story based on the conversation.

4.3.2 Question Answering and Information Retrieval

LLMs have shown strong performance in question-answering tasks, where the model is required to provide accurate answers to questions based on a given context or knowledge base. By drawing on the provided context and the knowledge acquired during pre-training, LLMs can generate fluent, human-like answers, although these answers can still contain factual errors.

- Virtual assistants and chatbots: LLMs can power conversational AI systems that can answer user queries, provide recommendations, and assist with various tasks, enhancing user experience and productivity.

- Knowledge base querying: LLMs can be used to query structured and unstructured knowledge bases, enabling users to access relevant information quickly and easily.

- Educational and tutorial systems: LLMs can be integrated into educational platforms to provide personalized guidance, answer student questions, and offer explanations on complex topics.
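
Many question-answering systems first retrieve relevant passages and then ask the model to answer from them, a pattern often called retrieval-augmented generation. This minimal sketch assumes a tiny in-memory knowledge base and uses simple word overlap as the retriever (production systems typically use BM25 or embedding search). It only builds the prompt and does not call a model.

```python
import re


def word_set(text):
    # Lowercase alphanumeric words; a crude stand-in for a real tokenizer.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, passages, k=2):
    """Rank passages by how many words they share with the question; return the top k."""
    q = word_set(question)
    ranked = sorted(passages, key=lambda p: len(q & word_set(p)), reverse=True)
    return ranked[:k]


def build_qa_prompt(question, passages, k=2):
    context = "\n".join(f"- {p}" for p in retrieve(question, passages, k))
    return ("Answer the question using only the context below. "
            "If the context does not contain the answer, say you don't know.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}\nAnswer:")


# Hypothetical knowledge base.
knowledge_base = [
    "The transformer architecture was introduced by Vaswani et al. in 2017.",
    "BERT is pre-trained with masked language modeling and next sentence prediction.",
    "GPT models are autoregressive and predict the next token.",
]
prompt = build_qa_prompt("Who introduced the transformer architecture?", knowledge_base, k=1)
print(prompt)
```

Instructing the model to answer only from the retrieved context, and to admit when the answer is missing, is a simple way to ground its responses in a known source.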

Example:

AI research company Anthropic has developed an AI assistant called Claude, which uses advanced language models to answer questions, provide explanations, and assist with various tasks. Claude has been deployed in several applications, including a customer support chatbot for the meditation app Headspace.

4.3.3 Text Summarization and Paraphrasing

LLMs have the ability to understand and distill the key information from long passages of text, enabling them to generate concise summaries that capture the main ideas and essential details. This capability is particularly valuable in scenarios where quick information retrieval and comprehension are crucial.

- News and article summarization: LLMs can automatically generate summaries of news articles, research papers, or lengthy reports, allowing users to quickly grasp the main points without reading the entire document.

- Meeting and lecture notes: LLMs can summarize transcripts of meetings, lectures, or conference calls, highlighting the key takeaways and action items for participants.

- Text simplification and translation: LLMs can paraphrase complex text into simpler language, making it more accessible to a wider audience, or translate text from one language to another while preserving the original meaning.

Example:

The legal technology company Casetext uses LLMs to automatically summarize legal documents, such as court opinions and briefs. This allows legal professionals to quickly grasp the key points of lengthy documents, saving time and improving efficiency.

4.3.4 Dialogue Systems and Chatbots

LLMs have revolutionized the development of dialogue systems and chatbots, enabling more natural and context-aware conversations between humans and machines. By understanding the user’s intent, maintaining context across multiple turns, and generating human-like responses, LLMs enable more meaningful and productive interactions.

- Customer support and service: LLMs can power chatbots that handle customer inquiries, provide information, and assist with troubleshooting, reducing the workload on human support staff and improving customer satisfaction.

- Mental health and therapy: Conversational AI systems powered by LLMs can provide emotional support, offer coping strategies, and guide users through mental health challenges, complementing traditional therapy methods.

- Language learning and practice: LLMs can engage learners in natural conversations, provide feedback on grammar and vocabulary (and, when combined with speech recognition, on pronunciation), and adapt to the learner’s level, enhancing the language learning experience.
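
Maintaining context across turns usually means resending the conversation history with every request, and that history must fit in the model’s context window. The sketch below keeps the system prompt plus the most recent turns that fit a token budget. `count_tokens` is a hypothetical whitespace-based stand-in for a real tokenizer, and dropping the oldest turns is only one strategy (others summarize them instead).

```python
def count_tokens(text):
    # Hypothetical stand-in: real systems count tokens with the model's own tokenizer.
    return len(text.split())


def fit_history(system_prompt, turns, budget):
    """Return the system prompt plus as many of the most recent turns as fit in budget.
    turns: list of (role, text) pairs, oldest first."""
    used = count_tokens(system_prompt)
    kept = []
    for role, text in reversed(turns):  # walk backwards from the newest turn
        cost = count_tokens(text)
        if used + cost > budget:
            break  # this turn and all older ones are dropped
        kept.append((role, text))
        used += cost
    return [("system", system_prompt)] + list(reversed(kept))


turns = [
    ("user", "Hi, I need help with my order."),
    ("assistant", "Sure, what is your order number?"),
    ("user", "It is 12345 and it has not arrived."),
]
context = fit_history("You are a helpful support agent.", turns, budget=20)
for role, text in context:
    print(f"{role}: {text}")
```

Walking backwards guarantees that the newest turns, which usually matter most for the next reply, are the last to be dropped.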

Example:

Mental health platform Woebot uses an AI-powered chatbot to deliver cognitive behavioral therapy (CBT) techniques and support to users. The chatbot uses natural language processing to interpret users’ messages, engages them in personalized conversations, offers coping strategies, and helps them track their mood and progress.

4.3.5 Creative Writing and Content Generation

LLMs have shown impressive capabilities in creative writing tasks, such as poetry generation and storytelling, as well as in code generation. By learning the patterns, styles, and structures of various forms of creative expression, LLMs can generate novel and engaging content that, in some cases, approaches the quality of human-written work.

- Automated journalism: LLMs can generate news articles, reports, and even entire books based on structured data and templates, streamlining the content creation process and enabling personalized content delivery.

- Creative ideation and brainstorming: LLMs can assist writers, artists, and designers in generating new ideas, concepts, and inspiration by providing prompts, suggestions, and variations on a given theme.

- Code generation and completion: LLMs trained on large codebases can generate code snippets, complete functions, and even entire programs based on natural language descriptions, accelerating software development and reducing the burden on programmers.

Example:

The AI-powered writing assistant Jasper.ai uses LLMs to help content creators generate high-quality articles, social media posts, and marketing copy. The tool can adapt to different writing styles and genres, providing users with creative ideas and suggestions to overcome writer’s block and improve their content.

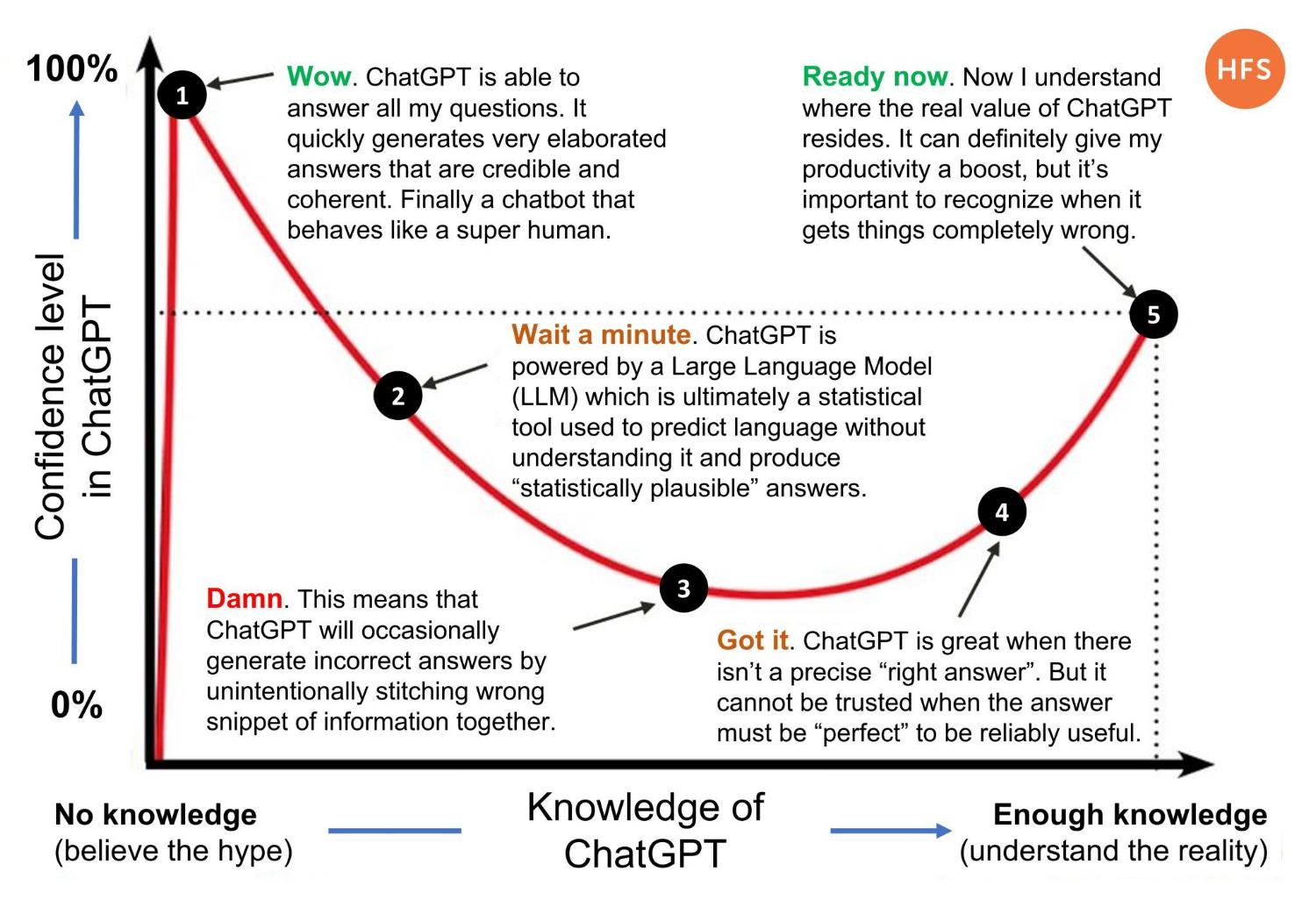

4.4 Limitations and Challenges

Despite the remarkable capabilities and applications of Large Language Models (LLMs), several limitations and challenges need to be addressed to ensure their responsible development and deployment. In this section, we will explore some of the key issues surrounding LLMs, including bias and fairness, hallucination and factual inaccuracies, computational cost and environmental impact, and interpretability and explainability.

4.4.1 Bias and Fairness in LLMs

One of the primary concerns with LLMs is their potential to perpetuate and amplify biases present in the training data. As LLMs learn from vast amounts of text data gathered from the internet and other sources, they can inadvertently pick up and reproduce societal biases related to gender, race, ethnicity, age, and other protected attributes. This can lead to unfair and discriminatory outcomes in applications such as job candidate screening, content moderation, and personal assistants.

Addressing bias and ensuring fairness in LLMs requires a multi-faceted approach, including:

- Diversifying training data: Ensuring that the training data used for LLMs is diverse, representative, and inclusive of different perspectives and demographics.

- Bias detection and mitigation: Developing techniques to identify and quantify biases in LLMs and implementing strategies to mitigate their impact, such as data preprocessing, model regularization, and post-processing adjustments.

- Fairness evaluation and auditing: Regularly assessing the fairness and non-discrimination properties of LLMs across different subgroups and contexts, and conducting independent audits to identify potential biases and harms.

- Transparency and accountability: Encouraging transparency in the development and deployment of LLMs, including the disclosure of training data sources, model architectures, and performance metrics, and establishing accountability mechanisms to address bias-related harms.
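
One established way to quantify bias in word embeddings is the Word Embedding Association Test (WEAT) of Caliskan et al. (2017), which measures whether one set of target words (for example, occupations) is more strongly associated with one set of attribute words than with another. The sketch below computes the WEAT effect size. The two-dimensional vectors are invented for illustration and deliberately biased; a real audit would use embeddings taken from the model under study.

```python
import math
from statistics import mean, stdev


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(w, attrs_a, attrs_b):
    """s(w, A, B): mean similarity of w to attribute set A minus mean similarity to B."""
    return (mean(cosine(w, a) for a in attrs_a)
            - mean(cosine(w, b) for b in attrs_b))


def weat_effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association between target sets X and Y, divided by the
    standard deviation over X and Y combined (sample std here; implementations vary)."""
    s_x = [association(x, attrs_a, attrs_b) for x in targets_x]
    s_y = [association(y, attrs_a, attrs_b) for y in targets_y]
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)


# Hypothetical 2-D embeddings, constructed so that X leans toward A and Y toward B.
male_attrs = [(1.0, 0.1), (0.9, 0.2)]     # e.g. "he", "man"
female_attrs = [(0.1, 1.0), (0.2, 0.9)]   # e.g. "she", "woman"
occupations_x = [(0.8, 0.3), (0.9, 0.1)]  # e.g. "engineer", "pilot"
occupations_y = [(0.3, 0.8), (0.2, 0.9)]  # e.g. "nurse", "librarian"
effect = weat_effect_size(occupations_x, occupations_y, male_attrs, female_attrs)
print(f"WEAT effect size: {effect:.2f}")
```

An effect size near zero suggests no measured association, while large positive or negative values indicate that the target groups are systematically closer to one attribute set, which is the kind of signal a fairness audit would track before and after mitigation.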

Additional reading:

- “Towards Debiasing Sentence Representations” by Liang et al. (https://arxiv.org/abs/2007.08100)

4.4.2 Hallucination and Factual Inaccuracies

Another challenge with LLMs is their propensity to generate plausible but factually incorrect or inconsistent text, a phenomenon known as hallucination. While LLMs can produce fluent and coherent language, they may sometimes generate statements that are not supported by the input data or external knowledge, leading to the spread of misinformation and erroneous conclusions.

Addressing hallucination and factual inaccuracies in LLMs requires:

- Improved fact-checking and verification: Developing techniques to cross-reference the generated text against reliable sources of information and flag potential inaccuracies or inconsistencies.

- Knowledge grounding and integration: Incorporating external knowledge bases and structured data into the training and inference process of LLMs to provide additional context and constraints on the generated text.

- Human oversight and intervention: Implementing human-in-the-loop systems that allow for manual review and correction of the generated text, especially in high-stakes applications such as news reporting or medical advice.

- Uncertainty estimation and calibration: Developing methods to estimate the uncertainty and confidence of LLMs in their generated text, and calibrating their outputs to align with the true likelihood of correctness.
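
Calibration can be measured with expected calibration error (ECE): group predictions into confidence bins and compare each bin’s average confidence with its actual accuracy. The sketch below is a minimal pure-Python version with equal-width bins. How confidence scores are obtained from an LLM (for example, from token probabilities) is outside its scope, and the sample data are hypothetical.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average |confidence - accuracy| over equal-width confidence bins.
    confidences: floats in [0, 1]; correct: booleans of the same length."""
    n = len(confidences)
    ece = 0.0
    for b in range(n_bins):
        lo, hi = b / n_bins, (b + 1) / n_bins
        # Each bin is [lo, hi); a confidence of exactly 1.0 goes into the last bin.
        idx = [i for i, c in enumerate(confidences)
               if lo <= c < hi or (b == n_bins - 1 and c == hi)]
        if not idx:
            continue
        avg_conf = sum(confidences[i] for i in idx) / len(idx)
        accuracy = sum(correct[i] for i in idx) / len(idx)
        ece += len(idx) / n * abs(avg_conf - accuracy)
    return ece


# Hypothetical results: 90% confidence but only 6 of 10 answers correct.
overconfident = expected_calibration_error([0.9] * 10, [True] * 6 + [False] * 4)
# Hypothetical results: 50% confidence and exactly half correct.
calibrated = expected_calibration_error([0.5] * 4, [True, False, True, False])
print(round(overconfident, 2), round(calibrated, 2))
```

A model that claims 90% confidence but is right only 60% of the time scores an ECE of about 0.3, while a perfectly calibrated one scores 0; calibration methods aim to shrink that gap.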

Additional reading:

- “Evaluating Factuality in Generation with Dependency-level Entailment” by Goyal and Durrett (https://arxiv.org/abs/2010.03001)

4.4.3 Computational cost and environmental impact

Training and deploying state-of-the-art LLMs requires significant computational resources, often involving hundreds or thousands of GPUs or TPUs running for several weeks or months. This has raised concerns about the financial cost and environmental impact of developing and using these models, as the energy consumption and carbon footprint associated with large-scale AI training can be substantial.

Addressing the computational cost and environmental impact of LLMs requires:

- Efficient model architectures and training techniques: Developing more parameter-efficient and computationally efficient model architectures and training techniques that can reduce the computational burden of LLMs without sacrificing performance.

- Hardware optimization and co-design: Optimizing hardware and software infrastructure for AI workloads, and co-designing models and hardware to improve efficiency and reduce energy consumption.

- Sustainable AI practices: Encouraging the use of renewable energy sources for AI workloads, and promoting sustainable practices in data center operations and waste management.

- Collaborative and shared resources: Fostering collaboration and resource sharing among AI researchers and practitioners to reduce duplicated efforts and optimize the use of computational resources.
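
The environmental cost of a training run can be approximated from hardware power draw, run time, and data center overhead, the same ingredients Strubell et al. use in their estimates. In the sketch below every number is a hypothetical placeholder (1,000 accelerators, 400 W each, 30 days, a power usage effectiveness of 1.1, and two illustrative grid carbon intensities). It shows why the same run can have very different footprints depending on the energy that powers it.

```python
def training_energy_kwh(num_accelerators, watts_per_accelerator, hours, pue=1.1):
    """Grid energy for a run: device power x time, scaled by the data center's
    power usage effectiveness (PUE >= 1.0) to cover cooling and other overhead."""
    return num_accelerators * watts_per_accelerator * hours * pue / 1000.0


def emissions_kg_co2e(energy_kwh, kg_co2e_per_kwh):
    # Carbon intensity depends heavily on the local grid's energy mix.
    return energy_kwh * kg_co2e_per_kwh


# Hypothetical run: 1,000 accelerators at 400 W for 30 days.
energy = training_energy_kwh(1_000, 400, 30 * 24, pue=1.1)
print(f"energy: {energy:,.0f} kWh")
for label, intensity in [("fossil-heavy grid", 0.8), ("low-carbon grid", 0.05)]:
    print(f"{label}: {emissions_kg_co2e(energy, intensity) / 1000:,.1f} t CO2e")
```

Because energy use is identical in both cases, the difference in emissions comes entirely from the carbon intensity of the power source, which is the motivation for running AI workloads on renewable energy.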

Additional reading:

- “Energy and Policy Considerations for Deep Learning in NLP” by Strubell et al. (https://arxiv.org/abs/1906.02243)

4.4.4 Interpretability and Explainability of LLMs

The inner workings of LLMs can be difficult to interpret and explain, as they involve complex neural network architectures with millions or billions of parameters. This lack of transparency can hinder the trust and accountability of LLMs, especially in high-stakes applications where the reasoning behind the model’s outputs needs to be clearly understood and validated.

Addressing the interpretability and explainability of LLMs requires:

- Developing interpretable and explainable models: Building interpretability into LLMs where possible, and applying analysis tools such as attention visualization, feature attribution, and concept activation vectors, can provide insights into the model’s decision-making process.

- Post-hoc explanation techniques: Developing techniques to generate human-understandable explanations of the model’s outputs, such as natural language explanations, counterfactual examples, and rule extraction.

- Human-centered evaluation and validation: Engaging stakeholders and domain experts in the evaluation and validation of LLMs, and incorporating their feedback and insights into the model development and deployment process.

- Regulatory and ethical frameworks: Establishing regulatory and ethical frameworks that mandate transparency, accountability, and explainability in the development and use of LLMs, and ensuring compliance with these frameworks through regular audits and assessments.
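Occlusion (leave-one-out) attribution is one simple, model-agnostic form of feature attribution. It removes each input token in turn and measures how much the model's score changes. In the sketch below, the "model" is a hypothetical bag-of-words sentiment scorer with made-up weights. For a real LLM, `score_fn` might instead return the log-probability of a particular output, at the cost of one forward pass per token.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy scorer with made-up weights, standing in for a real model.
TOY_WEIGHTS: Dict[str, float] = {"great": 2.0, "terrible": -2.5, "not": -1.0, "movie": 0.1}


def toy_score(tokens: List[str]) -> float:
    """Sum of per-token weights; unknown tokens contribute nothing."""
    return sum(TOY_WEIGHTS.get(t, 0.0) for t in tokens)


def occlusion_attributions(
    tokens: List[str], score_fn: Callable[[List[str]], float]
) -> List[Tuple[str, float]]:
    """Attribution of token i = score(all tokens) - score(tokens without token i)."""
    base = score_fn(tokens)
    attributions = []
    for i, tok in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]
        attributions.append((tok, base - score_fn(reduced)))
    return attributions


for token, weight in occlusion_attributions("not a great movie".split(), toy_score):
    print(f"{token:>6}: {weight:+.2f}")
```

Because this toy scorer is linear, each attribution simply recovers the token's weight. In a non-linear model, removing one token can also change how the remaining tokens are processed. Occlusion scores are therefore only an approximate explanation.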

Additional reading:

- “Towards Explainable NLP: A Generative Explanation Framework for Text Classification” by Liu et al. (https://arxiv.org/abs/1911.03186)

As LLMs continue to advance and become more widely adopted, addressing these limitations and challenges will be crucial to ensure their responsible and beneficial use. This will require ongoing collaboration among researchers, practitioners, policymakers, and society at large to develop technical solutions, establish best practices, and promote a culture of transparency and accountability in the development and deployment of these powerful technologies.

4.5 Notable LLMs and their Impact

The field of Large Language Models (LLMs) has witnessed remarkable progress in recent years, with several notable models pushing the boundaries of language understanding and generation. In this section, we will explore some of the most prominent LLMs and their impact on the field of natural language processing (NLP) and beyond.

4.5.1 GPT series (GPT-2, GPT-3, GPT-4, and GPT-5)

The GPT (Generative Pre-trained Transformer) series, developed by OpenAI, has been at the forefront of LLM research and development. These models have demonstrated impressive language generation capabilities and have achieved state-of-the-art results on many NLP benchmarks.

- GPT-2 (2019): GPT-2 was a significant milestone in LLM development, with 1.5 billion parameters and the ability to generate coherent and contextually relevant text. Its release sparked important discussions about the potential misuse of language models and the need for responsible AI development.

- GPT-3 (2020): GPT-3, with 175 billion parameters, pushed the boundaries of language modeling further. It demonstrated strong few-shot and zero-shot learning: given only a task description, optionally followed by a handful of examples in the prompt, it could perform many tasks without any task-specific fine-tuning. GPT-3 has been applied to a wide range of applications, from creative writing and code generation to question answering and language translation.

- GPT-4 (2023): OpenAI has not disclosed GPT-4's size or architecture. Unconfirmed reports suggest roughly 1.7 trillion parameters, organized as a Mixture of Experts (MoE) of eight models with about 220 billion parameters each. That would make it about ten times larger than GPT-3. At launch, its largest variant accepted a context window of 32,768 tokens (roughly 25,000 words), compared with 2,048 tokens for GPT-3.

- GPT-5 (future): At the time of writing, OpenAI had announced that GPT-5 was in development. Unofficial estimates put it at 2 to 5 trillion parameters, although OpenAI has not confirmed these figures. The model is expected to deliver more reliable and accurate outputs than its predecessors. The planned improvements focus on reducing errors, improving consistency across tasks, and handling more sophisticated and nuanced interactions with users.

- OpenAI Future: OpenAI has reportedly filed trademark applications for GPT-6 and GPT-7. The filings suggest longer-term plans for these next-generation models, although a filing alone does not confirm that development is under way. OpenAI has also previewed Sora, a text-to-video model that was unreleased at the time of writing, which hints at its broader ambitions in multimodal AI.
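Few-shot and zero-shot use differ only in what the prompt contains. Task examples are placed in the input text, and the model's weights are not updated. The sketch below builds both kinds of prompt as plain strings. The `Input:`/`Output:` layout, the function name, and the translation pairs are our own illustrative choices. Sending the prompt to a model is omitted because that step depends on the API in use.

```python
from typing import List, Tuple


def build_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a prompt: instruction, then solved examples, then the open query.
    With no examples this is a zero-shot prompt; with k examples it is k-shot."""
    lines = [instruction.strip(), ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


zero_shot = build_prompt("Translate English to French.", [], "cheese")
few_shot = build_prompt(
    "Translate English to French.",
    [("sea otter", "loutre de mer"), ("bread", "pain")],
    "cheese",
)
print(zero_shot)
print("---")
print(few_shot)
```

The model is expected to continue the text after the final `Output:`. Any benefit from adding examples comes from conditioning on them in context, not from fine-tuning.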

Additional reading:

- “Language Models are Few-Shot Learners” by Brown et al. (https://arxiv.org/abs/2005.14165)

4.5.2 BERT and its variants (RoBERTa, ALBERT)

BERT (Bidirectional Encoder Representations from Transformers), developed by Google, has revolutionized the field of NLP by enabling bidirectional language understanding and achieving state-of-the-art performance on a wide range of tasks.

- BERT (2018): BERT introduced deep bidirectional pre-training. It masks some input tokens and trains the model to predict them (masked language modeling), so every layer can condition on both left and right context simultaneously. This approach led to significant improvements in tasks such as question answering, natural language inference, and named entity recognition.

- RoBERTa (2019): RoBERTa (Robustly Optimized BERT Pretraining Approach), developed by Facebook AI, is an optimized version of BERT. It achieves better performance by training longer on larger datasets, using dynamic masking, removing the next-sentence prediction objective, and tuning the hyperparameters more carefully.

- ALBERT (2019): ALBERT (A Lite BERT) is a more parameter-efficient version of BERT that achieves similar performance with significantly fewer parameters. This is achieved through cross-layer parameter sharing and factorized embedding parameterization, making ALBERT more computationally efficient and easier to deploy in resource-constrained environments.
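A few lines of code can sketch BERT's masked language modeling and RoBERTa's dynamic masking. Following the recipe described in the BERT paper, about 15% of positions become prediction targets. Of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. This sketch is not the reference implementation. It draws each position independently (an approximation of selecting a fixed 15%), works on whole words rather than subword IDs, and uses a hypothetical `mask_tokens` helper. Static masking would call the helper once during preprocessing. Dynamic masking, as in RoBERTa, draws a fresh mask every time a sequence is used.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(
    tokens: List[str],
    vocab: List[str],
    rng: random.Random,
    mask_prob: float = 0.15,
) -> Tuple[List[str], List[Optional[str]]]:
    """Return (model inputs, labels); labels are None where no prediction is made."""
    inputs = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                       # position is not a prediction target
        labels[i] = tok                    # the model must recover the original token
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK               # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token as input
    return inputs, labels


sentence = "the model sees both the left and the right context".split()
rng = random.Random(0)
# Dynamic masking: a new mask pattern is drawn each time the sentence is used.
for epoch in range(3):
    masked, _ = mask_tokens(sentence, vocab=sentence, rng=rng)
    print(epoch, " ".join(masked))
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot rely on `[MASK]` to mark which positions matter. This matters because `[MASK]` never appears during fine-tuning.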

Additional reading:

- “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” by Devlin et al. (https://arxiv.org/abs/1810.04805)

4.5.3 T5 and other multi-task LLMs

T5 (Text-to-Text Transfer Transformer), developed by Google, is a unified framework that treats all NLP tasks as text-to-text problems, enabling the model to be fine-tuned on a wide range of tasks with a single architecture.

- T5 (2020): T5 has demonstrated state-of-the-art performance on a variety of NLP tasks, including question answering, language translation, and summarization. By framing all tasks as text-to-text problems, T5 can be easily adapted to new tasks and domains, making it a versatile and powerful tool for NLP researchers and practitioners.
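In practice, the text-to-text idea is a data-formatting convention: every example becomes an input string with a task prefix, paired with a target string. The sketch below uses prefixes in the style of the T5 paper's examples. The task names, record field names, and the `to_text_to_text` helper are hypothetical. A real pipeline would tokenize these strings and feed them to a single encoder-decoder model.

```python
from typing import Any, Dict, Tuple


def to_text_to_text(task: str, example: Dict[str, Any]) -> Tuple[str, str]:
    """Map a task-specific record to an (input text, target text) pair."""
    if task == "translate_en_de":
        return f"translate English to German: {example['en']}", example["de"]
    if task == "summarize":
        return f"summarize: {example['document']}", example["summary"]
    if task == "cola":
        # Even a classification label is emitted as text.
        target = "acceptable" if example["label"] == 1 else "not acceptable"
        return f"cola sentence: {example['sentence']}", target
    raise ValueError(f"unknown task: {task}")


pairs = [
    to_text_to_text("translate_en_de", {"en": "That is good.", "de": "Das ist gut."}),
    to_text_to_text("cola", {"sentence": "The course is jumping well.", "label": 0}),
]
for source, target in pairs:
    print(f"{source!r} -> {target!r}")
```

Inputs and outputs are always strings. Supporting a new task therefore means choosing a prefix and a target format, not adding a task-specific output layer.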

Other notable pre-trained models from the same period include:

- XLNet (2019): Developed by Google and Carnegie Mellon University, XLNet combines the strengths of autoregressive and autoencoding models, achieving state-of-the-art performance on various NLP tasks.

- ELECTRA (2020): Developed by Google, ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately) is a more sample-efficient pre-training approach that learns to distinguish real input tokens from generated replacements, achieving competitive performance with less computational cost.
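ELECTRA's replaced token detection objective turns pre-training into per-token binary classification. A small generator fills in some masked positions, and the discriminator labels every token as original or replaced. The sketch below computes only those labels, using a toy sentence. The generator output is written by hand rather than sampled, and the helper name is hypothetical.

```python
from typing import List


def replaced_token_labels(original: List[str], corrupted: List[str]) -> List[int]:
    """1 where the corrupted sequence differs from the original, else 0.
    If the generator happens to sample the original token, it counts as original."""
    if len(original) != len(corrupted):
        raise ValueError("sequences must have the same length")
    return [int(o != c) for o, c in zip(original, corrupted)]


original = "the chef cooked the meal".split()
# Hand-written generator output: position 0 was masked but refilled with the
# original word; position 2 was masked and filled with a plausible replacement.
corrupted = "the chef ate the meal".split()
print(list(zip(corrupted, replaced_token_labels(original, corrupted))))
```

In masked language modeling, the loss covers only the roughly 15% of masked positions. The ELECTRA discriminator instead receives a training signal at every position, which is the main source of its sample efficiency.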

Additional reading:

- “Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer” by Raffel et al. (https://arxiv.org/abs/1910.10683)

4.5.4 Future directions and potential breakthroughs in LLMs

The field of LLMs is rapidly evolving, with new architectures, training techniques, and applications emerging at an unprecedented pace. Some of the future directions and potential breakthroughs in LLMs include:

- Multimodal LLMs: Integrating language models with other modalities, such as vision and speech, to enable more comprehensive and contextual understanding of the world.

- Knowledge-enhanced LLMs: Incorporating structured and unstructured knowledge into LLMs to improve their factual accuracy, consistency, and reasoning capabilities.

- Efficient and sustainable LLMs: Developing more computationally efficient and environmentally sustainable approaches to training and deploying LLMs, such as model compression, quantization, and energy-efficient hardware.

- Explainable and trustworthy LLMs: Advancing techniques for interpreting and explaining the behavior of LLMs, and establishing frameworks for ensuring their trustworthiness, fairness, and alignment with human values.

- Novel applications and use cases: Exploring new applications and use cases for LLMs, such as personalized education, mental health support, and scientific discovery, and developing domain-specific LLMs tailored to the unique needs and challenges of different industries and communities.
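Symmetric per-tensor int8 quantization illustrates the quantization approach listed above. Each weight is divided by a scale derived from the largest absolute weight and rounded to an integer in [-127, 127]. The integer is multiplied back by the scale when the weight is used. This is a minimal sketch on a plain Python list, and the helper names are our own. Production schemes typically use per-channel or per-group scales and calibration data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer, then clamp to guard against float overshoot.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integers back to approximate float weights."""
    return [v * scale for v in q]


def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Storage needed for the weights alone, in gigabytes."""
    return n_params * bytes_per_param / 1e9


weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_err:.4f}")

# Storage for a 175B-parameter model: fp32 (4 bytes) vs int8 (1 byte) weights.
print(weight_memory_gb(175e9, 4), weight_memory_gb(175e9, 1))
```

Storing each weight in one byte instead of four cuts weight memory about fourfold (700 GB versus 175 GB at this scale). The cost is a rounding error of at most half the scale per weight.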

As the field of LLMs continues to advance, it is essential for researchers, practitioners, and policymakers to collaborate and engage in multidisciplinary dialogues to ensure the responsible development and deployment of these powerful technologies. By addressing the limitations and challenges of LLMs and leveraging their capabilities for positive impact, we can unlock the full potential of language-based AI and create a future where humans and machines can communicate and collaborate more effectively than ever before.

4.6 Summary

In this module, we explored the capabilities of Large Language Models (LLMs) and their transformative impact on Natural Language Processing (NLP) and beyond. Here are the key takeaways:

- LLM Fundamentals: We learned that LLMs are deep learning models with vast numbers of parameters, trained on massive text data to capture the intricacies of human language. Their key characteristics include size and complexity, unsupervised (more precisely, self-supervised) pre-training, versatility, and contextual understanding.

- Architecture and Training: LLMs leverage transformer architectures with self-attention mechanisms to effectively capture long-range dependencies. Their training involves pre-training on large corpora, followed by fine-tuning on specific tasks, enabling transfer learning and few-shot capabilities.

- Capabilities and Applications: LLMs excel in tasks like text generation, question answering, summarization, dialogue systems, and content creation. Their human-like language understanding and generation open up new possibilities across industries, from customer support and education to creative writing and code generation.

- Limitations and Challenges: While powerful, LLMs face limitations, including bias and fairness concerns, hallucinations and factual inaccuracies, computational costs, and interpretability challenges. Addressing these issues is crucial for responsible development and deployment.

- Notable Models and Impact: We explored groundbreaking LLMs like GPT, BERT, and T5, which have pushed the boundaries of NLP and demonstrated the potential of these models in various applications.

- Future Directions: The field of LLMs is rapidly evolving, with future directions including multimodal integration, knowledge enhancement, and novel applications in areas like personalized education, mental health support, and scientific discovery.

As LLMs continue to advance, it’s essential to collaborate across disciplines, addressing ethical considerations and ensuring responsible development of these powerful language technologies. By understanding LLMs’ capabilities, limitations, and potential, we can harness their power to create intelligent, efficient, and empowering language-based applications that enhance our lives and work.