Module 9: Ethical & Security Considerations

In this module, we’ll explore the crucial role of ethics and security in the development and use of AI technologies. As AI becomes increasingly integrated into many aspects of our daily lives, it’s essential to understand the potential benefits, challenges, and risks associated with these powerful tools.

- Goal: The goal of this module is to equip learners with a comprehensive understanding of the ethical and security considerations surrounding everyday AI, enabling them to make informed decisions and actively participate in shaping the responsible development and deployment of AI technologies.

- Objective: By the end of this module, learners will understand the seven key requirements for trustworthy AI, identify and mitigate privacy and security risks associated with AI prompting, recognize the potential benefits and challenges of AI for individuals and society, adopt best practices for protecting personal data and maintaining security when interacting with AI systems, and engage in informed discussions about the ethical and secure development and deployment of AI technologies.

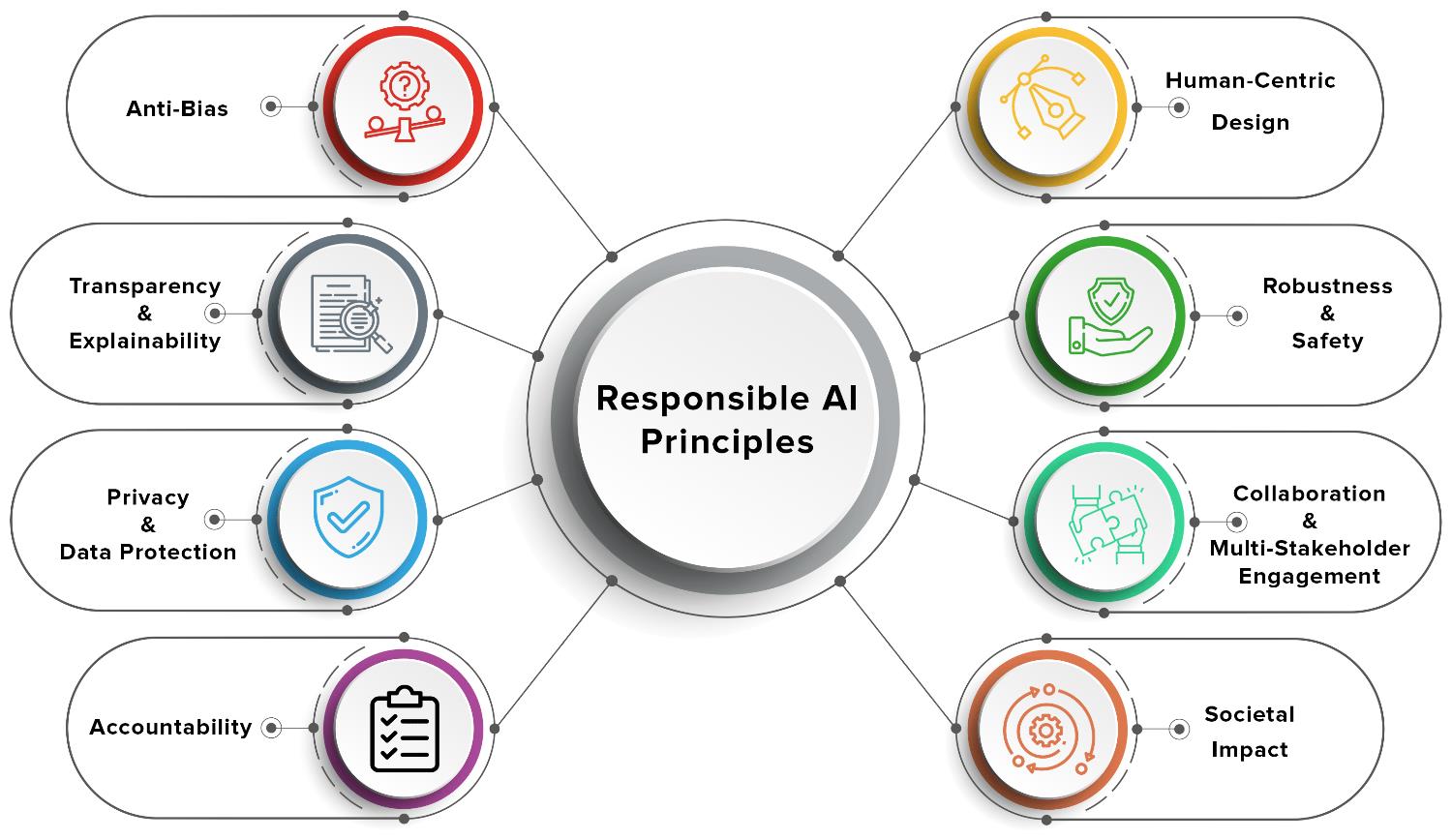

You’ll gain insights into the seven key requirements for trustworthy AI, core ethical principles for everyday users, the privacy and security risks associated with AI prompting, and the impact of AI on individuals and society. Through real-world examples and practical guidance, you’ll develop a deep understanding of the ethical and security considerations surrounding everyday AI and be empowered to actively participate in shaping the responsible development and deployment of these transformative technologies.

- 9.1 The 7 Key Requirements for Trustworthy AI

- 9.2 AI Ethics for Everyday Users

- 9.3 Prompting and Privacy Concerns

- 9.4 Prompting and Security Challenges

- 9.5 AI’s Impact on Individuals and Everyday Life

- 9.6 Summary

9.1 The 7 Key Requirements for Trustworthy AI

The European Commission’s High-Level Expert Group on Artificial Intelligence (AI HLEG) presented the Ethics Guidelines for Trustworthy AI in 2019. The guidelines set out seven key requirements that AI systems should meet to be considered trustworthy:

- Human Agency and Oversight: AI should empower human decision-making and support fundamental rights. Mechanisms like human-in-the-loop, human-on-the-loop, and human-in-command ensure proper oversight.

- Technical Robustness and Safety: AI must be secure, reliable, and resilient, with fallback plans to prevent and minimize harm.

- Privacy and Data Governance: AI should respect privacy and data protection, ensuring high-quality data governance and legitimate data access.

- Transparency: AI systems should be transparent, with traceability and explainability for their decisions. Users must be aware they are interacting with AI and informed of its capabilities and limitations.

- Diversity, Non-discrimination, and Fairness: AI should avoid unfair bias, be accessible to all, and involve stakeholders throughout its lifecycle.

- Societal and Environmental Well-being: AI should benefit all people, be sustainable, environmentally friendly, and consider its social impact.

- Accountability: There should be mechanisms for accountability, including auditability and redress options.
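The human oversight and accountability requirements can be made concrete with a small sketch. The Python below is a hypothetical review gate, assuming a model that returns a label together with a confidence score: decisions below an assumed confidence threshold are handed to a person, and every decision is written to an audit log. The class name `OversightGate`, the 0.9 threshold, and the `reviewer` callback are illustrative assumptions. This is only one simple pattern for keeping a person involved, not the guidelines’ formal definition of human-in-the-loop, human-on-the-loop, or human-in-command.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Decision:
    case_id: str
    outcome: str
    decided_by: str  # "model" or "human"


@dataclass
class OversightGate:
    """Hypothetical review gate: the model acts alone only when it is confident."""
    threshold: float  # assumed confidence cut-off, chosen for illustration
    audit_log: List[str] = field(default_factory=list)

    def decide(self, case_id: str, model_label: str, confidence: float,
               ask_human: Callable[[str, str], str]) -> Decision:
        if confidence >= self.threshold:
            decision = Decision(case_id, model_label, "model")
        else:
            # Below the threshold a person makes the final call and may overrule the model.
            decision = Decision(case_id, ask_human(case_id, model_label), "human")
        # Record every decision so it can be audited and, if needed, contested later.
        self.audit_log.append(
            f"{case_id}: model suggested {model_label!r} ({confidence:.2f}), "
            f"final {decision.outcome!r} by {decision.decided_by}"
        )
        return decision


def reviewer(case_id: str, suggestion: str) -> str:
    """Hypothetical stand-in for a real human reviewer; here it always approves."""
    return "approve"


gate = OversightGate(threshold=0.9)
first = gate.decide("A-1", "deny", 0.95, reviewer)
second = gate.decide("A-2", "deny", 0.60, reviewer)
print(first.decided_by, first.outcome)    # model deny
print(second.decided_by, second.outcome)  # human approve
print(gate.audit_log[1])  # A-2: model suggested 'deny' (0.60), final 'approve' by human
```

Raising the threshold routes more cases to people, trading speed for oversight; the audit log supports the auditability and redress mechanisms named under Accountability.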

Additional reading:

- European Commission: Ethics Guidelines for Trustworthy AI (https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai)

- DOD Release: DOD Adopts Ethical Principles for Artificial Intelligence (https://www.defense.gov/News/Releases/Release/Article/2091996/dod-adopts-ethical-principles-for-artificial-intelligence/)

9.2 AI Ethics for Everyday Users

As AI becomes more prevalent, understanding its ethical implications is crucial. Everyday users must recognize potential biases, demand transparency, hold developers accountable, protect their privacy, and advocate for beneficial AI.

Key Ethical Principles

Building on the seven key requirements, the core ethical principles for everyday users include:

- Fairness and Bias: Avoid discrimination by using diverse datasets and regular audits.

- Trust and Transparency: Provide clear explanations for AI decisions.

- Accountability: Establish responsibility and mechanisms for redress.

- Privacy and Security: Protect personal data and adhere to privacy regulations.
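The “regular audits” mentioned under Fairness and Bias can start with something as simple as comparing outcome rates across groups. This Python sketch computes each group’s selection rate and the ratio of the lowest rate to the highest, then applies the four-fifths (80%) rule of thumb from US employment-selection guidance as a flag. The audit data, group labels, and function names are hypothetical, and a single ratio is only a first screen, not proof that a system is or is not fair.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes (e.g. 'approved') within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity to measure
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, was the applicant approved?)
decisions = ([("A", True)] * 8 + [("A", False)] * 2
             + [("B", True)] * 5 + [("B", False)] * 5)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'A': 0.8, 'B': 0.5}
print(round(ratio, 3))  # 0.625
# Four-fifths rule of thumb: a ratio below 0.8 is a signal to investigate further.
print("flag for review" if ratio < 0.8 else "no flag")
```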

9.3 Prompting and Privacy Concerns

AI prompting, the process of interacting with AI systems through natural language inputs, has become increasingly popular. However, it raises significant privacy concerns, as users may inadvertently share sensitive personal information.

Potential Risks of Sharing Sensitive Information During Prompting

When engaging in AI prompting, users may unknowingly reveal sensitive personal information, such as their location, financial details, health status, or political views. This information can be exploited by malicious actors or used for purposes beyond the user’s original intent.

- Identity Theft and Fraud: Malicious actors may use personal information obtained through AI prompting to impersonate users or commit fraudulent activities.

- Targeted Advertising and Manipulation: Companies may use personal information gathered through AI prompting to deliver targeted advertisements or manipulate user behavior.

- Reputational Damage: Sensitive information shared during AI prompting may be used to damage a user’s reputation or cause social and professional harm.

- Surveillance and Profiling: Governments or organizations may use AI prompting data to surveil and profile individuals or groups without their consent.

Best Practices for Protecting Personal Data

To mitigate the privacy risks associated with AI prompting, users can adopt several best practices for protecting their personal data.

- Be Cautious About Sharing Sensitive Information: Users should be mindful of the information they share during AI prompting and avoid revealing unnecessary personal details.

- Use Privacy-Focused AI Platforms: When possible, users should opt for AI platforms that prioritize privacy and have strong data protection measures in place.

- Review Privacy Policies and Settings: Users should carefully review the privacy policies of AI platforms and adjust their privacy settings to limit the collection and sharing of personal data.

- Employ Privacy-Enhancing Techniques: Users can apply techniques such as data minimization, anonymization, and encryption to protect their personal information when interacting with AI systems.
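Data minimization can be partly automated by stripping obvious identifiers from a prompt before it is sent. The Python sketch below uses simple regular-expression redaction as one concrete stand-in for the general techniques listed above. The two patterns (email addresses and phone-number-like digit runs) and the `redact` function are illustrative assumptions: they will miss many kinds of personal data, such as names or addresses, and can over-match things like hyphenated dates.

```python
import re

# Illustrative patterns only (assumptions); real personal data takes many more forms.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # Runs of 9+ digits/separators that start and end with a digit; crude, and may
    # also catch things like hyphenated dates.
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(prompt: str) -> str:
    """Replace each match with a placeholder label before the prompt is sent."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


text = "Email me at jane.doe@example.com or call +1 (555) 123-4567 about my results."
print(redact(text))
# Email me at [EMAIL] or call [PHONE] about my results.
```

A filter like this is a safety net, not a substitute for the first practice: the most reliable protection is not typing the sensitive detail in the first place.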

Additional reading:

- Reuters: The privacy paradox with AI by Gai Sher and Ariela Benchlouch (https://www.reuters.com/legal/legalindustry/privacy-paradox-with-ai-2023-10-31/)

- The National Law Review: Under the GDPR, What Information Should an Organization that Transmits Personal Data in AI Prompts Put in Its Privacy Notice? by David A. Zetoony (https://natlawreview.com/article/under-gdpr-what-information-should-organization-transmits-personal-data-ai-prompts)

- University of Illinois: Privacy Considerations for Generative AI by Dana Mancuso (https://cybersecurity.illinois.edu/privacy-considerations-for-generative-ai/)

- Wired: How to Use Generative AI Tools While Still Protecting Your Privacy by David Nield (https://www.wired.com/story/how-to-use-ai-tools-protect-privacy/)

- Generative AI – Privacy Risks & Challenges by Fieldfisher Data & Privacy Team (https://youtu.be/MWyatCRbet4)

9.4 Prompting and Security Challenges

In addition to privacy concerns, AI prompting also poses significant security challenges that everyday users should be aware of. As AI systems become more sophisticated and widely used, they become increasingly attractive targets for malicious actors seeking to exploit vulnerabilities or manipulate outcomes.

Security Risks of Using AI

AI platforms that offer prompting capabilities, such as those from OpenAI, Anthropic, and Google, may be vulnerable to various security risks:

- Data Breaches: AI platforms may be targeted by hackers seeking to steal sensitive user data or proprietary information.

- Model Poisoning: Malicious actors may attempt to manipulate AI models by injecting false or misleading data during the training process.

- Adversarial Attacks: AI systems may be vulnerable to adversarial examples, which are inputs specifically designed to fool or mislead the model.

- API Exploitation: Attackers may exploit vulnerabilities in AI platform APIs to gain unauthorized access or manipulate system behavior.
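An adversarial example can be demonstrated with a toy linear classifier. In this hypothetical Python sketch, a made-up spam filter scores three numeric features; nudging each feature by a small amount in the direction that lowers the score flips the prediction. This is the idea behind the fast gradient sign method, specialized to a linear model whose gradient is simply its weight vector. The weights, features, and `epsilon` value are invented for illustration; attacks on real models operate on much larger inputs such as images or text.

```python
# Hypothetical toy model: a linear "spam filter" over three numeric features.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.5


def score(x):
    """Linear score; a positive value means the model says 'spam'."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    return "spam" if score(x) > 0 else "not spam"


def sign(v):
    return (v > 0) - (v < 0)


def perturb(x, epsilon):
    """Shift every feature by epsilon in the direction that lowers the score.

    For a linear model the gradient of the score is just WEIGHTS, so this is
    the fast-gradient-sign idea applied to a toy example.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


original = [1.0, 0.5, 0.4]
tweaked = perturb(original, epsilon=0.4)
print(predict(original), round(score(original), 2))  # spam 1.2
print(predict(tweaked), round(score(tweaked), 2))    # not spam -0.2
print([round(v, 2) for v in tweaked])                # [0.6, 0.9, 0.0]
```

No feature moved by more than 0.4, yet the label changed, which is why small, carefully chosen input changes are a security concern.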

How to Minimize Security Risks

To minimize security risks when using AI prompting platforms, everyday users can follow several best practices.

- Use Strong, Unique Passwords and Enable Two-Factor Authentication: This helps protect accounts from unauthorized access.

- Be Cautious When Sharing Sensitive Information: Only share sensitive information on secure, trusted platforms.

- Keep Software and Security Protocols Up to Date: Regular updates help protect against known vulnerabilities.

- Monitor Accounts for Unusual Activity: Report any suspicious behavior to platform providers.

- Educate Yourself About Common Security Threats: Stay informed about current threats and learn how to recognize and avoid them.
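For the first practice, a password manager usually generates passwords, but the underlying idea is easy to show. This Python sketch uses the standard-library `secrets` module, which is intended for security-sensitive randomness (unlike `random`), to produce a random password containing lowercase, uppercase, digit, and punctuation characters. The 20-character default, the function name `strong_password`, and the account names are assumptions for illustration.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits + string.punctuation


def strong_password(length: int = 20) -> str:
    """Random password with at least one lowercase, uppercase, digit, and symbol."""
    if length < 4:
        raise ValueError("length must be at least 4 to include every character class")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


# A separate password for each (hypothetical) account, so one leak does not expose the others.
for account in ["email", "ai-assistant", "bank"]:
    print(account, strong_password())
```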

Additional reading:

- Responsible Prompting for Responsible AI. Mastering The PROMPT Framework for Informed AI Use by Kevin Hanegan (https://www.turningdataintowisdom.com/responsible-ai-prompting/)

- GoDaddy: Risks of AI and practicing responsible use by Amy K (https://www.godaddy.com/resources/skills/risks-of-ai-and-practicing-responsible-use)

9.5 AI’s Impact on Individuals and Everyday Life

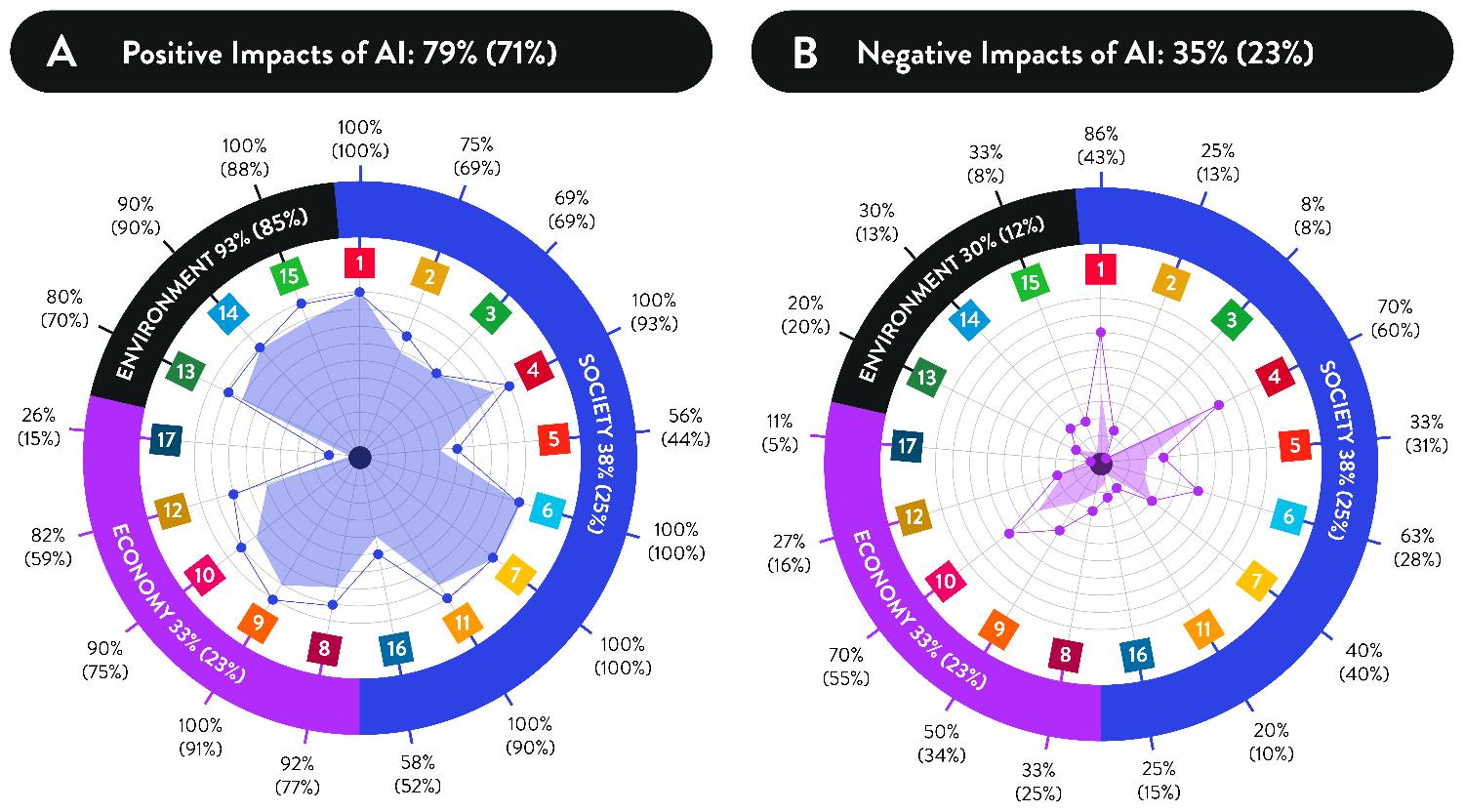

As AI technologies continue to advance and become more integrated into our daily lives, it is essential to consider the profound impact they may have on individuals and society as a whole.

9.5.1 The Positive Potential of AI

AI has the potential to significantly enhance productivity, creativity, and decision-making, and to bring broader benefits to health, the environment, and accessibility:

- Workplace: AI-powered tools can streamline workflows, automate repetitive tasks, and provide real-time analytics to support data-driven decisions.

- Creative Fields: AI can assist with ideation, content generation, and personalized recommendations, enabling individuals to explore new possibilities and push creative boundaries.

- Personal Life: AI can help individuals make better-informed decisions by providing personalized insights, recommendations, and risk assessments based on their unique circumstances.

- Improved Mental Health and Well-being: AI applications in mental health, such as chatbots that deliver techniques drawn from cognitive behavioral therapy (CBT), can offer immediate support and interventions, potentially improving mental health outcomes and increasing access to mental health resources.

- Environmental Monitoring and Conservation: AI plays a growing role in tracking and analyzing environmental changes, predicting natural disasters, and aiding conservation efforts. By processing large datasets from various sensors, AI can provide early warnings and insights that help mitigate environmental impacts and protect ecosystems.

- Accessibility Enhancements: AI significantly improves accessibility for people with disabilities. Examples include real-time speech-to-text captioning for people who are deaf or hard of hearing, real-time translation services, and AI-driven assistive technologies that support mobility and communication.

9.5.2 The Negative Impact of AI

Despite the numerous potential benefits, the widespread adoption of AI also presents several challenges and risks, such as:

- Job Displacement and Skill Obsolescence: As AI automation displaces certain jobs, it can lead to unemployment and the need for extensive upskilling. This can cause economic and social disruption, particularly for vulnerable populations.

- Privacy and Security Risks: The widespread adoption of AI raises concerns about data privacy and security. The collection and processing of personal data by AI systems can lead to potential misuse, data breaches, and erosion of individual privacy.

- Bias and Discrimination: AI models may perpetuate or amplify societal biases, leading to unfair treatment of certain individuals or groups. This can result in discriminatory practices in areas such as hiring, lending, and criminal justice.

- Overreliance and Loss of Autonomy: As individuals become increasingly reliant on AI systems for decision-making and daily tasks, there is a risk of eroding critical thinking skills and personal agency. This can lead to a loss of autonomy and a sense of dependence on AI technologies.

- Ethical Concerns and Lack of Transparency: The development and deployment of AI systems often lack transparency, making it difficult to understand how decisions are made. This can lead to ethical concerns, particularly in sensitive domains such as healthcare and criminal justice.

Additional reading:

- A Double-Edged Sword: The Benefits and Risks of AI in Business by Eckert Seamans (https://www.eckertseamans.com/legal-updates/a-double-edged-sword-the-benefits-and-risks-of-ai-in-business)

- The Future of Work in the Age of Artificial Intelligence by Philanthropy Roundtable (https://youtu.be/aG-wUzRxIiI)

- The White House: Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/)

9.6 Summary

AI technologies offer significant benefits but also pose ethical and security challenges. Understanding the seven key requirements for trustworthy AI (human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination, and fairness; societal and environmental well-being; and accountability) helps mitigate risks and promote responsible AI use. By staying informed and actively participating in ethical discussions, individuals can help shape a beneficial AI future.