Search Engine Myths and Misconceptions

It’s easy to develop misconceptions about how the search engines operate. They don’t provide specific answers to webmaster questions (for fear of disclosing their algorithm) and it seems that every SEO forum has a different opinion of what’s “what.” For the beginner, this lack of information can cause confusion. The truth is – nobody knows for sure what’s “what,” except the search engines themselves.

In this module, I’ll try to clarify the real story behind some of the myths…

Search Engine Submission

In the late 1990s, search engines had “submission” forms that webmasters could use to submit their websites for inclusion. Webmasters would tag their sites and pages with keyword information and “submit” them, hoping a search spider would crawl the pages and add them to the index. This was the normal optimization process: simple SEO!

This submission process didn’t scale very well and the submissions were often spam, so the practice eventually gave way to purely crawl-based engines. Since 2001, search engines have publicly noted that they rarely use “submission” URLs, and that the best practice is to earn links from other sites.

Submission forms are still available online (here’s one for Bing), but they are essentially useless in the modern SEO era. Even if search engines used the submission service to crawl your website, it would be unlikely that your website would be included in the index or earn enough “link juice” for ranking purposes.

Meta Tags

Long ago, meta tags (in particular – the meta keywords tag) were an important element in the SEO process. Webmasters would include the keywords they wanted their website to rank for and, as search query users typed in those terms, their page could come up in the top of the query results. The potential for spamming was wide open and eventually search engines stopped using these indicators as an important ranking signal.

The title tag and meta description tag (often grouped with meta tags) are critically important to SEO best practices. The meta robots tag is also important for controlling search spider access. However, SEO is not “all about meta tags”, at least, not anymore.
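As a rough illustration of the three tags mentioned above, the following sketch pulls the title, meta description, and meta robots values out of a page’s HTML using only Python’s standard library. The names `HeadTagParser` and `extract_head_tags` are hypothetical helpers made up for this example, and the sample markup is invented; this is only a simple way to inspect a page, not a description of how any search engine reads these tags.

```python
from html.parser import HTMLParser


class HeadTagParser(HTMLParser):
    """Collects the <title> text and any <meta name="..." content="..."> pairs."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name"):
            # Store meta tags by lowercased name, e.g. "description" or "robots".
            self.meta[attrs["name"].lower()] = attrs.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_head_tags(html):
    """Return the title, meta description, and meta robots values (None if absent)."""
    parser = HeadTagParser()
    parser.feed(html)
    return {
        "title": parser.title.strip(),
        "description": parser.meta.get("description"),
        "robots": parser.meta.get("robots"),
    }


sample = (
    "<html><head><title>Las Vegas Hotels</title>"
    '<meta name="description" content="Find hotels on the Strip.">'
    '<meta name="robots" content="noindex, nofollow">'
    "</head><body></body></html>"
)
tags = extract_head_tags(sample)
```

Running a check like this across a site is one quick way to spot pages with a missing title, an empty description, or an unintended `noindex` directive.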

Keyword Density

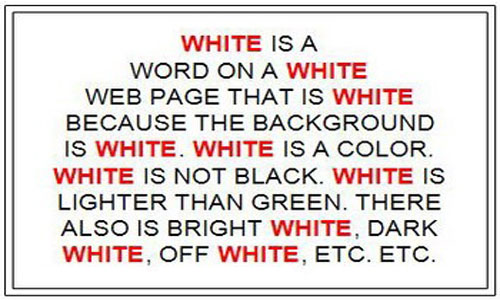

Another persistent myth in the SEO world is keyword density. Have you ever seen a website with keywords mentioned so frequently that the web page just looks spammy? Not surprisingly, many SEOs still believe that keyword density is an important ranking factor.

This myth has been dispelled many times so don’t even consider it. Instead, write content using your keywords naturally and intelligently with your audience in mind. The value from great content that earns editorial links naturally will reward you far greater than using your keyword 20 times on a page.

Keyword density is a mathematical formula that divides the number of instances of your keyword by the total number of words on the page.
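For reference, here is a minimal sketch of that calculation: keyword occurrences divided by total words. The function name `keyword_density` and the simple word-splitting rule are assumptions made for this example (tools differ in how they tokenize text and count multi-word phrases), and it is shown only to make the formula concrete, not as something worth optimizing for.

```python
import re


def keyword_density(text, keyword):
    """Return occurrences of `keyword` divided by the total word count of `text`."""
    # Assumed tokenization: lowercase runs of letters, digits, and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Count every position where the keyword phrase appears word for word.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits / len(words)


density = keyword_density("Las Vegas hotels in Las Vegas", "Las Vegas")
```

In this sample the phrase appears twice in six words, so the density is 2/6, or about 33%.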

Do’s and Don’ts

Since the beginning of search, people have abused keywords trying to manipulate the search results. The most common type of abuse involves reusing the same keywords in text, the URL, meta tags and links. This is known as “keyword stuffing” and it usually does more harm than good to your website.

The best practice is to use your keywords naturally and strategically. If your page targets the keyword phrase “Las Vegas” then you might naturally include content about Las Vegas, such as the history of the city or even recommended hotels. On the other hand, if you simply sprinkle the words “Las Vegas” onto a page with irrelevant content, such as a page about balloon animals, then your efforts to rank for “Las Vegas” will be a long battle.

Paid Search Myths

Of all the SEO myths, the idea that spending on paid search advertising improves your organic rankings is the most common. Yet it has never been proven, nor is it a probable explanation for how the organic results are ordered. Imagine the backlash if this were possible… As a result, Google, Yahoo! & Bing all keep their advertising programs walled off from organic search to prevent any influence on the organic results.

Search Engine Spam

With payouts for top positions in some search queries running to tens of thousands per day, it’s no wonder that manipulating the engines is such a popular activity. Whether it’s creating pages designed to artificially inflate rankings or the pursuit of schemes designed to manipulate the ranking algorithms, search engine spam is here to stay.

For most SEOs, this path is not the recommended course of action, as search engines have made it increasingly difficult for these techniques to work. Your best choice for long term success is to pursue webmaster best practices, for two reasons.

1. Not Worth Your Time

Search query users hate spam, and search engines make hundreds of millions each year from their search products. Undoubtedly, they want to protect their investment and will spend a great deal of time, effort and resources to block spam and produce great query results.

Occasionally, a spam method works, but such methods generally take more effort to succeed than producing “good” content, and the long term payoff is non-existent. Instead of putting your time and effort into something that search engines will block, why not invest in a long term strategy instead?

2. Search Engines are Evolving

Search engines have come a long way in their fight against spam. Their ability to identify spam at large scale, using complex algorithms that incorporate concepts like trust, authority, history, and more, has made it increasingly difficult for webmasters to spam.

In 2011, Google introduced sophisticated machine learning algorithms to combat spam and low value web pages at a scale never before witnessed online (the Panda update). Google reported that the initial update noticeably affected about 12% of search queries, and large numbers of low quality web pages dropped in the rankings.

All of these efforts by search engines have made webmaster best practices far more attractive. Manipulative techniques typically do not help webmasters in ranking their pages and more often, they cause search engines to impose penalties against them. For additional details about spam, visit Google’s Webmaster Guidelines and read Bing’s Webmaster FAQs (PDF download).

Page Level Spam

Search engines are able to perform spam analysis across individual pages and entire websites (domains) very efficiently. First, let’s look at how they evaluate manipulative practices at the URL level.

Keyword Stuffing

The idea that increasing the number of times a term is mentioned on a web page can boost the page’s ranking is generally false. Studies looking at thousands of the top search results across different queries have found that keyword repetitions play an extremely limited role in boosting rankings and have a low overall correlation with top rankings.

Search engines have very effective ways of fighting this type of spam. Scanning and analyzing pages for stuffed keywords is not challenging and their algorithms are up to the task. You can read more about this practice, and Google’s views on the subject, in a blog post from Matt Cutts, head of Google’s web spam team: SEO Tip: Avoid Keyword Stuffing.

Back Linking Schemes

Link acquisition is one of the most common forms of web spam since link popularity is a key metric in search engine algorithms. By manipulating link acquisitions, webmasters are attempting to artificially inflate their metrics and receive high rankings and website visibility.

Because there are so many ways to manipulate links, search engines have difficulty in processing link spam. A few of the ways to manipulate links include:

- Reciprocal link exchanges involve the creation of reciprocal links between two (or more) websites. Sites create link pages that point back and forth to one another in an attempt to inflate link popularity. Search engines are very good at spotting and devaluing these links as they fit a very particular pattern.

- Link schemes, including “link farms” and “link networks” involve the creation of fake or low value websites built or maintained purely as link sources to artificially inflate website popularity. Search engines combat these links through analysis of the different connections between site registrations, link overlap and other common factors.

- Paid links involve the practice of purchasing links from websites willing to place a link in exchange for funds in an attempt to earn higher rankings. Even though search engines work hard to stop this practice (Google in particular), paid link schemes continue providing value. Rand Fishkin wrote a relevant post: Paid Links – Can’t Be a White Hat With ’em, Can’t Rank Without ’em.

- Low quality directory links are a frequent source of manipulation. Large numbers of pay-for-placement web directories exist to serve this market and Google takes action against them by reducing their PageRank score.

As search engines become more knowledgeable about linking schemes, they take appropriate actions to reduce the effect. Algorithm adjustments, human reviews, and the collection of spam reports supplied by webmasters & SEOs all contribute to the fight against spam.
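To make the reciprocal link pattern described above concrete, here is a minimal sketch that finds pairs of sites linking to each other in a small link graph. The function name `find_reciprocal_links` and the sample domains are invented for illustration. Real search engines work with far larger graphs and many more signals, so treat this as a toy model of the idea rather than how any engine actually detects link exchanges.

```python
def find_reciprocal_links(links):
    """Return sorted (site_a, site_b) pairs where each site links to the other.

    `links` is an iterable of (source, destination) tuples.
    """
    # Ignore self-links and collapse duplicate edges.
    edges = {(src, dst) for src, dst in links if src != dst}
    # A pair is reciprocal when the reverse edge is also present.
    pairs = {tuple(sorted((src, dst))) for src, dst in edges if (dst, src) in edges}
    return sorted(pairs)


sample_links = [
    ("site-a.example", "site-b.example"),
    ("site-b.example", "site-a.example"),  # reciprocal with the line above
    ("site-a.example", "site-c.example"),  # one-way link
]
reciprocal = find_reciprocal_links(sample_links)
```

Here only the first two sites are reported, since the link to the third site is never returned.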

Cloaking

Cloaking is the process of showing human visitors one version of a web page and search engine spiders a different version. Cloaking can be accomplished in a variety of ways and for a variety of reasons (positive and negative). In some cases, search engines may allow web pages that are technically “cloaking” for positive user experience reasons. Rand Fishkin wrote this article: White Hat Cloaking, which explains the topic, as well as the levels of risk associated with this tactic.

Low Quality Page Content

Although they are technically not “web spam,” “thin” affiliate content, duplicate content, and dynamically generated content pages can be penalized. Using content and link analysis algorithms, search engines are able to filter poor quality, “low value” pages out of their results.

Google has been especially combative against low quality pages. Its 2011 Panda update was one of the most aggressive attempts ever seen at reducing low quality content, and Google continues to update this process.

Domain Spam

In addition to watching individual web pages for spam, search engines can also identify traits and properties across entire root domains (or subdomains) that could flag them as potential spam websites. Excluding entire domains is more practical in cases where greater scalability is required.

Shady Practices that can Hurt your Website

Search engines monitor the quality and types of links a website receives. Webmasters who engage in manipulative activities consistently or at a seriously damaging scale may see their search traffic suffer or have their sites removed from the search index. You can read about websites that were penalized for manipulative practices in these high profile examples: Widgetbait Gone Wild, the more recent J.C. Penney penalty, or this collection of top brands that received Google penalties.

Trustworthiness

A “double standard” exists for judging “big brand” and high importance sites versus newer independent sites: websites that have earned trusted status are often treated differently from those that have not.

If someone were to create a website that only published low quality, duplicate content and then bought links from spammy directories, this website would probably have a difficult time ranking. The reason is that this site has yet to earn any high quality links, so search engines may judge it more strictly from an algorithmic view.

If an important website (like Wikipedia, CNN, WebMD) were to publish the exact same content and buy the exact same links, the site would likely continue ranking very well. A little duplicate content and a few suspicious links are likely to be overlooked as these websites have earned hundreds of links from high quality editorial sources.

This is the power of domain trust and authority.

Content Value

Webmasters need to do more than just rank a website for a search query. They need to prove themselves over and over again as search engines continually try to improve their user experience by modifying the search query results. Today, webmasters must provide unique, relevant, quality content that their audience wants.

Websites that primarily serve non-unique, non-valuable content will find it difficult to rank even if on-page and off-page SEO factors are performed acceptably. Search engines are using algorithmic and manual review methods to prevent thousands of low quality sites from filling up their indexes.

Site Errors, Content Changes, and Duplicate Content

It can be tough to know if your website or page has a penalty or if something has changed, either the search engines’ algorithms or on your site, that has negatively impacted the rankings or inclusion. Before you assume it’s a penalty – rule out other problems:

Rule Out Other Problems

Errors – Errors on the website may be inhibiting search engines from crawling the website. Google’s Search Console is a good place to start.

Changes – Changes to the website or pages may have changed the way search engines view the content (on-page changes, internal link structure changes, content moves, etc.).

Similarity – When search engines update their ranking algorithms, websites that share similar backlink profiles can lose rankings as the websites’ link valuation and importance shift.

Duplicate Content – Modern websites are rife with duplicate content problems especially if the website has grown to a large size. Check out this post on duplicate content to identify common problems.

This chart will not work for every situation, but the logic has been useful in identifying spam penalties or mistaken flagging for spam by search engines versus basic ranking drops.
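When checking for the duplicate content problems mentioned above, one common way to compare two pages is to break each into overlapping word sequences (“shingles”) and measure how many they share. The sketch below uses Jaccard similarity over three-word shingles. The function names, the shingle size, and any threshold you might apply are assumptions made for this example, not something search engines have published.

```python
import re


def shingles(text, size=3):
    """Return the set of overlapping `size`-word sequences in `text`."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        # Very short texts are treated as a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a, text_b, size=3):
    """Return shared shingles divided by total distinct shingles (0.0 to 1.0)."""
    set_a, set_b = shingles(text_a, size), shingles(text_b, size)
    if not set_a or not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)


page_one = "Book cheap hotels in Las Vegas near the Strip"
page_two = "Book cheap hotels in Las Vegas close to downtown"
similarity = jaccard_similarity(page_one, page_two)
```

A score near 1.0 suggests two pages are near copies of each other, while a score near 0.0 suggests they share little wording.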

Getting Penalties Lifted

Requesting reconsideration or re-inclusion in a search engine is often unsuccessful, and a denial is rarely accompanied by any feedback to let you know what happened or why.

Here are some recommendations to follow should you find yourself dealing with a penalty or banning:

- If you haven’t already, register your site with the search engine’s Webmaster Tools service (Google’s and Bing’s). By registering the website, you’ve created an additional layer of trust and connection between your site and the webmaster teams.

- Make sure to thoroughly review the data available in the Webmaster Tools account. Often, what’s perceived as a mistaken spam penalty is, in fact, related to accessibility issues like broken pages, server or crawl errors, and/or warnings or spam alert messages.

- Send your request through the search engine’s Webmaster Tools service rather than the public form. Again, this creates a greater layer of trust and a better chance of hearing back.

- Full disclosure is critical to getting consideration. If you’ve been caught spamming, disclose everything you’ve done – links you’ve acquired, how you got them, who sold them to you, etc. Search engines (especially Google) want the details so they can apply the information to their algorithms.

- Prior to requesting consideration, remove and/or fix everything you can. If you’ve acquired bad links, get them taken down – Google offers a “Disavow Links” tool especially for this purpose. If you’ve done any manipulation on your own site (over-optimized internal linking, keyword stuffing, etc.), fix it before you submit your request.

- Be patient – re-inclusion is a lengthy process. Search engines will take weeks, even months to respond as hundreds (maybe thousands) of websites are penalized every week.

- Long shot – If you run a large powerful brand on the web, you might try approaching an individual source at an industry conference or event. Engineers from all of the search engines regularly participate in search industry events and re-inclusion might go more quickly by speaking with one of them versus the standard request.

Web Page Inclusion is a Privilege – Not a Right

Be aware that search engines have no obligation or responsibility to lift a penalty. Legally, they have the right to include or reject any website or page for any reason. As you apply optimization techniques, be cautious and don’t use techniques you’re unsure of; otherwise, you could find yourself in trouble.

READ: The SEO environment is always in a state of flux – from Google updating their algorithm to the mass confusion spread across the hundreds of SEO blogs, forums and user groups. To help you prepare for life as a digital marketer, here is a list of 116 SEO Myths to Leave Behind in 2017: The Complete List, by Vope.net. You already know several of these and this isn’t really all of them, but it is a good list to read through and ramp up your SEO game.

READ: As you’re learning about the positive and negative effects of search engine optimization, faster and more efficient ways to manage SEO campaigns, and (as you get busier) the demanding pressures from clients for instant success – sometimes it’s what you don’t do that’s important. Check out this article by Matt McGee: SEO “Don’ts”: 20 Fatal Mistakes You Must Avoid To Succeed. He offers you a great checklist of don’ts: things to avoid whether you’re doing SEO yourself or having an outside firm do it for you.