Search Engine Abilities and Limitations

An important aspect of search engine optimization is making your website easy for both users and search engine spiders to understand. Although search engines have become increasingly sophisticated, in many ways they still can’t see or understand a web page the same way a human does. SEO helps the search engines figure out what each page is about and how it’s useful for users.

Let’s say you posted a picture of your family fish on your website. A human might describe it as “a small fish, with white and orange stripes with black lines, looks like the cartoon Nemo, and he’s swimming in a tank with white rocks on the bottom.” The best search engine in the world would struggle to understand a photo at anywhere near that level of detail. How do you make a search engine understand a photograph?

Fortunately, webmasters are able to provide “clues” that help search engines understand what the content is. In fact, adding the proper structure to your content is essential.
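One common “clue” for a photograph is the image’s alt text. As a rough illustration, the Python sketch below scans a page fragment and lists the images that give a search engine nothing to read. The file names and the sample HTML are made up for this example, and a real audit would cover more than alt text.

```python
from html.parser import HTMLParser


class ImageAltAuditor(HTMLParser):
    """Collects the src of every <img> tag that has no usable alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # A missing, valueless, or whitespace-only alt counts as "no description".
        alt = (attributes.get("alt") or "").strip()
        if not alt:
            self.missing_alt.append(attributes.get("src", "(no src)"))


def find_images_missing_alt(html):
    """Return the src values of images that lack descriptive alt text."""
    auditor = ImageAltAuditor()
    auditor.feed(html)
    return auditor.missing_alt


# Hypothetical page fragment: the first image describes itself, the second does not.
sample_html = """
<img src="family-fish.jpg" alt="Small orange and white striped fish swimming in a tank with white rocks">
<img src="IMG_0042.jpg">
"""

print(find_images_missing_alt(sample_html))  # ['IMG_0042.jpg']
```

The first image tells a spider roughly what a human would see; the second is just a file name.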

All of the major search engines operate in basically the same way: automated search bots crawl the web, follow the links, and index content into massive databases. Despite this amazing feat, modern search technology is not all-powerful. There are technical limitations that cause problems for websites with both inclusion and rankings.

By understanding the abilities and limitations of search engines, you’ll be able to properly build, format and annotate your web content in a way that the search spiders can understand. Without proper SEO, many websites would remain invisible to search engines.

Spidering and Indexing Problems

Google leads the pack and can index many types of pages and files (you can restrict a search to a particular type with the filetype: operator). However, most search engines are still text-based and will have difficulty reading non-HTML content (including text in Flash files, images, photos, video, audio and plug-in content). This doesn’t mean that you can’t include rich media content such as Flash, Silverlight, or videos on your site; it just means that any content you embed should contain proper markup to explain to search engines “what” this content is.

Google Webmaster Tools has provided an excellent resource regarding the limitations of Flash and other rich media formats.

Other indexing problems include:

- Search engines aren’t good at completing online forms (such as a login), and thus any content contained behind them may remain hidden from spiders trying to index content.

- Websites using a CMS (Content Management System) often create duplicate versions of the same page – a major problem for search engines looking for completely original content.

- Errors in a website’s crawling directives (robots.txt) may lead to blocking search engines from your site entirely.

- Poor website linking structures can lead to many problems, such as preventing search spiders from crawling all of the website’s content or encouraging search spiders to leave the website so content has minimal exposure. These types of errors could result in your content being deemed “unimportant” by the engine’s index.
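To make the robots.txt problem from the list above concrete, here is a small Python sketch using the standard library’s `urllib.robotparser`. The two robots.txt files, the example.com URL and the `can_crawl` helper are invented for illustration; the point is only how a single character can change what a well-behaved crawler is allowed to fetch.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# What the site owner meant: keep crawlers out of the admin area only.
intended = """User-agent: *
Disallow: /admin/
"""

# What was actually deployed (hypothetical mistake): the whole site is blocked.
mistaken = """User-agent: *
Disallow: /
"""

page = "https://www.example.com/products/fish-tanks.html"

print(can_crawl(intended, page))  # True
print(can_crawl(mistaken, page))  # False
```

Running a check like this against a few important URLs before publishing a robots.txt change is one cheap way to catch an accidental site-wide block.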

Content to Query Matching

Mixed messages send confusing signals to search engines and can result in penalties for your website.

- Text content that is poorly written, that vaguely describes complex subjects, or that is not written in the common terms people search with can confuse the search engines. For example, writing about “food cooling units” when people actually search for “refrigerators”.

- Language and internationalization subtleties. For example, color vs colour. When in doubt, check what your audience is searching for and use exact matches in your content.

- Location targeting, such as targeting content in English when the majority of the people who would visit your website are located in China.

- Mixed contextual signals. For example, the title of your blog post is “Mexico’s Best Coffee” but the post itself is about a vacation resort in Canada which happens to serve great coffee.
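The “mixed contextual signals” case above can be approximated with a very crude check: how many of the title’s keywords actually appear in the body? The Python sketch below is only an illustration of that idea. The stopword list, the helper names and the sample text are assumptions made for this example, and it is not how any search engine scores relevance.

```python
import re

# Tiny, hypothetical stopword list; a real one would be much longer.
STOPWORDS = {"the", "and", "for", "with", "that", "this", "which", "about"}


def keywords(text):
    """Lowercase the text and keep words of 3+ letters that are not stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return {w for w in words if len(w) > 2 and w not in STOPWORDS}


def title_coverage(title, body):
    """Fraction of the title's keywords that also appear in the body (0.0 to 1.0)."""
    title_terms = keywords(title)
    if not title_terms:
        return 0.0
    return len(title_terms & keywords(body)) / len(title_terms)


title = "Mexico's Best Coffee"
off_topic = "Our vacation resort in Canada happens to serve great coffee every morning."
on_topic = "We tasted the best coffee Mexico has to offer."

print(round(title_coverage(title, off_topic), 2))  # 0.33
print(round(title_coverage(title, on_topic), 2))   # 1.0
```

A low score does not prove a page is off topic, but it is a quick prompt to reread the title against the post.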

Marketing: Relevance and Importance

There is more to SEO than getting the technical details of “SEO friendly” web design correct – it’s also about marketing.

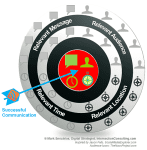

This is the most important concept to grasp about the functionality of search engines. Search engines rely on metrics of relevance and importance in order to display results, but no matter how perfect your website is, it will remain invisible unless you promote it.

Unfortunately, search engines by themselves are unable to gauge the quality of your website’s content – only humans have the capacity to do this.

They do it by:

- linking to your web pages

- commenting on your website and other sites

- reacting to the calls to action you’ve placed within your content

- visiting the wonderful content on your website

Ultimately – It’s About Marketing

Content must be shared and talked about! Search engines are able to do a fantastic job of promoting content on websites that have become popular, BUT search engines are not responsible for generating that popularity – and that’s the part where ‘marketing’ comes in!

The Future of Search – Change is the Only Constant

A lot has changed since search marketing began…

- In the mid-1990s – manual submission, the meta keywords tag and keyword stuffing were all regular tactics used to rank in search.

- In the early 2000s – link bombing with anchor text, buying hordes of links from automated blog comment spam and the construction of link farms were the popular methods for gaining traffic.

- In 2011 – social media marketing, inbound marketing and vertical search inclusion became the mainstream methods for sharing content and building traffic.

- In 2012 – blackhat techniques began to emerge in the mainstream as a way to rank websites quickly. Link spam (automated backlink building), webpage cloaking and paid link building became the battle search engines had to fight.

- In 2013 – spammy link building tactics continued, but with a twist: paid guest blogging became the newest weapon in the fight for ranking dominance.

- In 2014 – as SEOs watched Google respond to the new wave of blackhat techniques with new penalties and algorithm changes, a new industry was born: negative SEO.

- In 2015 – SEOs, tired of combating Google updates, switched tactics and began focusing on fundamentals like quality posts, engaging in social media, and PPC advertising.

- In 2016 – automation saw a resurgence, but this time on social media (and outside of Google’s reach): chatbots, auto-publishing, and other blackhat tactics.

- In 2017 – the dawn of AI in digital marketing began…

- In 2018 – the focus returned to the user experience, with mobile-first, voice search, and content leading the pack. Local search also grew in popularity, along with schema, featured snippets, website speed and other technical SEO techniques.

No one knows what the future of search will be – change is the only constant. Until machine learning and AI take over internet marketing, search engine optimization will remain important to those websites that want to gain the attention of visitors (and ultimately customers). The experts with the best knowledge and experience will always have the advantage. To stay on top of your game and gain a better understanding of what the future may include, read this article by Danny Goodwin published on Search Engine Journal: 47 Experts on the Top SEO Trends That Will Matter in 2018.