Module 8: Hands-on Exercises

This module focuses on applying your knowledge through hands-on exercises and demonstrations. You will work with practical examples and real-world scenarios to strengthen your AI prompting skills.

- Goal: Provide practical experience in using AI prompting techniques for real-world problems. Learners will create, refine, and evaluate prompts for various AI tasks.

- Objective: By the end of this module, you will be familiar with crafting and optimizing prompts for tasks like sentiment analysis, text summarization, question answering, image captioning, and bias reduction. You will also learn about the latest AI applications and strategies to stay current in the field.

This module offers an interactive, hands-on learning experience, building on previous knowledge. You will practice zero-shot, few-shot, chain-of-thought, and multimodal prompting techniques on real-world problems and explore methods to reduce bias in AI through prompt engineering. The module features an AI application showcase, demonstrating cutting-edge technologies across various fields. You will gain practical skills in evaluating and improving prompts for optimal results.

- 8.1 Introduction

- 8.2 Zero-Shot Prompting Exercise

- 8.3 Few-Shot Prompting Exercise

- 8.4 Chain-of-Thought Prompting Exercise

- 8.5 Multimodal Prompting Exercise

- 8.6 Prompt Engineering for Bias Reduction Exercise

- 8.7 Prompt Evaluation

- 8.9 Summary

8.1 Introduction

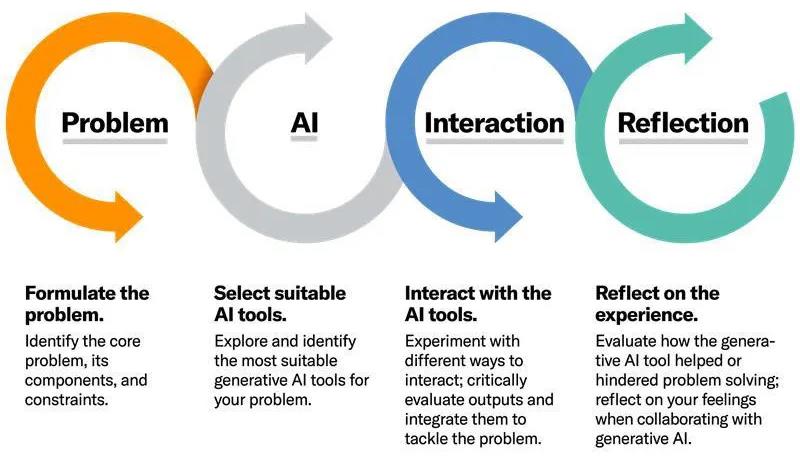

In the previous module, we explored the fundamentals of prompting and learned about various techniques such as zero-shot, few-shot, and chain-of-thought prompting. Now, it’s time to put that knowledge into practice through hands-on exercises and demonstrations.

Hands-on experience is crucial for mastering AI prompting techniques. By engaging in practical exercises, you’ll develop a deeper understanding of how to craft effective prompts and utilize AI platforms to solve real-world problems. Throughout this module, we’ll use relatable examples and analogies to illustrate the concepts behind each prompting technique, making it easier for you to grasp and apply them in your own projects.

8.2 Zero-Shot Prompting Exercise

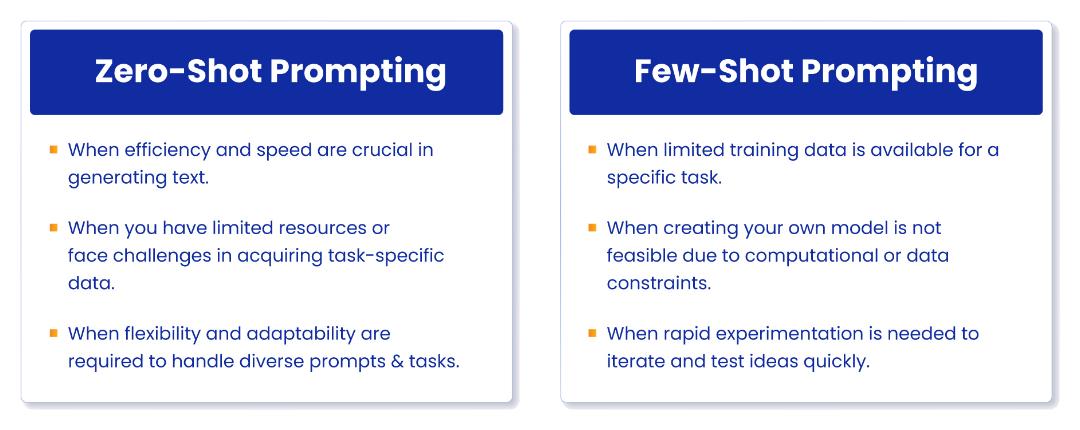

Zero-shot prompting is a technique where you give an AI model a task and input data without any examples of the task being solved. The model relies entirely on the knowledge it acquired during training to generate a response. Let’s explore zero-shot prompting through three practical tasks:

1. Sentiment Analysis

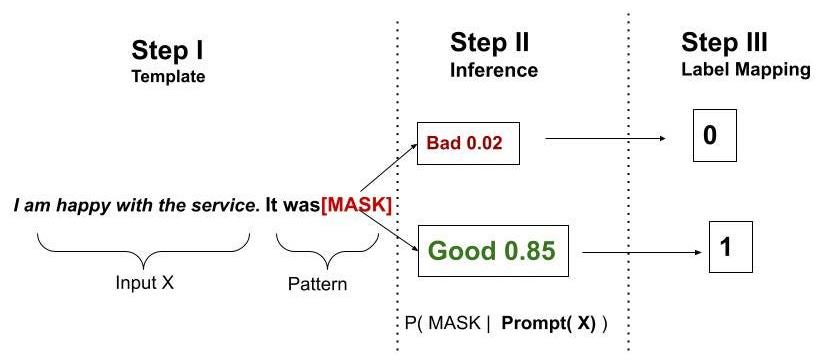

Sentiment analysis involves determining the emotional tone or opinion expressed in a piece of text, such as positive, negative, or neutral. Examples of sentiment analysis tasks include analyzing customer reviews, social media posts, or news articles to gauge public opinion or track brand perception.

Example prompt for sentiment analysis:

“Analyze the sentiment of the following text and classify it as positive, negative, or neutral:

[Insert text snippet to be analyzed]

Sentiment:”

Exercise: Try creating zero-shot prompts for the following text snippets:

- “The movie was fantastic! The acting was brilliant, and the plot kept me engaged from start to finish.”

- “I was disappointed with the service at the restaurant. The food was cold, and the staff was rude.”

Explanation: This prompt explicitly instructs the AI model to analyze the sentiment of the provided text and classify it into one of three categories: positive, negative, or neutral. By specifying the desired output format (“Sentiment:”), the prompt encourages the model to provide a clear and concise response.

Desired outcome: The AI model should accurately identify the sentiment expressed in the given text snippet and provide a classification label (positive, negative, or neutral) based on its analysis.
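You can also run the sentiment exercise from a script instead of a chat window. In that case, fill in the template above programmatically and reduce the model’s reply to one of the three labels. The following is a minimal sketch that assumes you send the prompt to a model of your choice and receive its raw text reply. `build_sentiment_prompt` and `parse_sentiment` are hypothetical helper names, and the sketch does not assume any particular platform API.

```python
import re
from typing import Optional

# Zero-shot template from the example above; {text} marks the snippet.
SENTIMENT_TEMPLATE = (
    "Analyze the sentiment of the following text and classify it as "
    "positive, negative, or neutral:\n\n"
    "{text}\n\n"
    "Sentiment:"
)

LABELS = ("positive", "negative", "neutral")
_LABEL_RE = re.compile(r"\b(" + "|".join(LABELS) + r")\b", re.IGNORECASE)


def build_sentiment_prompt(text: str) -> str:
    """Fill the zero-shot template with the snippet to analyze (no examples)."""
    return SENTIMENT_TEMPLATE.format(text=text.strip())


def parse_sentiment(reply: str) -> Optional[str]:
    """Return the first allowed label found in the model's reply, or None."""
    match = _LABEL_RE.search(reply)
    return match.group(1).lower() if match else None


if __name__ == "__main__":
    snippet = ("The movie was fantastic! The acting was brilliant, and the "
               "plot kept me engaged from start to finish.")
    print(build_sentiment_prompt(snippet))
    # Parse a reply copied from the model's output:
    print(parse_sentiment("Sentiment: Positive."))  # positive
```

The parser is deliberately crude. It returns the first label word it finds, so it would read a reply such as “not negative” as negative. For anything beyond practice, ask the model to answer with a single word and check for that exact format.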

2. Text Summarization

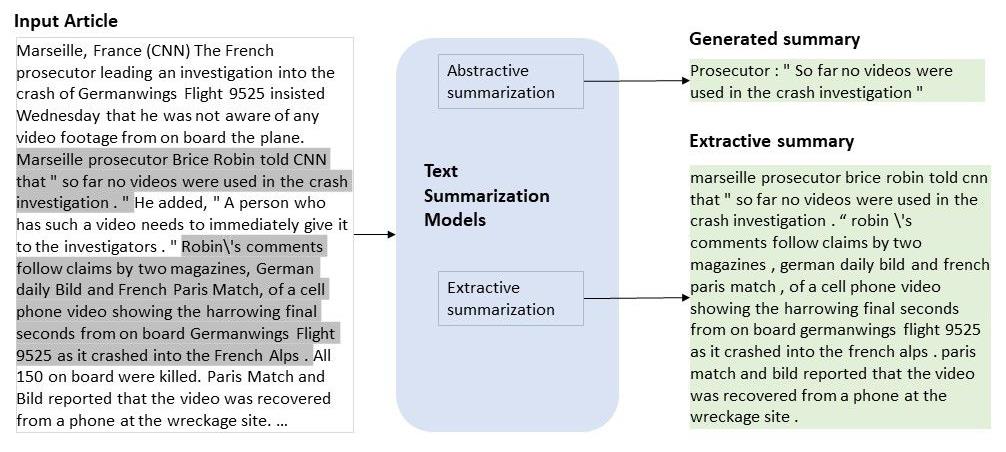

Text summarization is the process of generating a concise version of a longer text while preserving the main ideas and key information. Applications of text summarization include creating article abstracts, generating meeting minutes, or summarizing lengthy reports to quickly understand the essence without reading through the entire content.

Example prompt for text summarization:

“Generate a summary of the following text in 3-5 sentences:

[Insert text passage to summarize]

Summary:”

Exercise: Create zero-shot prompts to summarize the following passages:

- “In recent years, the rapid advancement of artificial intelligence has transformed various industries, from healthcare and finance to transportation and entertainment. AI technologies, such as machine learning and natural language processing, have enabled machines to perform tasks that previously required human intelligence, leading to increased efficiency, accuracy, and innovation. However, the rise of AI has also raised concerns about job displacement, privacy, and the ethical implications of relying on algorithms for decision-making.”

- “Climate change is one of the most pressing issues facing our planet today. The increasing levels of greenhouse gases in the atmosphere, primarily due to human activities such as burning fossil fuels and deforestation, have led to rising global temperatures, melting polar ice caps, and more frequent and intense natural disasters. To mitigate the impacts of climate change, it is crucial for individuals, businesses, and governments to take action by reducing carbon emissions, investing in renewable energy, and promoting sustainable practices.”

Explanation: This prompt asks the AI model to generate a summary of the provided text passage in a specific length range (3-5 sentences). By setting this constraint, the prompt encourages the model to focus on the most important information and convey it concisely. The “Summary:” output indicator marks where the model’s response should begin, encouraging a clear and structured reply.

Desired outcome: The AI model should generate a brief and informative summary that captures the main points and key ideas of the original text passage, allowing readers to quickly grasp the essential information without reading the entire content.
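The 3-5 sentence constraint is also easy to check automatically. This sketch assumes plain-text replies and uses hypothetical helper names. It fills the summarization template and then applies a rough regular-expression sentence splitter to test whether a summary stays within the requested range.

```python
import re

SUMMARY_TEMPLATE = (
    "Generate a summary of the following text in {min_s}-{max_s} sentences:\n\n"
    "{text}\n\n"
    "Summary:"
)


def build_summary_prompt(text: str, min_s: int = 3, max_s: int = 5) -> str:
    """Fill the zero-shot summarization template, including the length range."""
    return SUMMARY_TEMPLATE.format(min_s=min_s, max_s=max_s, text=text.strip())


def count_sentences(text: str) -> int:
    """Rough count: split wherever '.', '!' or '?' is followed by whitespace.

    Heuristic only; abbreviations such as "e.g. " are miscounted as breaks.
    """
    pieces = re.split(r"(?<=[.!?])\s+", text.strip())
    return len([p for p in pieces if p])


def meets_length_constraint(summary: str, min_s: int = 3, max_s: int = 5) -> bool:
    """True if the summary's sentence count falls within [min_s, max_s]."""
    return min_s <= count_sentences(summary) <= max_s
```

Pass each summary you collect from a platform to `meets_length_constraint`. This checks only the length requirement. Whether the summary captures the main ideas still needs human judgment.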

3. Question-Answering

Question-answering involves providing accurate and relevant answers to user queries based on the available information. Examples of question-answering tasks include answering factual questions, retrieving specific information from a document, or providing definitions of terms.

Example prompt for question-answering:

“Answer the following question based on the provided context:

[Insert context]

Question: [Insert question]

Answer:”

Exercise: Create zero-shot prompts for the following questions:

- Context: “France is a country located in Western Europe. It is known for its rich history, art, and cuisine. The capital of France is a city that is famous for its iconic landmarks, such as the Eiffel Tower and the Louvre Museum.”

Question: “What is the capital of France?”

- Context: “From the conversion of sunlight into chemical energy to the synthesis of glucose and the release of oxygen, a detailed exploration of photosynthesis provides insights into the intricate workings of plant biology and its significance in sustaining life on Earth.”

Question: “How does photosynthesis work in plants?”

Explanation: This prompt provides the AI model with a specific question and the necessary context to answer it. By including the context, the prompt ensures that the model has the relevant information to generate an accurate and informative response. The “Answer:” output indicator prompts the model to provide a direct and concise answer to the given question.

Desired outcome: The AI model should generate a clear, accurate, and relevant answer to the specified question, taking into account the provided context. The answer should address the question directly and provide the requested information in a concise manner.
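The question-answering template can be filled in the same way. The sketch below also adds a crude grounding check, `answer_in_context`, a hypothetical helper that tests whether every word of an answer literally appears in the context. Running it on the first exercise is instructive. The France context never actually names the capital, so a correct answer (“Paris”) must come from the model’s pre-existing knowledge rather than from the provided context.

```python
import re

QA_TEMPLATE = (
    "Answer the following question based on the provided context:\n\n"
    "{context}\n\n"
    "Question: {question}\n\n"
    "Answer:"
)


def build_qa_prompt(context: str, question: str) -> str:
    """Fill the zero-shot QA template with a context passage and a question."""
    return QA_TEMPLATE.format(context=context.strip(), question=question.strip())


def _words(text: str) -> list:
    """Lowercased alphabetic words, ignoring punctuation and digits."""
    return re.findall(r"[a-z]+", text.lower())


def answer_in_context(answer: str, context: str) -> bool:
    """Crude grounding check: does every word of the answer appear in the context?

    Exact word matching only, so paraphrases and synonyms count as "not found".
    """
    context_words = set(_words(context))
    answer_words = _words(answer)
    return bool(answer_words) and all(w in context_words for w in answer_words)


if __name__ == "__main__":
    france = ("France is a country located in Western Europe. It is known for its "
              "rich history, art, and cuisine. The capital of France is a city that "
              "is famous for its iconic landmarks, such as the Eiffel Tower and the "
              "Louvre Museum.")
    print(build_qa_prompt(france, "What is the capital of France?"))
    print(answer_in_context("Paris", france))  # False: the context never names it
```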

Prompt Creation Guidelines:

- Be clear and specific in your instructions to the AI model. Clearly state the task you want it to perform and provide any necessary context.

- Use simple and concise language to ensure the model understands your intent.

- If applicable, provide examples of the desired output format to guide the model’s response.

- Avoid ambiguity and vague terms that may confuse the model or lead to irrelevant responses.

AI Platform Exploration:

- OpenAI ChatGPT: ChatGPT is OpenAI’s conversational assistant, built on its GPT family of language models. It can engage in conversational interactions and perform various natural language tasks, including zero-shot prompting. To use ChatGPT for zero-shot prompting, enter your prompt in the chat interface and review its response.

- Anthropic: Anthropic offers a family of AI models called Claude, which can be used for zero-shot prompting. You can try Claude through Anthropic’s web interface, or sign up for an API key to integrate it into your own application.

- Google Gemini: Gemini is Google’s family of multimodal AI models, available through the Gemini chat app and the Gemini API. Like ChatGPT and Claude, it accepts zero-shot prompts directly: enter your prompt and compare its response with those from the other platforms.

Results Comparison and Discussion: After creating zero-shot prompts for the given tasks and testing them on different AI platforms, compare the results. Consider the following:

- How accurate and relevant were the generated responses?

- Did the models capture the intended sentiment, summarize the text effectively, or provide correct answers to the questions?

- Were there any notable differences in performance between the AI platforms?

- Discuss your observations and insights with your peers to gain a deeper understanding of zero-shot prompting.
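To compare platforms more systematically than by eye, record each platform’s label for each snippet alongside the label you expect, then compute a simple accuracy per platform. The sketch below assumes you have already collected the outputs by hand. The platform names and labels in `records` are placeholders, not real results.

```python
from collections import defaultdict


def accuracy_by_platform(records):
    """records: iterable of (platform, expected_label, model_label) tuples.

    Returns {platform: fraction of items where the model's label matched},
    comparing labels case-insensitively after stripping whitespace.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for platform, expected, got in records:
        totals[platform] += 1
        if expected.strip().lower() == got.strip().lower():
            correct[platform] += 1
    return {p: correct[p] / totals[p] for p in totals}


# Placeholder records: replace them with the labels you actually observed.
records = [
    ("platform_a", "positive", "Positive"),
    ("platform_a", "negative", "negative"),
    ("platform_b", "positive", "positive"),
    ("platform_b", "negative", "neutral"),
]
print(accuracy_by_platform(records))  # {'platform_a': 1.0, 'platform_b': 0.5}
```

With only two test snippets, accuracy differences are anecdotal. Add more snippets before drawing conclusions about one platform versus another.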

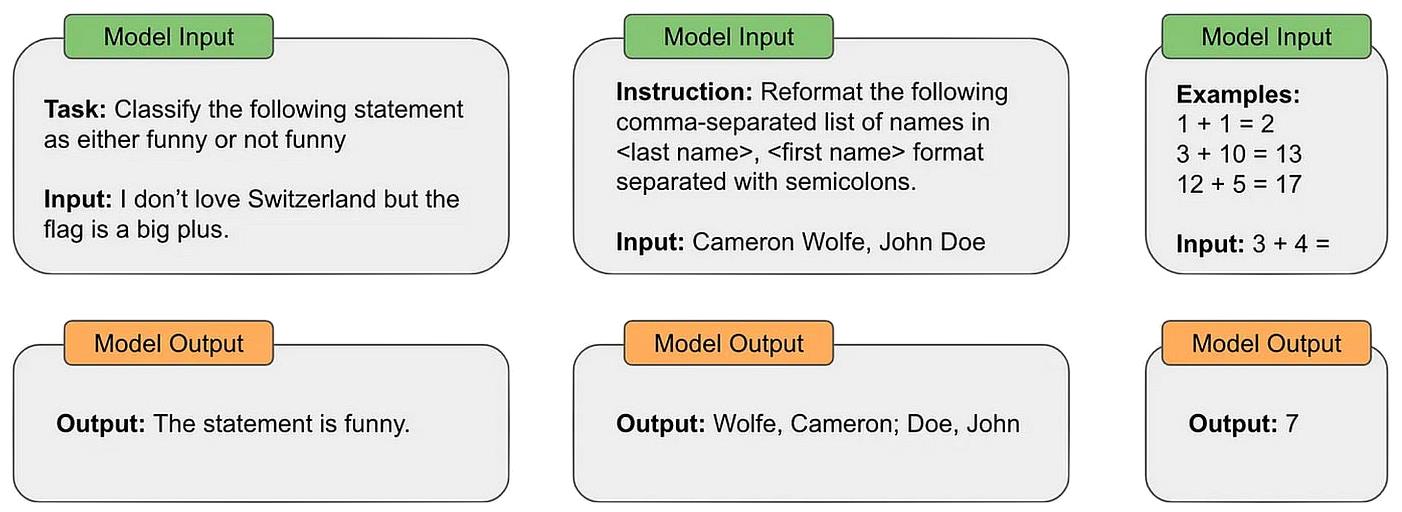

8.3 Few-Shot Prompting Exercise

Few-shot prompting involves providing an AI model with a small number of examples to guide its understanding of a task. By learning from these examples, the model can generate more accurate and relevant responses. In this exercise, we’ll explore few-shot prompting through two tasks:

1. Text Classification

Text classification involves assigning predefined categories or labels to a given piece of text based on its content. Examples of text classification tasks include classifying emails as spam or not spam, categorizing news articles by topic, or determining the sentiment of customer reviews.

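Example prompt for text classification (to make it few-shot, place a few example emails, each followed by its correct classification, before the email you want classified):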

“Classify the following email as either ‘Spam’ or ‘Not Spam’:

[Insert email content]

Classification:”

Exercise: Create few-shot prompts for the following text classification task:

- Dataset: A collection of movie reviews labeled as “positive” or “negative.”

- Task: Classify the sentiment of the following review: “The film had its moments, but overall, it fell short of expectations. The pacing was slow, and the characters lacked depth.”

Note on the dataset: In few-shot prompting, the model is not retrained on the dataset. Instead, select a few labeled reviews from it and place them in the prompt, each followed by its label, before the review you want classified. The model infers the input-output pattern from those examples and labels the new review as either “positive” or “negative.”

The dataset typically comes from a pre-existing source or is created specifically for the task. Besides supplying the in-prompt examples, its remaining labeled reviews can be held back to evaluate how accurately your prompt classifies new, unseen reviews.

In the practice exercise, the focus is on using the prompt to input a specific movie review and get the model’s classification.

Explanation: This prompt instructs the AI model to classify the provided email content into one of two categories: “Spam” or “Not Spam.” By specifying the classification labels, the prompt sets clear expectations for the model’s output, and the “Classification:” output indicator encourages a direct and unambiguous response.

Desired outcome: The AI model should accurately classify the given email content as either “Spam” or “Not Spam” based on its analysis of the text. The classification should be based on relevant features and patterns typically associated with spam emails, such as suspicious subject lines, promotional language, or misleading content.
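The following sketch shows concretely what “few-shot” means for the movie-review task. A handful of labeled examples are written into the prompt in the same format as the final, unlabeled review. The example reviews are invented placeholders; in the exercise they would come from the labeled dataset. `build_few_shot_prompt` is a hypothetical helper.

```python
from typing import Sequence, Tuple


def build_few_shot_prompt(
    examples: Sequence[Tuple[str, str]],
    new_review: str,
    labels: Sequence[str] = ("positive", "negative"),
) -> str:
    """Assemble a few-shot classification prompt.

    Each (review, label) example uses the same "Review:/Sentiment:" format
    the model should follow; the prompt ends with an open "Sentiment:" slot.
    """
    label_list = " or ".join(f'"{label}"' for label in labels)
    blocks = [f"Classify the sentiment of each movie review as {label_list}."]
    for text, label in examples:
        if label not in labels:
            raise ValueError(f"unexpected label {label!r}")
        blocks.append(f"Review: {text}\nSentiment: {label}")
    blocks.append(f"Review: {new_review}\nSentiment:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    # Invented placeholder examples; in the exercise, draw these from the dataset.
    examples = [
        ("A heartfelt story with stunning performances.", "positive"),
        ("Dull, predictable, and far too long.", "negative"),
    ]
    target = ("The film had its moments, but overall, it fell short of "
              "expectations. The pacing was slow, and the characters lacked depth.")
    print(build_few_shot_prompt(examples, target))
```

Using one example per label keeps the prompt balanced. If every example were positive, the model might lean toward that label regardless of the review.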

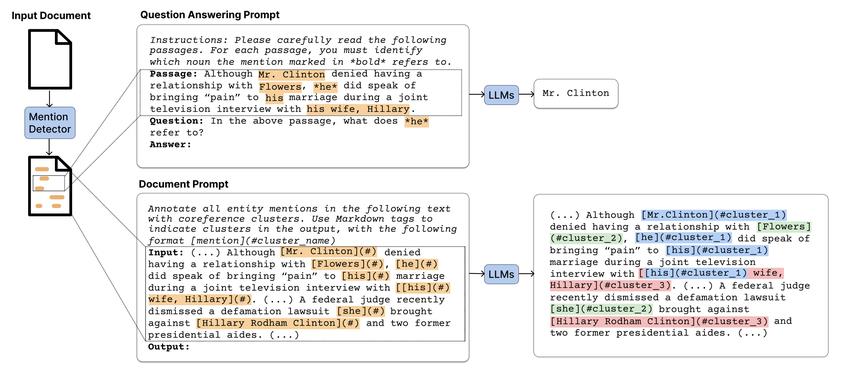

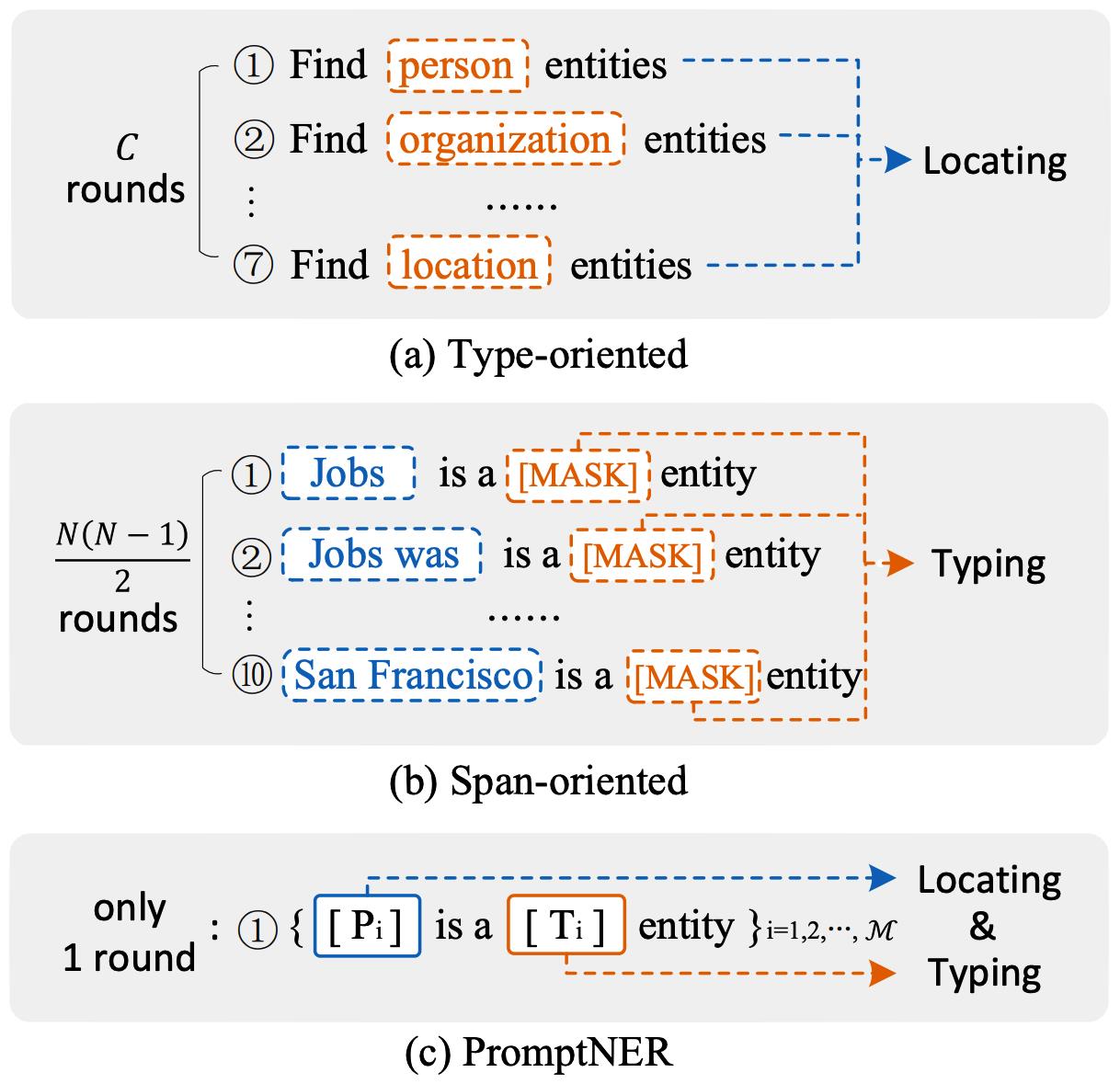

2. Named Entity Recognition

Named Entity Recognition (NER) involves identifying and classifying named entities, such as person names, organizations, locations, dates, or quantities, within a given text. It is a fundamental task in natural language processing and has applications in information extraction, text mining, and knowledge discovery.

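Example prompt for named entity recognition (to make it few-shot, place a few example texts, each followed by its list of classified entities, before the text you want analyzed):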

“Identify and classify the named entities in the following text:

[Insert text]

Entities:”

Exercise: Create few-shot prompts for the following NER task:

- Dataset: A set of news articles with annotated named entities (person, organization, location).

- Task: Identify and classify the named entities in the following sentence: “Apple CEO Tim Cook met with President Joe Biden at the White House to discuss privacy and technology issues.”

Note on the dataset: The dataset is a collection of text documents with annotated named entities (person, organization, location, etc.). For a few-shot prompt, include a few annotated sentences from it as examples. The model then follows the pattern in those examples to identify and classify named entities in new, unseen text.

Explanation: This prompt asks the AI model to identify and classify the named entities present in the provided text. By specifying “Entities:” as the output indicator, the prompt expects the model to list the identified entities along with their corresponding classifications (e.g., person, organization, location). This prompt allows for a more detailed and structured output compared to a simple binary classification.

Desired outcome: The AI model should accurately identify and classify the named entities within the given text. It should recognize entities such as person names, organizations, locations, dates, or quantities and provide their corresponding classifications. The output should be presented in a clear and structured format, enabling easy interpretation and further analysis of the extracted entities.
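In NER, the examples in a few-shot prompt do double duty. They show which entity types to use, and they fix the output format, which makes the reply easy to parse. This sketch assumes a “Name (TYPE); Name (TYPE)” format and the three types from the dataset description. The two annotated example sentences are invented for illustration, and the helper names are hypothetical.

```python
import re
from typing import List, Sequence, Tuple

ENTITY_TYPES = ("PERSON", "ORGANIZATION", "LOCATION")

# Invented annotated examples; in the exercise, take these from the dataset.
EXAMPLES: List[Tuple[str, str]] = [
    ("Maria Lopez joined Acme Corp in Chicago.",
     "Maria Lopez (PERSON); Acme Corp (ORGANIZATION); Chicago (LOCATION)"),
    ("The Red Cross opened a shelter in Lyon.",
     "Red Cross (ORGANIZATION); Lyon (LOCATION)"),
]

# One entity: a name without ';' or parentheses, then its type in parentheses.
_ENTITY_RE = re.compile(r"\s*([^;()]+?)\s*\((" + "|".join(ENTITY_TYPES) + r")\)")


def build_ner_prompt(text: str,
                     examples: Sequence[Tuple[str, str]] = EXAMPLES) -> str:
    """Few-shot NER prompt whose examples fix the 'Name (TYPE); ...' format."""
    blocks = ["Identify and classify the named entities (PERSON, ORGANIZATION, "
              "LOCATION) in each text."]
    for example_text, entities in examples:
        blocks.append(f"Text: {example_text}\nEntities: {entities}")
    blocks.append(f"Text: {text}\nEntities:")
    return "\n\n".join(blocks)


def parse_entities(reply: str) -> List[Tuple[str, str]]:
    """Extract (name, TYPE) pairs from a reply in the 'Name (TYPE); ...' format."""
    return [(m.group(1), m.group(2)) for m in _ENTITY_RE.finditer(reply)]
```

Entities with types outside the three listed (for example a date) are silently skipped by this parser. To capture them, extend `ENTITY_TYPES` and the examples together.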

Prompt Creation Guidelines:

- Include a few representative examples from the labeled dataset in your prompt to demonstrate the desired input-output mapping.

- Ensure that the examples cover different scenarios or variations of the task to help the model generalize better.

- Provide clear instructions on how the model should format its output, such as using specific labels or delimiters.

- Experiment with different numbers of examples to find the optimal balance between providing sufficient guidance and not overloading the model.

AI Platform Exploration and Testing:

- OpenAI ChatGPT: Use the ChatGPT interface to provide your few-shot prompts and labeled examples. Observe how the model generates responses based on the provided examples.

- Anthropic: Integrate Anthropic’s API into your application or use their web interface to test few-shot prompting with Claude. Evaluate the model’s performance and compare it with other platforms.

Experimenting with Different Numbers of Examples: Try varying the number of examples provided in your few-shot prompts to see how it affects the model’s performance. Start with a small number of examples (e.g., 2-3) and gradually increase it. Observe the following:

- Does increasing the number of examples lead to better accuracy and relevance in the generated responses?

- Is there a point of diminishing returns, where adding more examples doesn’t significantly improve performance?

- How does the model handle edge cases or examples that deviate from the provided patterns?
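For the experiment on the number of examples, build all the prompt variants from the same shuffled pool. That way the 3-example prompt contains the 2-example prompt’s examples plus one more, and the only thing that changes is the number of examples, k. Below is a minimal sketch with a hypothetical helper name and a fixed random seed for reproducibility.

```python
import random
from typing import Dict, Sequence, Tuple


def prompts_for_k_values(
    pool: Sequence[Tuple[str, str]],
    new_text: str,
    k_values: Sequence[int] = (2, 3, 5),
    seed: int = 0,
) -> Dict[int, str]:
    """Build one few-shot prompt per k from a single shuffled pool.

    Every prompt takes the first k items of the same shuffled list, so larger
    prompts contain the smaller prompts' examples plus additional ones.
    """
    shuffled = list(pool)
    random.Random(seed).shuffle(shuffled)  # fixed seed -> reproducible order
    prompts = {}
    for k in k_values:
        if k > len(shuffled):
            raise ValueError(f"k={k} exceeds the {len(shuffled)} available examples")
        blocks = [f"Text: {text}\nLabel: {label}" for text, label in shuffled[:k]]
        blocks.append(f"Text: {new_text}\nLabel:")
        prompts[k] = "\n\n".join(blocks)
    return prompts
```

Send each prompt to the model and score the replies on the same set of test items. Then look for the value of k beyond which extra examples stop improving the results.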

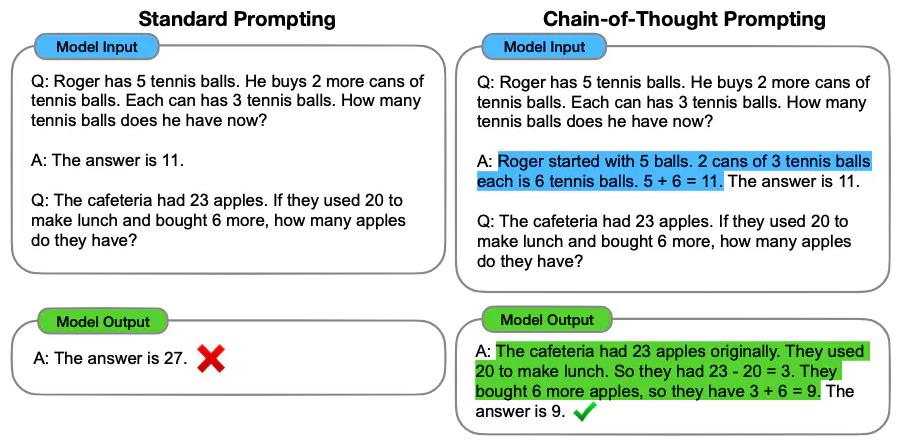

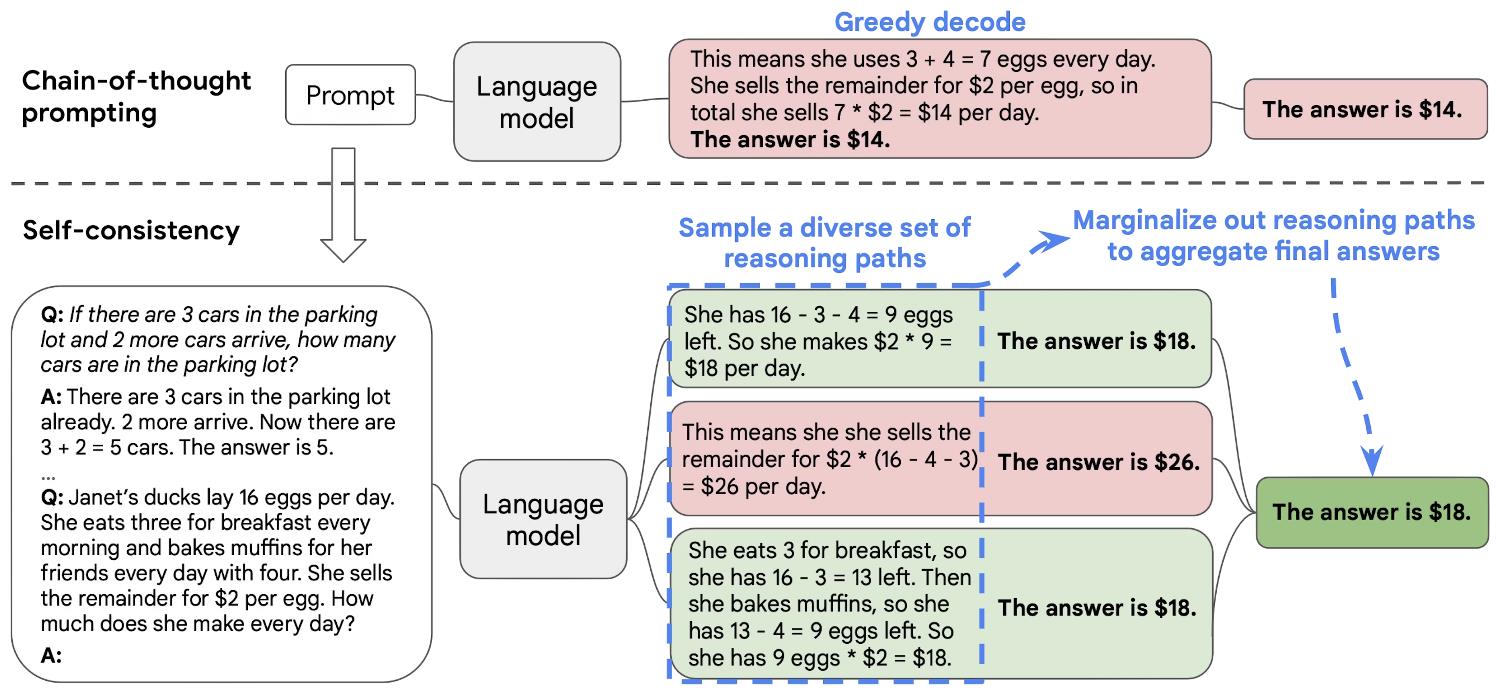

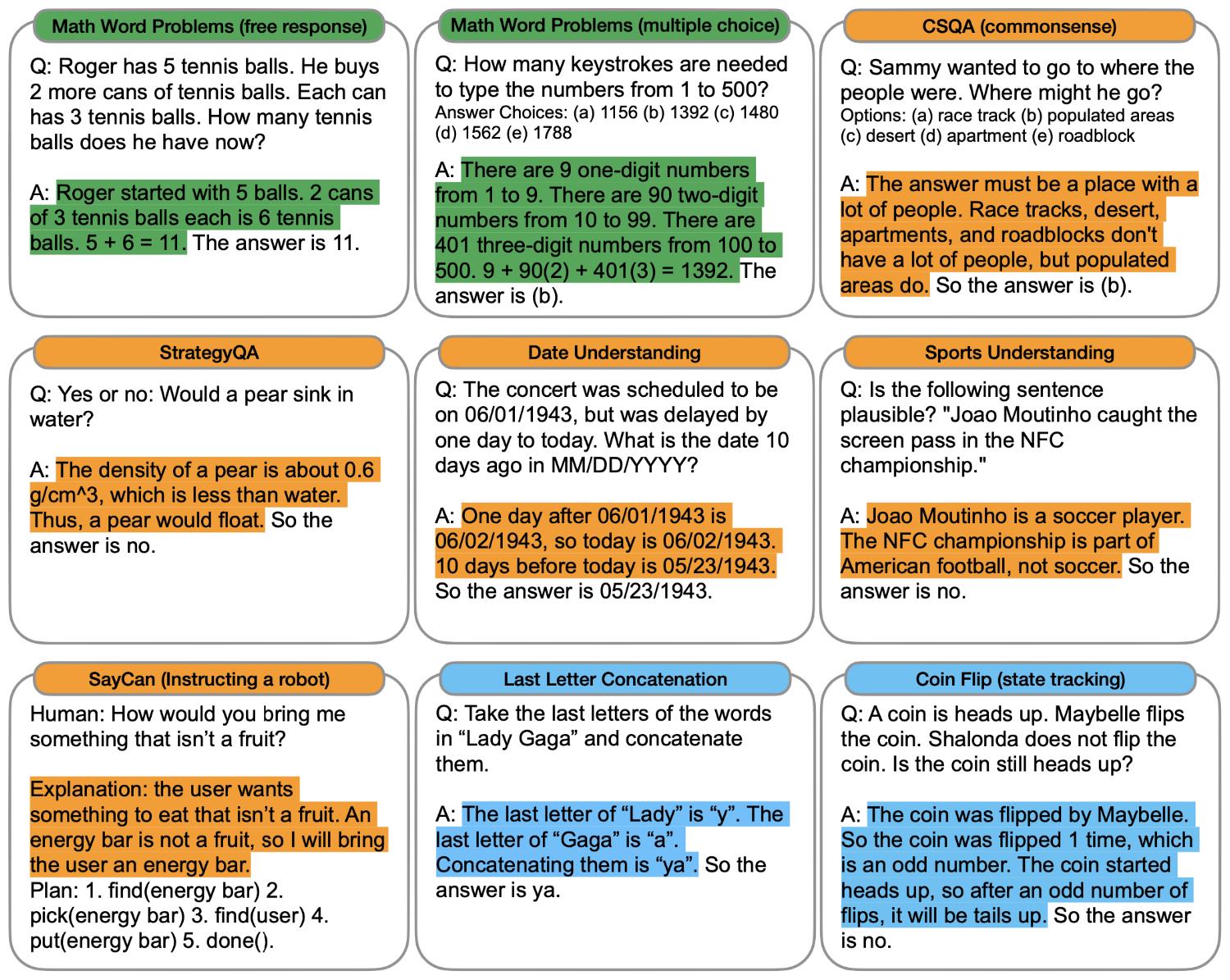

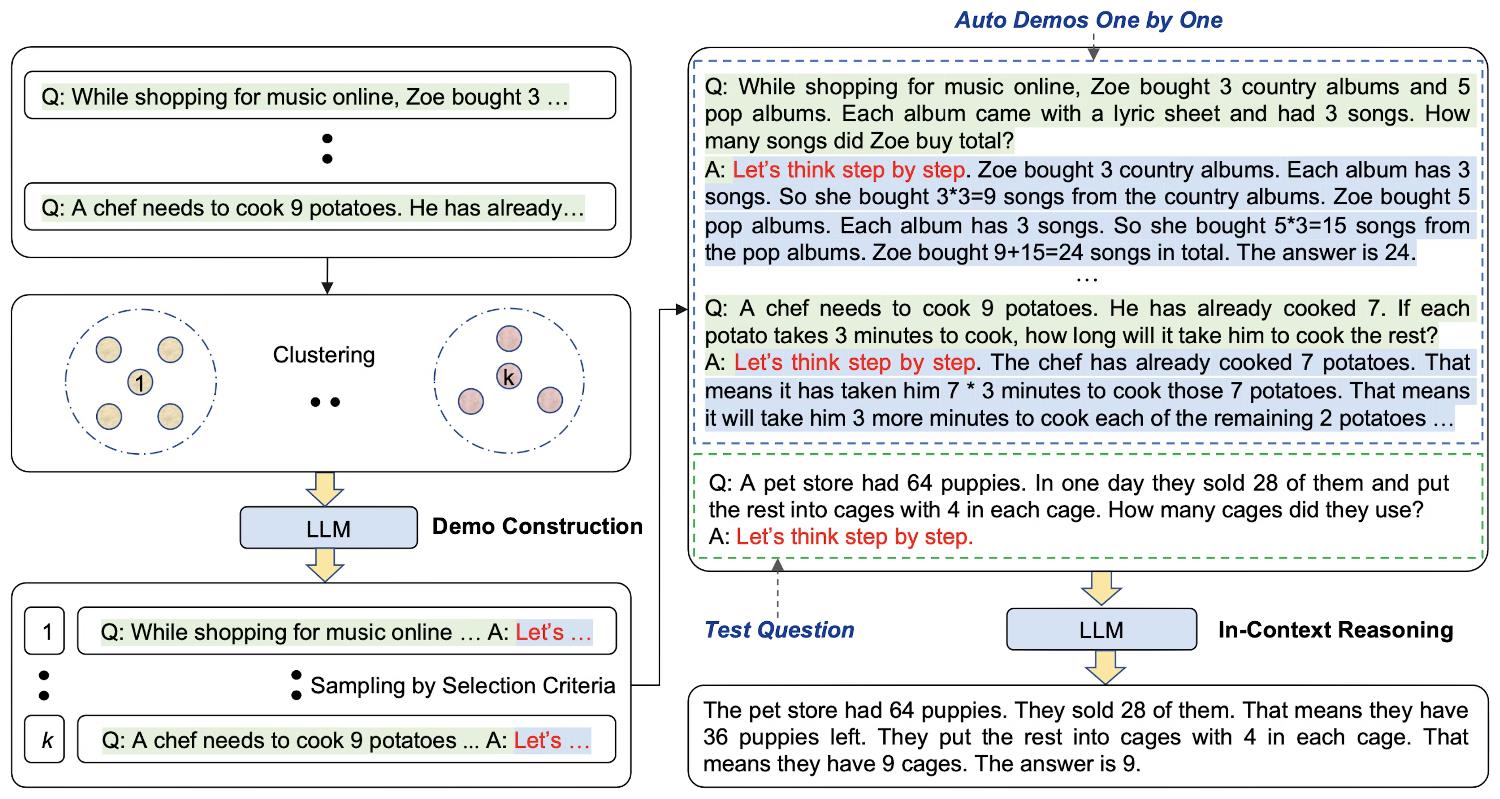

8.4 Chain-of-Thought Prompting Exercise

Chain-of-thought prompting is a technique that encourages the AI model to break down a complex problem into smaller steps and provide a step-by-step reasoning process. By guiding the model to think through the problem systematically, chain-of-thought prompting can lead to more accurate and interpretable results. Let’s explore this technique through two problem types:

1. Mathematical Word Problem

Mathematical word problems require the AI model to understand the problem statement, identify the relevant information, and apply mathematical concepts to solve the problem.

Such problems involve translating textual descriptions of real-world scenarios into mathematical expressions, which the AI must then solve to obtain numerical answers. This requires a combination of language understanding and mathematical reasoning skills.

Example prompt for a mathematical word problem:

“Solve the following problem step by step:

A bakery sells cookies in boxes of 12. If the bakery has 180 cookies, how many full boxes can be filled, and how many cookies will be left over?

Step 1: Identify the total number of cookies the bakery has.

Step 2: Determine the number of cookies in each box.

Step 3: Divide the total number of cookies by the number of cookies per box to find the number of full boxes.

Step 4: Calculate the remainder to find the number of cookies left over.

Step 5: Provide the final answer.”

Explanation: This prompt presents a mathematical word problem and asks the AI model to solve it step by step. By breaking down the problem-solving process into individual steps, the prompt encourages the model to provide a clear and logical explanation of its reasoning. The numbered steps guide the model to present its solution in a structured and easy-to-follow manner, and Step 5 prompts the model to state the final numerical result.

Desired outcome: The AI model should generate a step-by-step solution to the given mathematical word problem. Each step should represent a logical progression in the problem-solving process, demonstrating the model’s understanding of the problem and its ability to apply relevant mathematical concepts. The final answer should be accurate and clearly stated, providing the number of full cookie boxes and the remaining cookies.
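It is worth computing the expected answer before you read the model’s output, so you can check each step. Steps 3 and 4 of the prompt correspond to integer division and the remainder. Since 180 ÷ 12 = 15 exactly, the bakery fills 15 full boxes with 0 cookies left over. The short check below does the same arithmetic with a hypothetical `pack_cookies` helper. A model response that reports leftover cookies has made an error somewhere in its chain of steps.

```python
from typing import Tuple


def pack_cookies(total_cookies: int, per_box: int) -> Tuple[int, int]:
    """Steps 3-4 of the prompt: full boxes by integer division, leftovers by remainder."""
    if per_box <= 0:
        raise ValueError("per_box must be a positive number of cookies")
    return divmod(total_cookies, per_box)


# Steps 1-2: the values stated in the problem.
total_cookies, per_box = 180, 12
full_boxes, leftover = pack_cookies(total_cookies, per_box)
print(f"Full boxes: {full_boxes}, cookies left over: {leftover}")
# Full boxes: 15, cookies left over: 0
```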


2. Logical Reasoning Task

Logical reasoning tasks involve evaluating the validity of arguments, drawing conclusions from given premises, and analyzing the logical structure of statements. They require critical thinking skills and the ability to identify logical fallacies or inconsistencies.

Example prompt for a logical reasoning task:

“Evaluate the logical validity of the following argument:

Premise 1: All birds have feathers.

Premise 2: Penguins are birds.

Conclusion: Therefore, penguins have feathers.

Step 1: Identify the logical structure of the argument.

Step 2: Determine the relationship between the premises.

Step 3: Evaluate the validity of the conclusion based on the premises.

Step 4: Provide a justification for your evaluation.

Evaluation:”

Explanation: This prompt presents a logical argument and asks the AI model to evaluate its validity step by step. By breaking down the evaluation process into distinct steps, the prompt guides the model to analyze the logical structure of the argument, assess the relationship between the premises, and determine the validity of the conclusion. The “Evaluation:” output indicator prompts the model to provide a clear assessment of the argument’s validity along with a justification for its reasoning.

Desired outcome: The AI model should generate a step-by-step evaluation of the given logical argument. Each step should demonstrate the model’s understanding of the argument’s structure and its ability to assess the logical relationship between the premises and the conclusion. The final evaluation should provide a clear judgment of the argument’s validity (e.g., valid, invalid) along with a well-reasoned justification supporting the model’s assessment.
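To check the model’s verdict independently, you can test validity mechanically. An argument is valid if no assignment of true/false values makes every premise true while the conclusion is false. The sketch below assumes each statement is read as a claim about one arbitrary animal x (“x is a bird”, “x is a penguin”, “x has feathers”), which is sufficient for a simple syllogism like this one. It is a checking aid, not a description of how the model reasons. For contrast, it also checks an invalid pattern: “All birds have feathers; x has feathers; therefore x is a bird” (affirming the consequent).

```python
from itertools import product
from typing import Callable, Dict, List, Sequence

Env = Dict[str, bool]
Statement = Callable[[Env], bool]


def implies(a: bool, b: bool) -> bool:
    """Material implication: 'if a then b' is false only when a is true and b false."""
    return (not a) or b


def is_valid(variables: Sequence[str], premises: List[Statement],
             conclusion: Statement) -> bool:
    """Valid = no truth assignment makes every premise true and the conclusion false."""
    for values in product([False, True], repeat=len(variables)):
        env = dict(zip(variables, values))
        if all(p(env) for p in premises) and not conclusion(env):
            return False  # found a counterexample
    return True


VARS = ["bird", "penguin", "feathers"]  # each about one arbitrary animal x

penguin_argument = is_valid(
    VARS,
    premises=[
        lambda e: implies(e["bird"], e["feathers"]),  # All birds have feathers.
        lambda e: implies(e["penguin"], e["bird"]),   # Penguins are birds.
    ],
    conclusion=lambda e: implies(e["penguin"], e["feathers"]),  # Penguins have feathers.
)

invalid_argument = is_valid(
    VARS,
    premises=[
        lambda e: implies(e["bird"], e["feathers"]),  # All birds have feathers.
        lambda e: e["feathers"],                      # x has feathers.
    ],
    conclusion=lambda e: e["bird"],                   # Therefore x is a bird.
)

print(penguin_argument, invalid_argument)  # True False
```

Validity concerns only the form of the argument. A valid argument with a false premise can still reach a false conclusion, which is a useful distinction to look for in the model’s justification.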

AI Platform Demonstration:

- OpenAI ChatGPT: Use the ChatGPT interface to provide the chain-of-thought prompts for the mathematical word problem and logical reasoning task. Observe how the model generates step-by-step solutions and explanations.

- Anthropic: Integrate Anthropic’s API or use their web interface to test chain-of-thought prompting with Claude. Compare the generated responses with those from other platforms.

- Google Gemini: Chain-of-thought prompting is a prompting technique rather than a platform feature, so it works with Gemini as well. Provide the same step-by-step prompts and observe how the model responds.

Results Analysis and Benefits Discussion: After testing chain-of-thought prompting on different AI platforms, analyze the results and discuss the benefits of this technique:

- How does breaking down the problem into smaller steps impact the accuracy and clarity of the generated responses?

- Does chain-of-thought prompting lead to more interpretable and transparent reasoning processes?

- Are there any limitations or challenges in applying chain-of-thought prompting to certain types of problems?

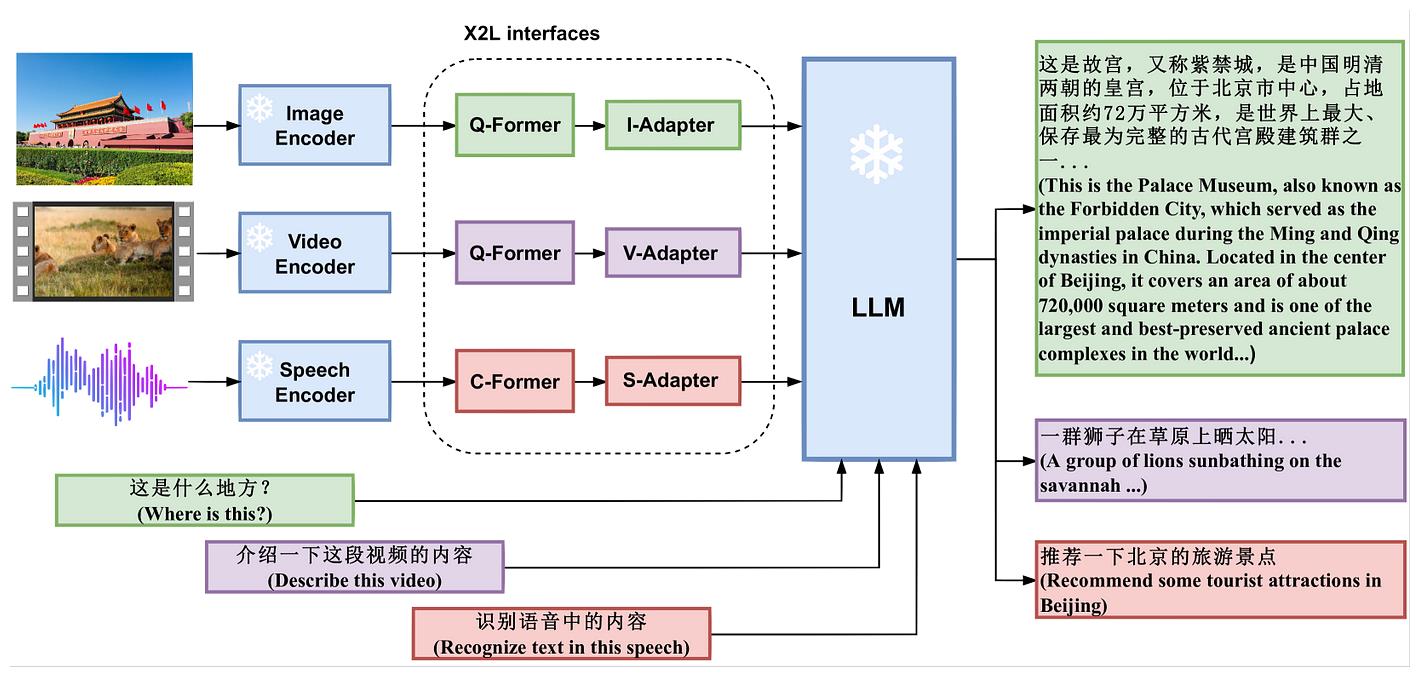

8.5 Multimodal Prompting Exercise

Multimodal prompting combines more than one “mode” of information in a single prompt. For example, while a traditional prompt typically has only one mode (text), a multimodal prompt might combine text with images, video, or audio.

By combining information from different modalities, multimodal prompting can lead to more contextually rich and accurate outputs. In this exercise, we’ll explore two multimodal prompting tasks:

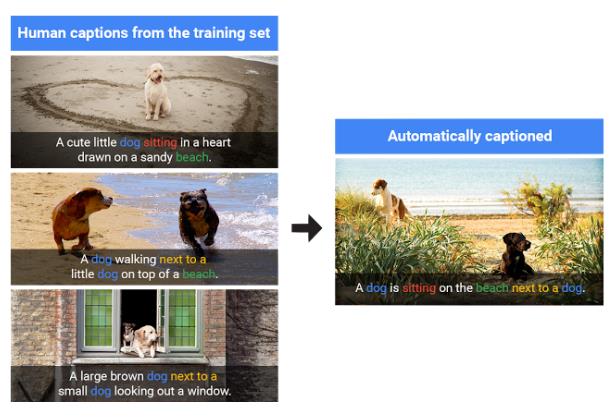

1. Image Captioning

Image captioning involves generating descriptive text based on the visual content of an image. It requires understanding the objects, actions, and relationships depicted in the image and translating that information into coherent and meaningful sentences.

Example prompt for image captioning:

“Generate a descriptive caption for the following image:

[Insert image]

Caption:”

Exercise: Create multimodal prompts for the following image captioning task:

- Dataset: A collection of images depicting various scenes and objects.

- Task: Generate a caption for an image of a person riding a bicycle in a park.

Note on the dataset: The dataset is a collection of images paired with their corresponding descriptive captions. The model learns from the image-caption pairs to generate descriptive captions for new, unseen images.

Explanation: This prompt presents an image to the AI model and asks it to generate a descriptive caption. By providing the visual input and specifying the “Caption:” output indicator, the prompt directs the model to analyze the image and generate a textual description that captures the key elements and relationships within the image. The prompt encourages the model to provide a concise and informative summary of the image’s content.

Desired outcome: The AI model should generate a descriptive caption that accurately represents the visual content of the given image. The caption should identify the main objects, actions, and relationships present in the image and convey them in a clear and concise manner. The generated caption should be grammatically correct, coherent, and provide a meaningful description of the image that can be easily understood by humans.
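In code, a multimodal prompt is usually sent as a single message that contains both a text part and an image part. The sketch below builds such a message in the content-part format used by OpenAI’s Chat Completions API, assuming that format: a list of `{"type": "text", ...}` and `{"type": "image_url", ...}` parts, with the image inlined as a base64 data URL. Other providers, including Anthropic and Google, use different field names, so treat this as one example format rather than a universal one. The helper names are hypothetical, and the sketch leaves out sending the request.

```python
import base64
from typing import Any, Dict


def image_to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Inline raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_caption_message(image_bytes: bytes,
                          mime_type: str = "image/png") -> Dict[str, Any]:
    """One user message pairing the captioning instruction with the image."""
    return {
        "role": "user",
        "content": [
            {"type": "text",
             "text": "Generate a descriptive caption for the following image:"},
            {"type": "image_url",
             "image_url": {"url": image_to_data_url(image_bytes, mime_type)}},
        ],
    }
```

To use it, read your image file in binary mode (for example, a hypothetical `bicycle_in_park.png`) and pass its bytes to `build_caption_message`. The resulting dictionary goes into the request’s list of messages.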

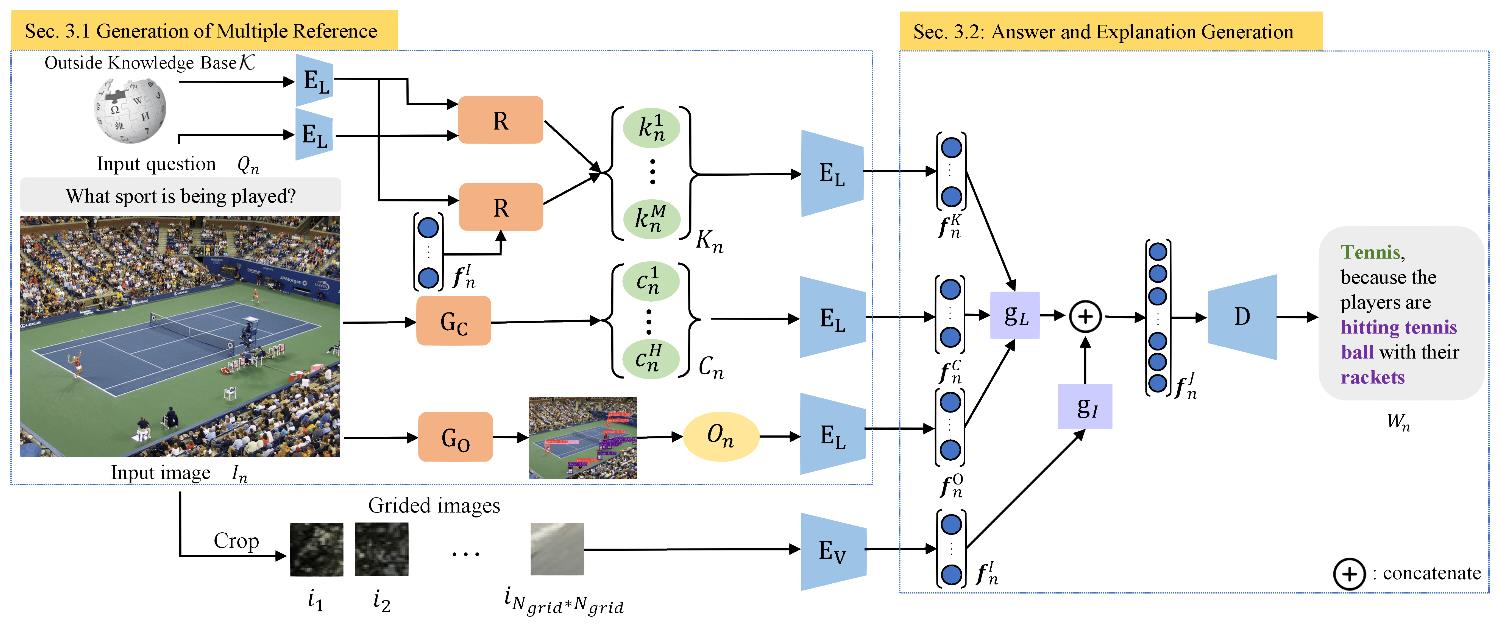

2. Visual Question Answering

Visual Question Answering (VQA) involves providing accurate answers to questions based on the visual information present in an image. It requires a combination of image understanding and language comprehension skills to interpret the question and determine the relevant information from the image to generate an appropriate answer.

Example prompt for visual question answering:

“Answer the following question based on the provided image:

[Insert image]

Question: [Insert question]

Answer:”

Exercise: Create multimodal prompts for the following visual question-answering task:

- Dataset: A set of images along with corresponding questions and answers.

- Task: Answer the question “What color is the car in the image?” based on an image of a red car parked on a street.

Note on the dataset: The dataset is a collection of images paired with questions and their corresponding answers. The model learns from the image-question-answer triplets to answer questions based on the visual information in new, unseen images.

Explanation: This prompt presents an image along with a specific question and asks the AI model to provide an answer based on the visual information. By including both the image and the question in the prompt, the model is guided to analyze the visual content and interpret the question in the context of the image. The “Answer:” output indicator prompts the model to generate a direct and concise response to the given question.

Desired outcome: The AI model should generate an accurate and relevant answer to the provided question based on the visual information present in the given image. The answer should demonstrate the model’s understanding of the image content and its ability to relate it to the specific question being asked. The generated answer should be clear, concise, and directly address the question, providing the requested information based on the visual evidence.
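Scoring free-text VQA answers requires some tolerance for wording. The accuracy metric of the VQA benchmark compares the model’s answer with several human reference answers (ten per question) and gives full credit when at least three annotators gave the same answer. The sketch below implements that rule in simplified form, min(matches / 3, 1), with light normalization: lowercasing and removing punctuation and the articles “a”, “an”, and “the”. The official evaluation normalizes more aggressively and averages over subsets of annotators, so treat this as an approximation. The helper names are hypothetical.

```python
import string
from typing import Sequence

_ARTICLES = {"a", "an", "the"}


def normalize(answer: str) -> str:
    """Lowercase, drop punctuation and articles, collapse whitespace."""
    answer = answer.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(w for w in answer.split() if w not in _ARTICLES)


def vqa_accuracy(predicted: str, references: Sequence[str]) -> float:
    """Simplified VQA score: min(number of matching human answers / 3, 1)."""
    target = normalize(predicted)
    matches = sum(normalize(r) == target for r in references)
    return min(matches / 3, 1.0)
```

With fewer than three reference answers, the score can never reach 1. In this exercise you likely have a single hand-written reference (“red”), so comparing `normalize(predicted) == normalize(reference)` directly is the simpler check.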

Prompt Creation Guidelines:

- For image captioning, provide the image together with clear instructions on what the caption should cover, such as the key elements and their relationships.

- For visual question answering, include the image and the specific question you want the model to answer based on the visual information.

- If available, provide examples of image-text pairs or image-question-answer triplets to guide the model’s understanding of the task.

- Experiment with different prompt formats and structures to find the most effective way of combining textual and visual information.

AI Platform Exploration:

- OpenAI’s DALL-E: DALL-E is a powerful image generation model developed by OpenAI. While it doesn’t directly support multimodal prompting, you can use it to generate images based on textual descriptions and then use those images for captioning or visual question-answering tasks.

- Leonardo.ai: Leonardo.ai offers a suite of AI tools for multimodal tasks, including image captioning and visual question answering. Explore their platform and experiment with creating multimodal prompts to generate captions or answer questions based on images.

Results Analysis and Discussion: After testing multimodal prompting on different AI platforms, analyze the results and discuss your findings:

- How well do the models capture the relevant information from both the textual and visual modalities?

- Are the generated captions or answers accurate, descriptive, and contextually appropriate?

- What are the challenges or limitations of multimodal prompting, and how can they be addressed?

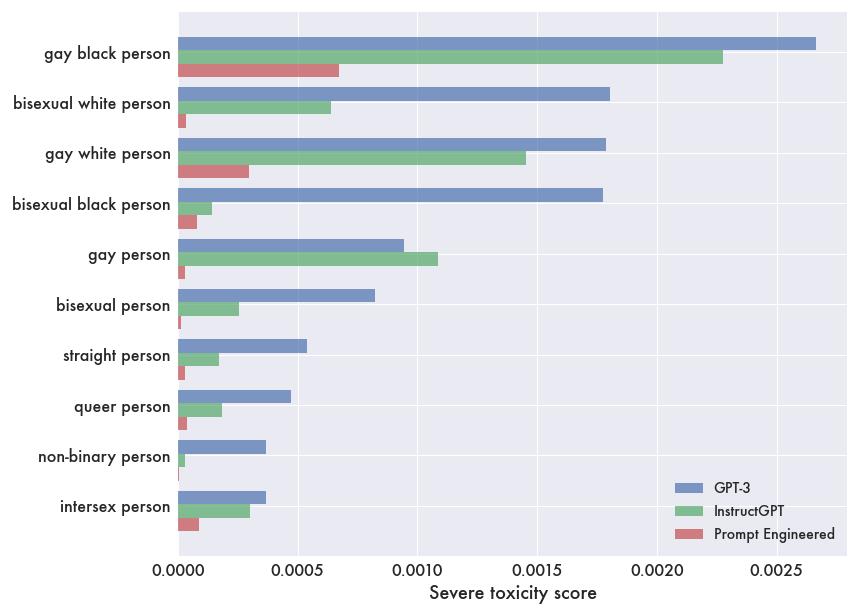

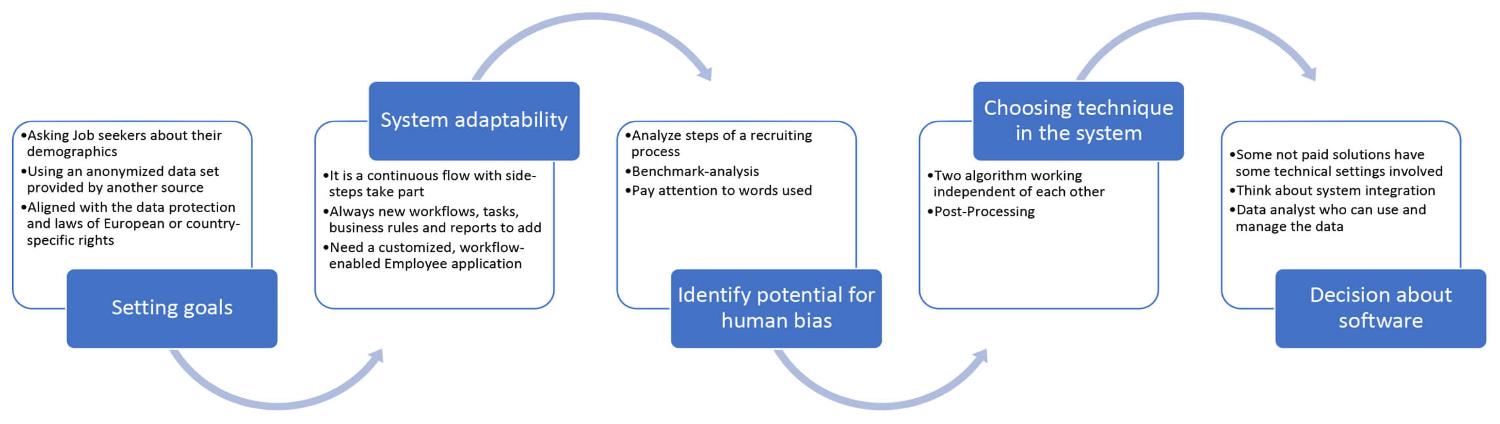

8.6 Prompt Engineering for Bias Reduction Exercise

AI models can sometimes exhibit biases based on the data they were trained on, leading to unfair or discriminatory outputs. Prompt engineering techniques can be used to mitigate these biases and promote more equitable results. In this exercise, we’ll explore prompt engineering for bias reduction in two sensitive domains:

1. Job Recommendations

Job recommendation systems aim to match job seekers with suitable job opportunities based on their skills, experience, and preferences. However, these systems can sometimes exhibit biases based on factors such as gender, race, or age, leading to unfair or discriminatory recommendations.

Example prompt for bias reduction in job recommendations:

“Generate job recommendations for a [Insert job seeker’s profile] without any gender, race, or age bias. Focus on the candidate’s skills, experience, and qualifications relevant to the job.

Recommendations:”

Exercise: Create prompts that aim to reduce bias in job recommendations:

- Dataset: A collection of job postings and candidate profiles.

- Task: Generate job recommendations for a female candidate with a background in software engineering, ensuring the suggestions are free from gender bias.

Note on the dataset: The dataset is a collection of job postings and job seeker profiles. The model learns from the dataset to generate job recommendations while aiming to reduce biases based on gender, race, or age.

Explanation: This prompt instructs the AI model to generate job recommendations for a specific job seeker profile while explicitly emphasizing the need to avoid biases based on gender, race, or age. By directing the model to focus on the candidate’s skills, experience, and qualifications, the prompt aims to ensure that the recommendations are based on merit and job-related factors rather than personal attributes. The “Recommendations:” output indicator prompts the model to provide a list of suitable job suggestions.

Desired outcome: The AI model should generate job recommendations that are free from gender, race, or age biases. The recommendations should be based solely on the job seeker’s relevant skills, experience, and qualifications, ensuring equal opportunities for all candidates regardless of their personal attributes. The generated recommendations should be diverse, inclusive, and aligned with the candidate’s profile and job preferences.
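One practical way to probe for bias in the job-recommendation exercise is a counterfactual test. Send two prompts that are identical except for a single demographic detail, then compare the recommendations. The sketch below builds such a prompt pair and measures how much the two recommendation lists overlap using Jaccard similarity (shared items divided by all distinct items). The profile text, the helper names, and the job titles in the usage check are hypothetical. Low overlap does not prove bias, but it flags pairs worth reading closely.

```python
from typing import Iterable, Tuple

TEMPLATE = (
    "Generate job recommendations for the following job seeker profile "
    "without any gender, race, or age bias. Focus on the candidate's skills, "
    "experience, and qualifications relevant to the job.\n\n"
    "Profile: {profile}\n\n"
    "Recommendations:"
)


def counterfactual_pair(profile: str, original: str, swapped: str) -> Tuple[str, str]:
    """Two prompts that differ only in one demographic term.

    Uses a naive substring swap, so choose terms that do not occur inside
    other words (swapping "man" would also change "woman").
    """
    if original not in profile:
        raise ValueError(f"{original!r} does not occur in the profile")
    return (TEMPLATE.format(profile=profile),
            TEMPLATE.format(profile=profile.replace(original, swapped)))


def jaccard(a: Iterable[str], b: Iterable[str]) -> float:
    """Overlap of two recommendation lists, case-insensitive; 1.0 = identical sets."""
    set_a = {x.strip().lower() for x in a}
    set_b = {x.strip().lower() for x in b}
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)
```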

2. Sentiment Analysis

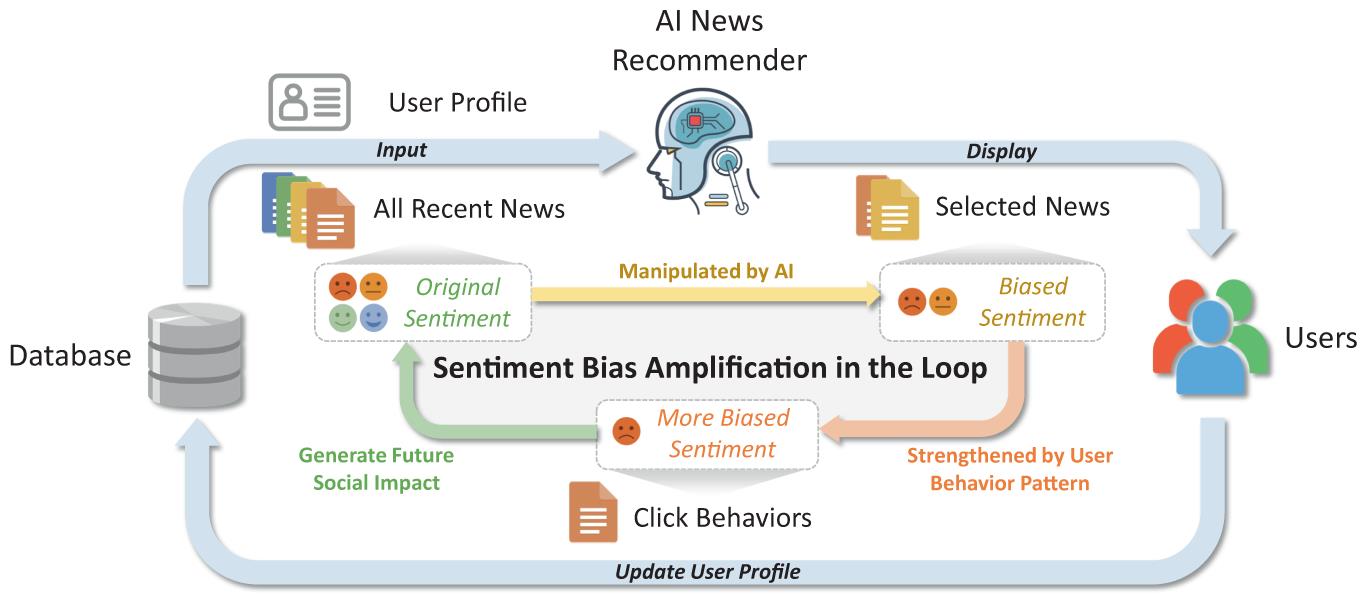

Sentiment analysis models can exhibit biases tied to the language, dialect, demographic group, or cultural context of the text being analyzed. Because a model learns from the patterns in its training data, it can inherit any biases in how those languages, dialects, groups, and cultural contexts are represented in that data. These biases can lead to inaccurate or unfair sentiment classifications, particularly for underrepresented or marginalized groups.

Example prompt for bias reduction in sentiment analysis:

“Analyze the sentiment of the following text without any language or cultural bias. Consider the context and meaning of the words used.

[Insert text]

Sentiment:”

Exercise: Create prompts that mitigate bias in sentiment analysis:

- Dataset: A set of text reviews from diverse demographic groups.

- Task: Analyze the sentiment of a review written in African American Vernacular English (AAVE), ensuring the model’s output is unbiased and culturally sensitive.

Note on the dataset: The dataset is a collection of text documents labeled with sentiment (positive, negative, or neutral). The model learns from the labeled examples to analyze the sentiment of new, unseen text while aiming to reduce language or cultural biases.

Explanation: This prompt asks the AI model to analyze the sentiment of a given text while explicitly instructing it to avoid language or cultural biases. By emphasizing the need to consider the context and meaning of the words used, the prompt encourages the model to focus on the actual sentiment expressed in the text rather than relying on surface-level features or stereotypes associated with specific languages or cultures. The “Sentiment:” output indicator prompts the model to provide a sentiment classification.

Desired outcome: The AI model should generate sentiment classifications that are unbiased and accurate, regardless of the language, dialect, or cultural context of the text being analyzed. The model should consider the nuances and meaning behind the words used, taking into account the specific context in which they appear. The generated sentiment should reflect the true emotional tone of the text, free from any preconceived notions or stereotypes associated with particular languages or cultures.

Prompt Creation Guidelines for Bias Reduction:

- Be aware of the potential biases that may exist in the training data or the AI model’s outputs.

- Use neutral and inclusive language in your prompts, avoiding terms or phrases that may reinforce stereotypes or biases.

- Explicitly instruct the model to generate outputs that are fair, unbiased, and respectful of diversity.

- Include examples of unbiased or bias-mitigated outputs in your prompts to guide the model’s behavior.

- Continuously monitor and assess the model’s outputs for any signs of bias and refine your prompts accordingly.
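The guidelines on explicit instructions and on including examples can be combined in a single few-shot prompt. The sketch below assembles one. The instruction wording and the labeled reviews are hypothetical illustrations written for this sketch, not taken from a real dataset; you would replace them with examples from your own labeled data.

```python
from typing import List, Tuple

# Illustrative instruction; adapt the wording to your task.
INSTRUCTION = (
    "Classify the sentiment of each review as positive, negative, or neutral. "
    "Judge only what the writer expresses. Do not treat dialect, slang, or "
    "nonstandard spelling as a signal of sentiment."
)


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Instruction, then labeled examples, then the unlabeled query."""
    parts = [INSTRUCTION, ""]
    for text, label in examples:
        # Each example ends with a newline so examples are blank-line separated.
        parts.append(f"Review: {text}\nSentiment: {label}\n")
    # Leave the final label empty for the model to complete.
    parts.append(f"Review: {query}\nSentiment:")
    return "\n".join(parts)


if __name__ == "__main__":
    # Hypothetical examples, not drawn from a real dataset.
    examples = [
        ("That show was fire, I'm still hyped.", "positive"),
        ("Food was mid and we waited an hour.", "negative"),
    ]
    print(build_few_shot_prompt(examples, "Service was slow but the staff was real cool."))
```

Choosing examples whose informal or dialectal wording carries a clear sentiment shows the model that such wording should be read for meaning rather than treated as noise.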

AI Platform Testing and Results Analysis:

- Test your bias-reduction prompts on different AI platforms, such as OpenAI ChatGPT, Anthropic Claude, or Google Gemini.

- Analyze the generated outputs for any indications of bias, such as skewed recommendations or inconsistent sentiment classifications across different demographic groups.

- Compare the results across platforms and identify any patterns or differences in their handling of biased inputs.
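To make "inconsistent sentiment classifications across different demographic groups" measurable, you can compare the model's predictions against the dataset's sentiment labels and compute accuracy separately for each group. This sketch assumes each result is stored as a `(group, gold_label, predicted_label)` tuple; the group names and records are placeholders, not real results. Running it once per platform gives comparable numbers for the cross-platform comparison.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Assumed record shape: (group, gold_label, predicted_label)
Record = Tuple[str, str, str]


def accuracy_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Share of predictions that match the gold label, per group."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, gold, predicted in records:
        total[group] += 1
        if predicted == gold:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best-served and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Placeholder records for illustration only.
    records = [
        ("group_a", "positive", "positive"),
        ("group_a", "negative", "negative"),
        ("group_b", "positive", "negative"),
        ("group_b", "neutral", "neutral"),
    ]
    per_group = accuracy_by_group(records)
    print(per_group)                    # {'group_a': 1.0, 'group_b': 0.5}
    print(max_accuracy_gap(per_group))  # 0.5
```

A large gap suggests the model serves some groups worse than others. With small samples, treat the numbers as a prompt for closer manual review rather than as a verdict.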

Discussion on the Effectiveness of Bias Reduction Strategies:

- Share your findings and observations with your peers and discuss the effectiveness of your bias-reduction prompts.

- Reflect on the challenges and limitations of mitigating bias through prompt engineering alone.

- Explore additional strategies, such as data diversification or model fine-tuning, that can complement prompt engineering efforts in reducing bias.

8.7 Prompt Evaluation

Developing effective prompts is an iterative process that involves evaluating the generated outputs and refining the prompts based on the results. By systematically assessing the quality and relevance of the AI-generated responses, you can identify areas for improvement and make targeted adjustments to your prompts. This section provides guidance on how to evaluate the effectiveness of your prompts and iterate on them to achieve better outcomes.

8.7.1 Defining Evaluation Criteria

To evaluate the effectiveness of your prompts, start by defining clear criteria that align with your desired outcomes. These criteria may include:

- Relevance: How well does the generated output address the specific task or question outlined in the prompt?

- Accuracy: Is the information provided in the output factually correct and consistent with the given context?

- Coherence: Does the output maintain a logical flow and structure, with ideas that are well-connected and easy to follow?

- Completeness: Does the output cover all the necessary aspects or components required by the prompt?

- Specificity: Is the output sufficiently detailed and specific to the given context, avoiding generic or vague statements?

- Language Quality: Is the output well-written, grammatically correct, and free of errors or inconsistencies?

By establishing these evaluation criteria upfront, you can systematically assess the strengths and weaknesses of your prompts and the generated outputs.
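The criteria above can be recorded as a simple scoring rubric. This sketch assumes a score from 1 to 5 per criterion (the scale is an assumption, not part of the course material) and reports the average along with the weakest criterion, which is a natural first target for refinement.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Criteria from the list above; the 1-to-5 scale is an assumption.
CRITERIA = (
    "relevance",
    "accuracy",
    "coherence",
    "completeness",
    "specificity",
    "language_quality",
)


@dataclass
class Evaluation:
    prompt_id: str
    scores: Dict[str, int] = field(default_factory=dict)

    def record(self, criterion: str, score: int) -> None:
        if criterion not in CRITERIA:
            raise KeyError(f"unknown criterion: {criterion}")
        if not 1 <= score <= 5:
            raise ValueError("score must be between 1 and 5")
        self.scores[criterion] = score

    def missing(self) -> List[str]:
        return [c for c in CRITERIA if c not in self.scores]

    def average(self) -> float:
        gaps = self.missing()
        if gaps:
            raise ValueError(f"unscored criteria: {gaps}")
        return sum(self.scores[c] for c in CRITERIA) / len(CRITERIA)

    def weakest(self) -> str:
        # Lowest-scoring criterion; ties go to the one listed first in CRITERIA.
        scored = [c for c in CRITERIA if c in self.scores]
        return min(scored, key=self.scores.__getitem__)


if __name__ == "__main__":
    ev = Evaluation("summary_prompt_v1")
    for criterion in CRITERIA:
        ev.record(criterion, 4)
    ev.record("specificity", 2)  # the output was generic
    print(round(ev.average(), 2), ev.weakest())  # 3.67 specificity
```

Refusing to average an incomplete evaluation keeps one unscored criterion from silently inflating or deflating the result.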

8.7.2 Conducting Qualitative Assessments

Once you have generated outputs from your prompts, conduct a qualitative assessment by carefully reviewing the responses against your evaluation criteria. Consider the following approaches:

- Manual Review: Read through the generated outputs and assess them based on the defined criteria. Take notes on what works well and what needs improvement.

- Comparative Analysis: Generate outputs from different prompts or variations of the same prompt and compare them side by side. Identify the elements that contribute to better-quality outputs and those that lead to suboptimal results.

- Blind Evaluation: Have someone else review the outputs without knowing which prompts generated them. Because the reviewer cannot favor a particular prompt, this feedback gives a less biased view of how effective each prompt is.

- User Feedback: If applicable, gather feedback from the intended audience or users of the AI-generated content. Their perspectives can help you understand how well the outputs meet their needs and expectations.

Based on your qualitative assessments, identify patterns or recurring issues in the generated outputs that can be addressed through prompt refinement.
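For comparative analysis and blind evaluation, you can hide which prompt produced which output before handing the outputs to a reviewer. The sketch below shuffles the outputs, relabels them "Output 1", "Output 2", and so on, and keeps an answer key so the reviewer's ratings can be mapped back to prompts afterward. The function names and data shapes are illustrative assumptions.

```python
import random
from typing import Dict, List, Tuple


def blind_batch(
    outputs: Dict[str, str], seed: int = 0
) -> Tuple[List[Tuple[str, str]], Dict[str, str]]:
    """Shuffle outputs and hide which prompt produced each one.

    `outputs` maps a prompt id to the text it generated. Returns the
    anonymized (label, text) items for the reviewer and the answer key
    (label -> prompt id) that the organizer keeps.
    """
    rng = random.Random(seed)  # seeded so the shuffle is reproducible
    prompt_ids = list(outputs)
    rng.shuffle(prompt_ids)
    items: List[Tuple[str, str]] = []
    key: Dict[str, str] = {}
    for position, prompt_id in enumerate(prompt_ids, start=1):
        label = f"Output {position}"
        items.append((label, outputs[prompt_id]))
        key[label] = prompt_id
    return items, key


def unblind(ratings: Dict[str, int], key: Dict[str, str]) -> Dict[str, int]:
    """Map the reviewer's ratings (by label) back to prompt ids."""
    return {key[label]: score for label, score in ratings.items()}


if __name__ == "__main__":
    outputs = {
        "v1_plain": "The product works.",
        "v2_with_context": "The product handled every test case we tried.",
    }
    items, key = blind_batch(outputs, seed=7)
    for label, text in items:
        print(label, "|", text)
    # Suppose the reviewer rates each anonymous output from 1 to 5.
    ratings = {label: 3 for label, _ in items}
    print(unblind(ratings, key))
```

Only the items list goes to the reviewer; the key stays with whoever runs the comparison.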

8.7.3 Iterative Prompt Refinement

Armed with insights from your qualitative assessments, iterate on your prompts to improve the quality and relevance of the generated outputs. Consider the following strategies:

- Clarify Instructions: If the outputs indicate confusion or misinterpretation, clarify the instructions in your prompts. Use concise and specific language to guide the AI model towards the desired outcome.

- Provide More Context: If the outputs lack relevance or specificity, consider providing more context in your prompts. Include additional details, examples, or constraints that can help the AI model generate more focused and accurate responses.

- Adjust Formatting: Experiment with different formatting techniques, such as using bullet points, numbered lists, or specific delimiters, to structure your prompts. Clear and well-organized prompts can lead to more coherent and complete outputs.

- Modify Output Length: If the outputs are too brief or too lengthy, adjust the desired output length in your prompts. Specify the expected word count, number of sentences, or any other relevant constraints to control the level of detail in the generated content.

- Incorporate Examples: If the outputs deviate from the desired style, tone, or format, include examples in your prompts that demonstrate the expected output. By providing concrete illustrations, you can guide the AI model to generate content that aligns with your requirements.

Iterate on your prompts using these strategies and re-evaluate the generated outputs. Repeat the process until the results meet your evaluation criteria.
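The evaluate, refine, and repeat cycle can be expressed as a loop with a stopping rule. In this sketch, `generate`, `score`, and `refine` are hypothetical callables you supply: a model call, a rubric score, and your own edit to the prompt. The demo versions are stand-ins that mimic the "Modify Output Length" strategy by appending a length constraint. Capping the number of rounds keeps the loop from running indefinitely when the target is never reached.

```python
from typing import Callable, List, Tuple


def refine_until(
    prompt: str,
    generate: Callable[[str], str],
    score: Callable[[str], float],
    refine: Callable[[str, str, float], str],
    target: float,
    max_rounds: int = 5,
) -> Tuple[str, float, List[Tuple[str, float]]]:
    """Generate, score, and refine a prompt until it reaches `target`.

    Returns the best prompt seen, its score, and the (prompt, score) history.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    history: List[Tuple[str, float]] = []
    for _ in range(max_rounds):
        output = generate(prompt)
        result = score(output)
        history.append((prompt, result))
        if result >= target:
            break
        prompt = refine(prompt, output, result)
    # max() keeps the earliest prompt among equal scores.
    best_prompt, best_score = max(history, key=lambda item: item[1])
    return best_prompt, best_score, history


# Stand-ins for a real model call, rubric, and editing step.
def fake_generate(prompt: str) -> str:
    return f"[model output for: {prompt}]"


def fake_score(output: str) -> float:
    return 1.0 if "3 sentences" in output else 0.5


def add_length_constraint(prompt: str, output: str, result: float) -> str:
    return prompt + " Answer in 3 sentences."


if __name__ == "__main__":
    best, best_score, history = refine_until(
        "Summarize the article.", fake_generate, fake_score,
        add_length_constraint, target=0.9,
    )
    print(best, best_score, len(history))
```

Returning the full history, not just the final prompt, lets you see which change actually moved the score, which is the insight you need for the next round.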

8.7.4 Continuous Monitoring and Improvement

Evaluating and iterating on prompts is an ongoing process. As you apply your prompts to different contexts or as the AI models evolve, regularly monitor the generated outputs to ensure they maintain the desired level of quality and relevance. Be proactive in identifying and addressing any new issues or opportunities for improvement that arise over time.

By continuously refining your prompts based on evaluation and iteration, you can develop a more systematic and effective approach to prompt engineering. This iterative process helps you create prompts that consistently generate high-quality outputs, saving time and effort in the long run.
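Part of this monitoring can be automated by re-running a fixed set of evaluation inputs on a schedule and comparing the scores with a recorded baseline. The sketch below flags prompts whose recent average score fell by more than a tolerance. The prompt ids, score scale, and tolerance value are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List


def detect_regressions(
    baseline: Dict[str, float],
    recent_runs: Dict[str, List[float]],
    tolerance: float = 0.5,
) -> Dict[str, float]:
    """Flag prompts whose recent mean score dropped below baseline.

    Returns {prompt_id: drop} for every prompt whose recent average fell
    more than `tolerance` below its baseline. Prompts without a baseline
    or without recent scores are skipped.
    """
    flagged: Dict[str, float] = {}
    for prompt_id, scores in recent_runs.items():
        if prompt_id not in baseline or not scores:
            continue
        drop = baseline[prompt_id] - mean(scores)
        if drop > tolerance:
            flagged[prompt_id] = round(drop, 2)
    return flagged


if __name__ == "__main__":
    baseline = {"summarize_v3": 4.5, "qa_v2": 4.0}
    recent = {"summarize_v3": [4.5, 4.4], "qa_v2": [3.0, 3.5]}
    print(detect_regressions(baseline, recent))  # {'qa_v2': 0.75}
```

A flagged prompt is a cue to repeat the qualitative review and refinement steps, for example after a model update.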

Additional reading:

- “Guide to Refining Prompts & AI Prompt Terms” by George Weiner (https://www.wholewhale.com/tips/guide-to-refining-prompts-ai-prompts-terms/)

- “The Art of Prompt Refinement: A Deep Dive into Crafting Better Queries” by Sravani Thota (https://medium.com/@sravani.thota/the-art-of-prompt-refinement-a-deep-dive-into-crafting-better-queries-9f4660113fd2)

8.9 Summary

In this module, we explored various hands-on exercises and demonstrations to reinforce the concepts and techniques covered in the previous modules.

Key Learnings:

- Applying zero-shot, few-shot, and chain-of-thought prompting techniques to real-world tasks such as sentiment analysis, text summarization, and question answering.

- Understanding the process of creating effective prompts and experimenting with different prompt structures and formats.

- Exploring multimodal prompting and its applications in image captioning and visual question answering.

- Addressing bias in AI systems through prompt engineering techniques and discussing strategies for mitigating bias.

- Showcasing impressive AI applications across text, image, video, music, and speech domains.

Hands-on experience is crucial for mastering AI prompting techniques. By actively engaging in the exercises and demonstrations provided in this module, you have gained practical skills and insights that will enable you to leverage AI technologies effectively in your own projects and applications.

As you continue your AI learning journey, it’s essential to stay updated with the latest developments and advancements in the field. AI is a rapidly evolving domain, with new models, techniques, and applications emerging regularly. By following relevant news sites, blogs, and online communities, you can keep abreast of cutting-edge research and innovations in AI.

Looking Ahead: In the next module, Module 9, we will delve into the ethical considerations and challenges associated with AI development and deployment. We will explore topics such as algorithmic bias, privacy concerns, transparency, and accountability in AI systems. Understanding these ethical implications is crucial for responsible and trustworthy AI adoption.