Module 3: Natural Language Processing (NLP) Fundamentals

Natural Language Processing represents a frontier in artificial intelligence, enabling machines to understand and communicate using human language. This module explores the core concepts and techniques that drive this transformative technology.

- Goal: Introduce learners to the core concepts, techniques, and applications of natural language processing (NLP) and its role in enabling machines to understand and generate human language.

- Objective: By the end of this module, learners will be able to define NLP, explain its key components and techniques, and recognize its applications in various domains.

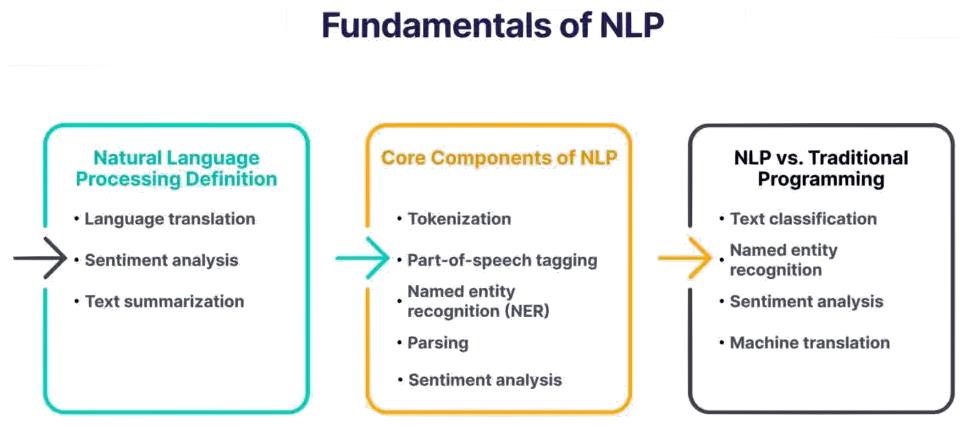

This module provides insights into the foundations of Natural Language Processing (NLP). We begin by defining NLP and its primary goals of enabling language understanding by machines and facilitating human-machine communication.

You’ll learn the key components and techniques central to NLP, including tokenization, part-of-speech tagging, named entity recognition, parsing, and semantic analysis. Understanding how these work is crucial for grasping NLP systems.

The module covers language models like n-grams and neural networks that allow systems to understand and generate natural language. You’ll see their applications as well as the limitations of traditional models.

Sentiment analysis is highlighted as a major use case, focusing on techniques to determine the underlying sentiment in text data. Applications in areas like business intelligence and social media monitoring are discussed.

Finally, the module showcases NLP’s real-world impact across various domains – chatbots, machine translation, text summarization, information retrieval, and healthcare. This demonstrates NLP’s potential to transform human-computer interaction.

By the end, you’ll understand NLP fundamentals, grasp core techniques, see key applications, and appreciate the technology’s growing importance across industries.

- 3.1 Introduction to Natural Language Processing (NLP)

- 3.2 Core Concepts in NLP

- 3.3 Language Models and their Applications

- 3.4 Sentiment Analysis and Opinion Mining

- 3.5 NLP in Practice: Real-World Applications

- 3.6 Summary

3.1 Introduction to Natural Language Processing (NLP)

Natural Language Processing (NLP) is a critical component of artificial intelligence that focuses on enabling machines to understand, interpret, and generate human language. By bridging the gap between human communication and computer understanding, NLP opens up a wide range of possibilities for more intuitive and efficient human-machine interaction.

3.1.1 Definition and goals of NLP

NLP can be defined as a branch of artificial intelligence that deals with the interaction between computers and humans using natural language.

The primary goals of NLP are to:

- Enable machines to understand and interpret human language in its various forms, such as text and speech

- Develop systems that can generate human-like language output, facilitating more natural communication between humans and machines

- Automate language-related tasks and processes, such as translation, summarization, and sentiment analysis

Additional reading:


- “Natural Language Processing (NLP): What Is It & Why Is It Important?” by IBM (https://www.ibm.com/cloud/learn/natural-language-processing)

3.1.2 Importance of NLP in AI and human-machine interaction

NLP plays a crucial role in making AI systems more accessible, user-friendly, and adaptable to human needs. By allowing machines to understand and respond to human language, NLP enables the development of more sophisticated and intuitive AI applications, such as:

- Virtual assistants and chatbots that can engage in human-like conversations

- Intelligent search engines that can understand the intent behind a user’s query

- Automated customer support systems that can handle a wide range of inquiries

- Language translation tools that can break down communication barriers

As AI becomes increasingly integrated into our daily lives, NLP will continue to be a key driver in shaping the future of human-machine interaction.

3.1.3 Overview of NLP tasks and applications

NLP encompasses a wide array of tasks and applications, each focusing on a specific aspect of language understanding or generation. Some of the most common NLP tasks include:

- Text classification: Assigning predefined categories to a given text based on its content

- Named entity recognition: Identifying and classifying named entities, such as people, organizations, and locations, within a text

- Sentiment analysis: Determining the sentiment or emotional tone of a piece of text

- Machine translation: Automatically translating text from one language to another

- Text summarization: Generating a concise summary of a longer text while retaining its key information

- Question answering: Providing accurate answers to questions posed in natural language

3.2 Core Concepts in NLP

To effectively understand and work with NLP systems, it is essential to grasp the core concepts and techniques that underpin the field. This section will introduce some of the fundamental building blocks of NLP, including tokenization, part-of-speech tagging, named entity recognition, syntactic parsing, and semantic analysis.

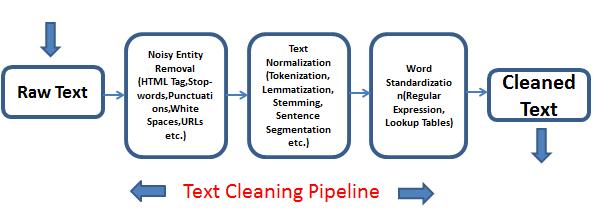

3.2.1 Tokenization and text preprocessing

Tokenization is the process of breaking down a piece of text into smaller units called tokens, which can be individual words, phrases, or even characters. This is a crucial step in many NLP tasks, as it helps to standardize the input text and make it more manageable for further processing.

Text preprocessing involves cleaning and normalizing the tokenized text to remove any noise or inconsistencies that could affect the performance of NLP algorithms.

Common preprocessing steps include:

- Lowercasing: Converting all characters to lowercase to ensure consistency

- Removing punctuation and special characters: Eliminating non-alphanumeric characters that may not contribute to the meaning of the text

- Stemming and lemmatization: Reducing words to their base or dictionary forms to handle inflectional variations

Example:

Original text: “The quick brown fox jumps over the lazy dog.”

Tokenized and preprocessed text: [“the”, “quick”, “brown”, “fox”, “jump”, “over”, “the”, “lazy”, “dog”]
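The preprocessing steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library. The `naive_stem` helper is a hypothetical stand-in that strips a trailing "s"; it is not a real stemming algorithm, and practical systems typically rely on an NLP library for this step.

```python
import re

def naive_stem(token):
    """Toy stemmer (assumption for illustration): strip a trailing 's'."""
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        return token[:-1]
    return token

def preprocess(text):
    lowered = text.lower()                      # lowercasing
    tokens = re.findall(r"[a-z0-9]+", lowered)  # tokenize; punctuation is dropped
    return [naive_stem(t) for t in tokens]      # crude stemming

print(preprocess("The quick brown fox jumps over the lazy dog."))
# ['the', 'quick', 'brown', 'fox', 'jump', 'over', 'the', 'lazy', 'dog']
```

Note that the order of operations matters: lowercasing happens before tokenization here so that the regular expression only needs to match lowercase characters.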

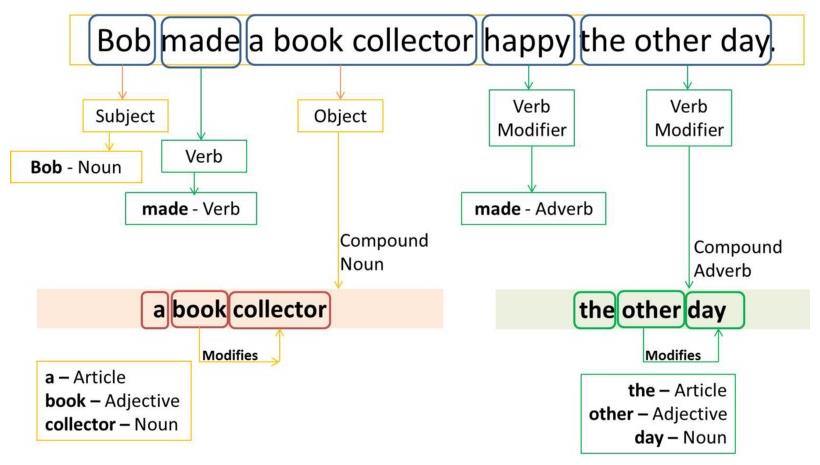

3.2.2 Part-of-speech (POS) tagging

Part-of-speech tagging is the process of assigning a grammatical category (e.g., noun, verb, adjective) to each word in a text based on its definition and context. POS tagging helps to disambiguate words that may have different meanings depending on their usage, and it provides valuable information for downstream NLP tasks, such as syntactic parsing and named entity recognition.

Example:

Original text: “The quick brown fox jumps over the lazy dog.”

POS-tagged text: [(“The”, DET), (“quick”, ADJ), (“brown”, ADJ), (“fox”, NOUN), (“jumps”, VERB), (“over”, ADP), (“the”, DET), (“lazy”, ADJ), (“dog”, NOUN)]
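One simple baseline for POS tagging is a unigram tagger, which assigns each word the tag it received most often in a hand-tagged corpus. The sketch below assumes a tiny, made-up training corpus (`TAGGED_CORPUS`) and a default tag of `NOUN` for unknown words. Unlike the context-sensitive tagging described above, it ignores the surrounding words, so it cannot disambiguate a word that takes different tags in different contexts.

```python
from collections import Counter, defaultdict

# Tiny hand-tagged corpus (hypothetical data for illustration only).
TAGGED_CORPUS = [
    [("the", "DET"), ("dog", "NOUN"), ("jumps", "VERB")],
    [("the", "DET"), ("quick", "ADJ"), ("fox", "NOUN"), ("jumps", "VERB"),
     ("over", "ADP"), ("the", "DET"), ("lazy", "ADJ"), ("dog", "NOUN")],
    [("a", "DET"), ("brown", "ADJ"), ("fox", "NOUN")],
]

def train_unigram_tagger(corpus):
    """Map each word to the tag it carries most often in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}

def tag(words, model, default="NOUN"):
    """Tag each word by lookup; unknown words get the default tag."""
    return [(w, model.get(w.lower(), default)) for w in words]

model = train_unigram_tagger(TAGGED_CORPUS)
sentence = ["The", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog"]
print(tag(sentence, model))
```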

Additional reading:


- “Part-of-Speech Tagging with NLTK” by Real Python (https://realpython.com/nltk-nlp-python/#part-of-speech-tagging-with-nltk)

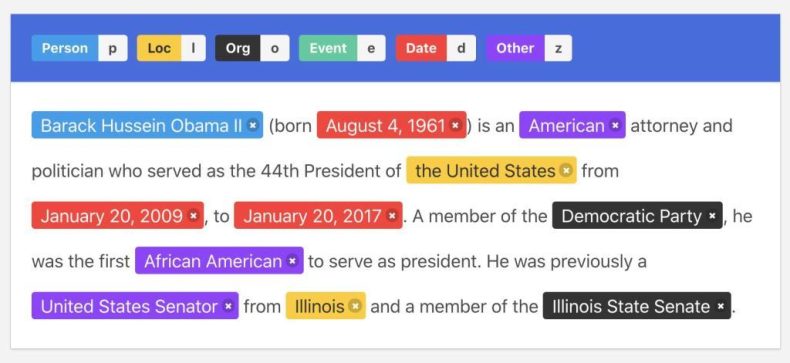

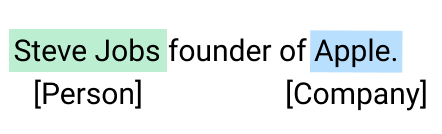

3.2.3 Named entity recognition (NER)

Named entity recognition is the task of identifying and classifying named entities mentioned in a text into predefined categories, such as person names, organizations, locations, dates, and quantities. NER is essential for information extraction and understanding the semantic content of a text.

Example:

Original text: “Apple Inc. is headquartered in Cupertino, California.”

NER-tagged text: [(“Apple Inc.”, ORGANIZATION), (“Cupertino”, LOCATION), (“California”, LOCATION)]
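A very simple way to approximate NER is a gazetteer lookup, where known entity names are matched against the text. The sketch below assumes a hypothetical three-entry gazetteer and does no word-boundary checking. Real NER systems usually learn from annotated data so they can also recognize names that appear in no list.

```python
# Hypothetical gazetteer: known entity names and their categories.
GAZETTEER = {
    "Apple Inc.": "ORGANIZATION",
    "Cupertino": "LOCATION",
    "California": "LOCATION",
}

def find_entities(text, gazetteer):
    """Scan left to right, preferring the longest matching name at each position."""
    names = sorted(gazetteer, key=len, reverse=True)
    entities = []
    i = 0
    while i < len(text):
        for name in names:
            if text.startswith(name, i):
                entities.append((name, gazetteer[name]))
                i += len(name)  # skip past the matched entity
                break
        else:
            i += 1  # no entity starts here; move on one character
    return entities

print(find_entities("Apple Inc. is headquartered in Cupertino, California.", GAZETTEER))
# [('Apple Inc.', 'ORGANIZATION'), ('Cupertino', 'LOCATION'), ('California', 'LOCATION')]
```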

Additional reading:


- “Named Entity Recognition: A Practitioners’ Guide to NLP” by Towards Data Science (https://towardsdatascience.com/named-entity-recognition-a-practitioners-guide-to-nlp-7b9eeb92c8d3)

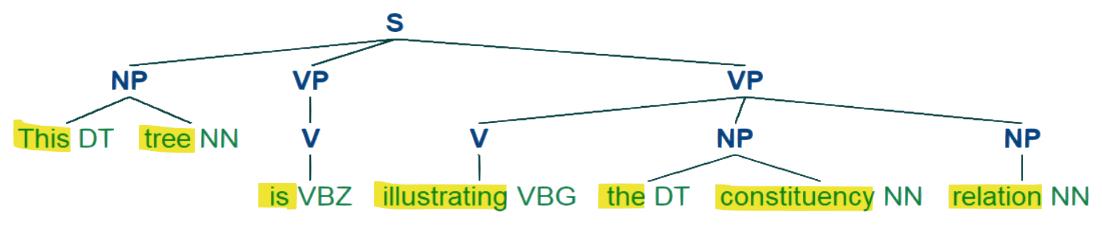

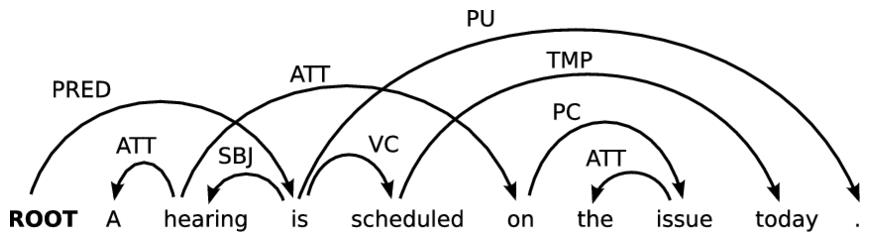

3.2.4 Syntactic parsing and dependency parsing

Syntactic parsing, here in the sense of constituency parsing, is the process of analyzing the grammatical structure of a sentence and representing it as a tree-like hierarchy of nested phrases. This helps to uncover the underlying relationships between words and phrases in a sentence.

Dependency parsing, on the other hand, focuses on the direct relationships between words in a sentence, representing them as a graph of dependencies. This approach emphasizes the functional roles of words and how they relate to each other, which can be useful for tasks like information extraction and question answering.

Example:

Original text: “The quick brown fox jumps over the lazy dog.”

Syntactic parse tree: (S (NP (DET The) (ADJ quick) (ADJ brown) (NOUN fox)) (VP (VERB jumps) (PP (ADP over) (NP (DET the) (ADJ lazy) (NOUN dog)))))

Dependency parse graph (head -> dependent): jumps -> fox; fox -> The; fox -> quick; fox -> brown; jumps -> over; over -> dog; dog -> the; dog -> lazy
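The dependency graph in this example can be stored as a list of (head, dependent) pairs. The sketch below is illustrative only: it keys nodes by word string, which works here because "The" and "the" differ in case, whereas real parsers identify tokens by position. It finds the root (the one word that is never a dependent) and collects the words governed by a given head.

```python
from collections import defaultdict

# (head, dependent) pairs copied from the example above.
EDGES = [
    ("jumps", "fox"), ("fox", "The"), ("fox", "quick"), ("fox", "brown"),
    ("jumps", "over"), ("over", "dog"), ("dog", "the"), ("dog", "lazy"),
]

def build_tree(edges):
    """Return the root words and a head -> dependents mapping."""
    children = defaultdict(list)
    dependents = set()
    for head, dep in edges:
        children[head].append(dep)
        dependents.add(dep)
    roots = [head for head in children if head not in dependents]
    return roots, dict(children)

def subtree(word, children):
    """Collect a word and everything it governs, depth first."""
    words = [word]
    for child in children.get(word, []):
        words.extend(subtree(child, children))
    return words

roots, children = build_tree(EDGES)
print(roots)                      # ['jumps']
print(subtree("over", children))  # ['over', 'dog', 'the', 'lazy']
```

Extracting the subtree under "over" recovers the prepositional phrase, which is the kind of operation information extraction systems perform on dependency parses.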

Additional reading:


- “Syntactic Parsing and Dependency Parsing” by Analytics Vidhya (https://www.analyticsvidhya.com/blog/2021/06/syntactic-parsing-and-dependency-parsing/)

3.2.5 Semantic analysis and word embeddings

Semantic analysis focuses on understanding the meaning of words, phrases, and sentences in a text. This involves identifying the relationships between words, disambiguating polysemous words (words with multiple meanings), and determining the overall sentiment or emotion expressed in the text.

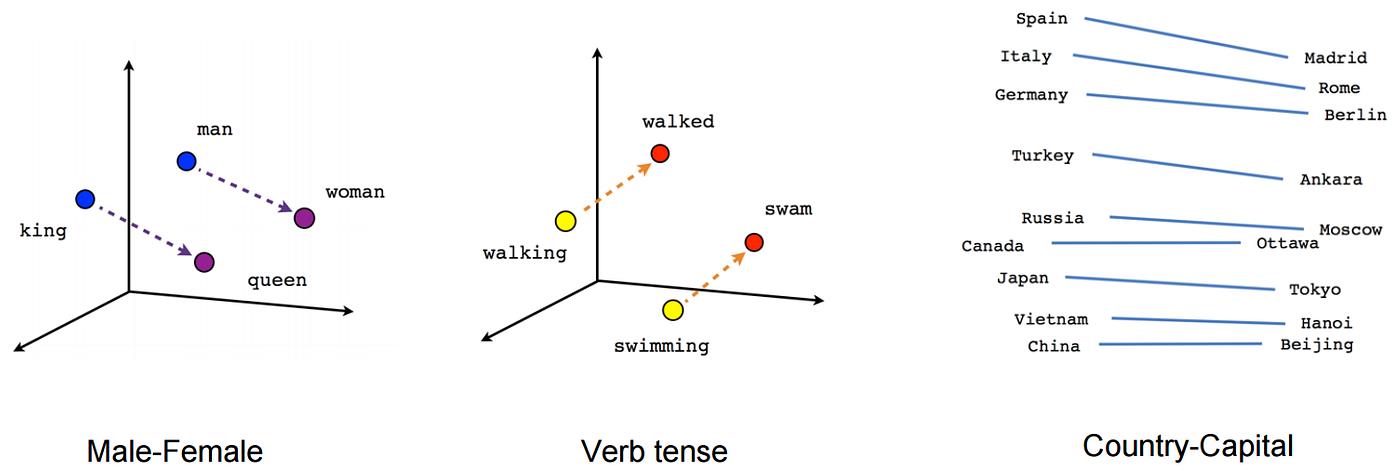

Word embeddings are a powerful technique for representing words as dense vectors in a continuous vector space (typically a few hundred dimensions), capturing their semantic and syntactic properties. By mapping words to vectors, word embeddings enable NLP systems to perform mathematical operations on words and understand their relationships, such as analogies and similarities.

Example:

Word embeddings can capture relationships like:

- king – man + woman ≈ queen

- paris – france + italy ≈ rome

Popular word embedding models include Word2Vec, GloVe, and FastText, which learn word vectors from large corpora of text data.
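The analogy arithmetic can be illustrated with cosine similarity over vectors. The four-dimensional vectors below are hand-made toy values (assumed dimensions: royalty, male, female, fruit), not output from Word2Vec, GloVe, or FastText. Learned embeddings have many more dimensions and only approximate such relationships.

```python
import math

# Toy vectors (hypothetical): [royalty, male, female, fruit]
VECTORS = {
    "king":  [1.0, 1.0, 0.0, 0.0],
    "queen": [1.0, 0.0, 1.0, 0.0],
    "man":   [0.0, 1.0, 0.0, 0.0],
    "woman": [0.0, 0.0, 1.0, 0.0],
    "apple": [0.0, 0.0, 0.0, 1.0],
}

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def analogy(a, b, c, vectors):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

print(analogy("king", "man", "woman", VECTORS))  # queen
```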

Additional reading:


- “Introduction to Word Embeddings” by Machine Learning Mastery (https://machinelearningmastery.com/introduction-to-word-embeddings/)

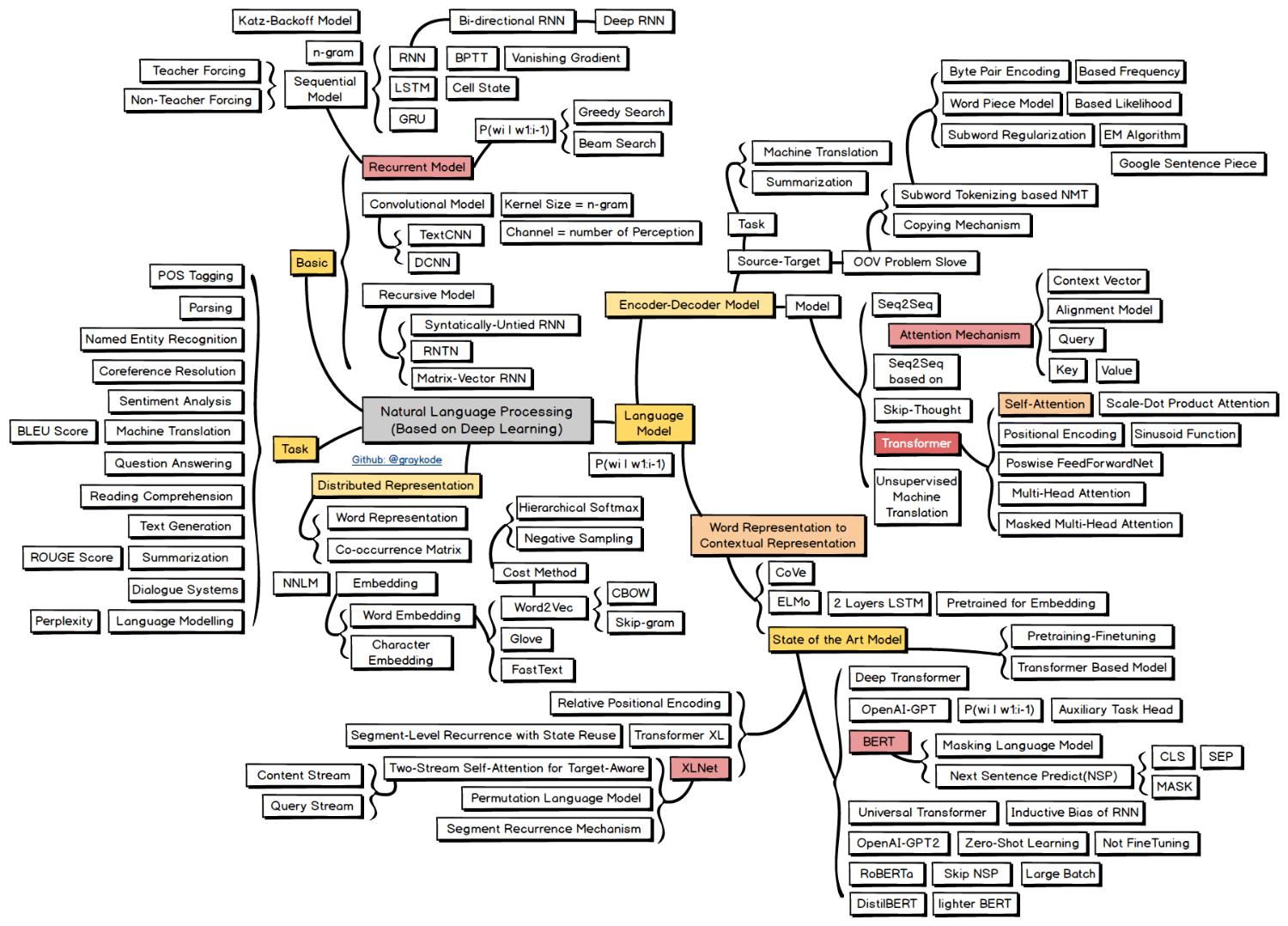

3.3 Language Models and their Applications

Language models are a crucial component of many NLP systems, as they enable machines to understand and generate human-like language. This section will introduce the concept of language models, discuss their applications in various NLP tasks, and explore the limitations and challenges of traditional language models.

3.3.1 Introduction to language models (e.g., n-gram models, neural language models)

A language model is a probability distribution over sequences of words, assigning a likelihood to each possible sequence based on the patterns observed in the training data. The goal of a language model is to capture the statistical properties of a language, enabling it to predict the most likely next word in a sequence or generate coherent text.

Two main types of language models are:

- N-gram models

These models estimate the probability of a word occurring based on the preceding n-1 words in a sequence. N-gram models are computationally efficient and straightforward, but they have limitations in capturing long-range dependencies and generating diverse textual outputs.

- N-grams are contiguous sequences of n words extracted from a text corpus. They are constructed by sliding a window of length n across the text and recording the words inside the window at each step. The window usually advances one word at a time, although a larger step of k words is also possible.

- Common examples of n-grams include unigrams (single words), bigrams (two-word sequences), trigrams (three-word sequences), four-grams (four-word sequences), and five-grams (five-word sequences). Higher-order n-grams, such as six-grams, seven-grams, and beyond, can also be constructed, but they become increasingly sparse and computationally expensive as the value of n increases.

- Neural language models

A neural language model (NLM) is a language model that uses neural networks to predict the likelihood of a sequence of words. NLMs are trained on large amounts of text and can learn the language’s underlying structure. They are a fundamental part of many systems for natural language processing tasks such as machine translation and speech recognition, and they can capture more complex patterns and generate more coherent text than n-gram models. Architectures, models, and related concepts you will encounter in this context include:

- Word embeddings: Representations that map words to numerical vectors. This allows for finding similar vectors and vector clusters, and then reversing the mapping to get relevant linguistic information.

- Recurrent neural network (RNN): A type of artificial neural network (ANN) that processes sequential data one element at a time, carrying a hidden state forward to inform later predictions. RNNs are used in natural language processing (NLP) and speech recognition.

- Long Short-Term Memory (LSTM): A type of recurrent neural network that is well suited to modeling sequential data. Its gating mechanisms help it retain information over longer sequences than a basic RNN.

- Bidirectional Encoder Representations from Transformers (BERT): A natural language processing model that uses transformers to perform a variety of NLP tasks, including question answering, natural language inference, and named entity recognition.

- Masked language model: A neural network that is trained by predicting blanked-out words in texts, adjusting the strength of the connections between its layered computing elements to reduce prediction error.

- Convolutional neural network (CNN): A type of deep learning model that applies learned filters over local windows of the input. In NLP, these windows span a few neighboring words.

- Transformer encoder–decoder architecture: An architecture commonly used for natural language generation (NLG) tasks, which aim to generate a coherent, meaningful, and human-like natural language expression according to specific inputs.

- Neural machine translation: A form of machine translation that uses deep learning models to deliver more accurate and natural-sounding translations than traditional statistical and rule-based translation algorithms.

- Probabilistic models: Statistical models that use probabilities and statistical data to make predictions and understand complex relationships in data. Probabilistic models are used in a variety of fields, including natural language processing, computer vision, and machine learning.
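To make the n-gram models described earlier in this section concrete, the sketch below extracts n-grams with a sliding window (step of one word) and estimates a bigram probability by simple counting (maximum likelihood). The six-word corpus is a made-up example. A real model would be trained on a large corpus and would need smoothing to handle unseen word pairs.

```python
from collections import Counter

def ngrams(tokens, n):
    """Slide a window of length n across the tokens, one word at a time."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def bigram_probability(tokens, prev, word):
    """Estimate P(word | prev) as count(prev, word) / count(prev, anything)."""
    bigram_counts = Counter(ngrams(tokens, 2))
    prefix_count = sum(c for (first, _), c in bigram_counts.items() if first == prev)
    if prefix_count == 0:
        return 0.0  # prev never appears with a following word
    return bigram_counts[(prev, word)] / prefix_count

tokens = "the cat sat on the mat".split()
print(ngrams(tokens, 2))
# [('the', 'cat'), ('cat', 'sat'), ('sat', 'on'), ('on', 'the'), ('the', 'mat')]
print(bigram_probability(tokens, "the", "cat"))  # 0.5
```

Here "the" is followed once by "cat" and once by "mat", so each continuation receives probability 0.5.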

Additional reading:


- “Language Modeling with Deep Learning” by Lil’Log (https://lilianweng.github.io/lil-log/2019/01/31/generalized-language-models.html)

3.3.2 Applications of language models in NLP tasks (e.g., text generation, machine translation)

Language models have a wide range of applications in NLP tasks, including:

- Text generation: Language models can be used to generate human-like text, such as product descriptions, news articles, or creative writing. By sampling from the learned probability distribution, language models can produce novel sequences that resemble the patterns observed in the training data.

- Machine translation: Language models are a key component of machine translation systems, helping to ensure the fluency and coherence of the translated text. By incorporating language models into the translation process, systems can generate more natural-sounding translations that better capture the structure and style of the target language.

- Speech recognition: Language models help to improve the accuracy of speech recognition systems by providing context and constraining the search space of possible word sequences. By assigning higher probabilities to more likely word sequences, language models can disambiguate phonetically similar words and enhance the overall performance of speech recognition.

- Text classification and sentiment analysis: Language models can be used as feature extractors for text classification and sentiment analysis tasks. By learning a dense representation of the input text, language models can capture semantic and syntactic information that can be used as input to downstream classification models, improving their accuracy and generalization.
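Text generation by sampling from a learned distribution, as described in the first item above, can be sketched with a bigram model. The example below assumes a tiny made-up corpus and a fixed random seed. It stores every observed follower of each word, so choosing uniformly from that list samples continuations in proportion to their observed frequency.

```python
import random
from collections import defaultdict

def train_bigram_model(tokens):
    """Record every word that follows each word (duplicates keep frequencies)."""
    followers = defaultdict(list)
    for prev, nxt in zip(tokens, tokens[1:]):
        followers[prev].append(nxt)
    return followers

def generate(followers, start, max_words, rng):
    """Sample one word at a time until max_words or a word with no followers."""
    words = [start]
    while len(words) < max_words and followers.get(words[-1]):
        words.append(rng.choice(followers[words[-1]]))
    return words

tokens = "the cat sat on the mat and the dog sat on the rug".split()
model = train_bigram_model(tokens)
print(" ".join(generate(model, "the", 8, random.Random(0))))
```

Every adjacent word pair in the output was seen in the training text, yet the sequence as a whole may be new. The output is locally plausible but has no global plan, which illustrates the limited context of such models.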

Additional reading:


- “Applications of Language Models” by Hugging Face (https://huggingface.co/course/chapter1/3?fw=pt)

3.3.3 Limitations and challenges of traditional language models

Despite their usefulness in many NLP tasks, traditional language models face several limitations and challenges:

- Limited context: N-gram models and early neural language models struggle to capture long-range dependencies and global context, as they rely on local word sequences to make predictions. This can lead to inconsistencies and lack of coherence in generated text.

- Data sparsity: As the size of the vocabulary and the length of the sequences increase, traditional language models require exponentially more training data to estimate the probabilities accurately. This can be particularly challenging for languages with rich morphology or complex syntactic structures.

- Lack of common sense and world knowledge: Traditional language models are trained solely on text data and do not have access to real-world knowledge or understanding. This can result in generated text that is grammatically correct but semantically nonsensical or inconsistent with common sense.

- Bias and fairness: Language models can inherit and amplify biases present in the training data, leading to generated text that reflects and perpetuates social stereotypes and discriminatory language. Addressing these biases and ensuring fairness in language models is an ongoing challenge in NLP research.
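The data sparsity problem listed above can be made concrete with a little arithmetic. With a vocabulary of V words there are V to the power n possible n-grams, so the share of them that any corpus actually contains shrinks quickly as n grows. The sketch below uses a made-up six-word corpus purely for illustration.

```python
def possible_ngrams(vocab_size, n):
    """Number of distinct n-grams that could exist for a given vocabulary size."""
    return vocab_size ** n

def observed_fraction(tokens, n):
    """Share of all possible n-grams that actually occur in the tokens."""
    seen = {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}
    return len(seen) / possible_ngrams(len(set(tokens)), n)

tokens = "the cat sat on the mat".split()
for n in (1, 2, 3):
    print(n, possible_ngrams(len(set(tokens)), n), round(observed_fraction(tokens, n), 3))
# 1 5 1.0
# 2 25 0.2
# 3 125 0.032

print(possible_ngrams(10_000, 3))  # 1000000000000 possible trigrams
```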

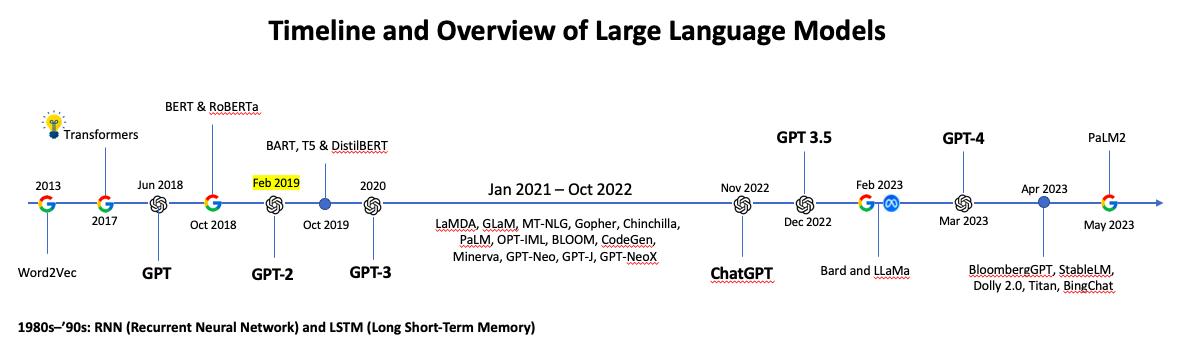

To overcome these limitations, researchers have developed more advanced language models, such as transformer-based models like BERT and GPT, which will be discussed in the next module on large language models (LLMs).

Additional reading:


- “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” by Bender et al. (https://dl.acm.org/doi/10.1145/3442188.3445922)

3.4 Sentiment Analysis and Opinion Mining

Sentiment analysis, also known as opinion mining, is a key application of NLP that focuses on determining the sentiment, emotion, or opinion expressed in a piece of text. This section will discuss the importance of sentiment analysis, explore various techniques for performing sentiment analysis, and highlight its applications in business and social media.

3.4.1 Understanding sentiment analysis and its importance

Sentiment analysis involves classifying a given text as positive, negative, or neutral based on the overall sentiment expressed in it. This can be done at various levels of granularity, such as:

- Document-level: Assigning a sentiment label to an entire document or review

- Sentence-level: Determining the sentiment of each sentence within a document

- Aspect-level: Identifying the sentiment towards specific aspects or features mentioned in the text

Sentiment analysis is important because it enables businesses, organizations, and individuals to gain valuable insights from large volumes of unstructured text data, such as:

- Customer reviews and feedback: Analyzing the sentiment of customer reviews can help businesses understand the strengths and weaknesses of their products or services, identify areas for improvement, and make data-driven decisions.

- Social media monitoring: Tracking the sentiment of social media posts and conversations can help organizations gauge public opinion, detect potential crises, and measure the impact of their marketing campaigns.

- Market research and competitive analysis: Sentiment analysis can be used to analyze the sentiment towards specific brands, products, or topics, providing valuable insights for market research and competitive analysis.

3.4.2 Techniques for sentiment analysis (e.g., rule-based, machine learning-based)

There are two main approaches to performing sentiment analysis:

- Rule-based approaches: These methods rely on manually crafted rules and sentiment lexicons to determine the sentiment of a text. Rules can be based on the presence of specific keywords, phrases, or linguistic patterns that are associated with positive, negative, or neutral sentiment. While rule-based approaches are interpretable and can be effective for domain-specific applications, they require significant manual effort and may struggle with complex or ambiguous expressions of sentiment.

- Machine learning-based approaches: These methods use supervised learning algorithms, such as Naive Bayes, Support Vector Machines (SVM), or deep learning models, to learn the patterns and features associated with different sentiment labels from labeled training data. Machine learning-based approaches can automatically learn more complex and nuanced expressions of sentiment and adapt to new domains or languages with sufficient training data. However, they require a large amount of labeled data and may be less interpretable than rule-based methods.
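A rule-based approach can be sketched with a small sentiment lexicon and one hand-written rule. The word lists and the negation rule below (a negation word flips the polarity of the word immediately after it) are assumptions chosen for illustration; real lexicons contain thousands of entries and many more rules.

```python
import re

# Hypothetical mini-lexicons for illustration.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "terrible", "hate", "poor"}
NEGATIONS = {"not", "never", "no"}

def rule_based_sentiment(text):
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = (token in POSITIVE) - (token in NEGATIVE)  # +1, -1, or 0
        if polarity and i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(rule_based_sentiment("I love this phone"))        # positive
print(rule_based_sentiment("The battery is not good"))  # negative
print(rule_based_sentiment("It arrived on Tuesday"))    # neutral
```

The rules are easy to inspect, but the sketch already shows the weakness noted above: any phrasing the rule authors did not anticipate is scored as neutral or misclassified.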

Hybrid approaches that combine rule-based and machine learning-based techniques are also common, leveraging the strengths of both methods to improve the accuracy and robustness of sentiment analysis systems.
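The machine learning-based approach can be sketched with a Naive Bayes classifier written from scratch. The four training examples are made up, and the add-one (Laplace) smoothing is an assumption of this sketch, used so that a word unseen in one class does not produce a zero probability. A practical system would train on far more labeled data, usually with an established library.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled training data.
TRAIN = [
    ("great phone love it", "positive"),
    ("excellent battery great screen", "positive"),
    ("terrible phone hate it", "negative"),
    ("poor battery bad screen", "negative"),
]

def train_naive_bayes(examples):
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in text.split():
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab

def predict(text, model):
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in label_counts.items():
        score = math.log(doc_count / total_docs)  # log prior
        total_words = sum(word_counts[label].values())
        for word in text.split():
            if word in vocab:  # words never seen in training are ignored
                # add-one smoothed log likelihood
                score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train_naive_bayes(TRAIN)
print(predict("great screen", model))      # positive
print(predict("terrible battery", model))  # negative
```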

Additional reading:


- “Sentiment Analysis: A Definitive Guide” by MonkeyLearn (https://monkeylearn.com/sentiment-analysis/)

3.5 NLP in Practice: Real-World Applications

Natural Language Processing has numerous real-world applications across various domains, demonstrating its potential to transform industries and improve human-machine interaction. This section will explore some of the most prominent applications of NLP, including chatbots and virtual assistants, machine translation, text summarization, information retrieval, and healthcare and biomedical NLP applications.

The following sections provide an overview of NLP applications in various domains, illustrating the versatility and potential impact of these techniques. These examples will be explored in greater depth, with specific case studies and tools, in Module 5: Real-World Applications of AI and Language-Based Systems.

3.5.1 Chatbots and virtual assistants

Chatbots and virtual assistants are AI-powered systems that use NLP to understand and respond to user queries in natural language. These systems can be integrated into websites, messaging platforms, or stand-alone applications to provide 24/7 customer support, answer frequently asked questions, or assist users with various tasks.

Key advantages of chatbots and virtual assistants include:

- Improved customer experience: Chatbots can provide instant and personalized support to customers, reducing wait times and improving satisfaction.

- Cost savings: Automating customer support with chatbots can significantly reduce labor costs and enable human agents to focus on more complex issues.

- Scalability: Chatbots can handle a large volume of queries simultaneously, making them ideal for businesses with high customer interaction.

Examples of chatbots and virtual assistants include:

- Customer service chatbots: Many companies use chatbots to handle common customer inquiries, such as order status, returns, and product information.

- Personal assistants: Virtual assistants like Apple’s Siri, Amazon’s Alexa, and Google Assistant use NLP to understand and respond to user commands, perform tasks, and provide information.

- Mental health chatbots: Some organizations use chatbots to provide initial mental health support, offer coping strategies, and connect users with professional help when needed.

Additional reading:


- “Chatbots: A Comprehensive Guide” by IBM (https://www.ibm.com/watson/assistant/chatbot)

3.5.2 Machine translation and multilingual NLP

Machine translation involves using NLP techniques to automatically translate text or speech from one language to another. This has become increasingly important in today’s globalized world, enabling communication and information sharing across language barriers.

Machine translation systems can be classified into three main types:

- Rule-based machine translation (RBMT): These systems use manually crafted linguistic rules and dictionaries to analyze the source language and generate the target language. While RBMT systems can produce grammatically correct translations, they often struggle with capturing context and idiomatic expressions.

- Statistical machine translation (SMT): These systems learn the probability of word or phrase mappings between the source and target languages from large parallel corpora. SMT systems can handle more complex translations than RBMT but still face challenges with long-range dependencies and rare words.

- Neural machine translation (NMT): These systems use deep learning models, such as sequence-to-sequence models or transformers, to learn the mapping between the source and target languages. NMT systems can capture more context and produce more fluent translations than previous approaches but require large amounts of training data and computational resources.

Multilingual NLP involves developing NLP systems that can handle multiple languages, either by training separate models for each language or by using language-agnostic models that can process any language. This is particularly important for low-resource languages that may not have sufficient labeled data for traditional NLP approaches.

Applications of machine translation and multilingual NLP include:

- Global business communication: Machine translation enables companies to communicate with customers, partners, and employees across different languages, facilitating international trade and collaboration.

- Cross-lingual information retrieval: Multilingual NLP techniques allow users to search for information in one language and retrieve relevant documents in other languages, expanding access to knowledge and resources.

- Language learning and education: Machine translation can be used to create bilingual learning materials, assist language learners, and facilitate cross-cultural exchange in educational settings.

Additional reading:


- “Machine Translation: A Review” by Poibeau et al. (https://doi.org/10.1016/j.csl.2019.101055)

3.5.3 Text summarization and content generation

Text summarization involves using NLP techniques to generate a concise summary of a longer text while preserving the most important information. This is particularly useful for quickly digesting large amounts of information, such as news articles, research papers, or legal documents.

There are two main approaches to text summarization:

- Extractive summarization: This method selects the most relevant sentences or phrases from the original text and combines them to form a summary. Extractive methods rely on techniques like sentence scoring, keyword extraction, and graph-based ranking to identify the most informative parts of the text.

- Abstractive summarization: This method generates a summary by understanding the content of the original text and creating new sentences that capture the main ideas. Abstractive methods often use deep learning models, such as sequence-to-sequence models or transformers, to generate fluent and coherent summaries.
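Extractive summarization by sentence scoring can be sketched as follows. This is one simple scoring scheme among many: each sentence is scored by the average corpus frequency of its non-stopword tokens, and the top-scoring sentences are returned in their original order. The stopword list and the regular-expression sentence splitter are simplifying assumptions.

```python
import re
from collections import Counter

# Small hypothetical stopword list.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "it", "on", "for"}

def content_words(text):
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def summarize(text, num_sentences=1):
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    top = sorted(sentences, key=score, reverse=True)[:num_sentences]
    return [s for s in sentences if s in top]  # restore original order

text = ("NLP systems process text. NLP systems also summarize text automatically. "
        "The weather was nice.")
print(summarize(text, 1))  # ['NLP systems process text.']
```

Because the summary reuses sentences verbatim, it is always grammatical, but it cannot merge or rephrase ideas the way an abstractive method can.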

Content generation involves using NLP techniques to automatically create written content, such as articles, reports, or product descriptions. This can be done using language models that are trained on large corpora of text data and can generate human-like text based on a given prompt or context.

Applications of text summarization and content generation include:

- News aggregation and summarization: Text summarization can be used to generate concise summaries of news articles or events, helping users stay informed without having to read lengthy articles.

- Meeting and lecture notes: Automatic summarization can be applied to transcripts of meetings, lectures, or conference calls to generate key takeaways and action items.

- Content creation and curation: NLP-powered content generation can assist writers, journalists, and marketers in creating draft articles, product descriptions, or social media posts, saving time and effort in the content creation process.

Additional reading:


- “Automatic Text Summarization: A Comprehensive Review” by Gupta and Gupta (https://doi.org/10.1016/j.eswa.2019.05.032)

3.5.4 Information retrieval and search engines

Information retrieval (IR) is the process of finding relevant information within a large collection of unstructured data, such as documents or web pages. NLP plays a crucial role in modern IR systems, enabling them to understand user queries, analyze document content, and rank search results based on relevance.

Key components of an NLP-powered IR system include:

- Query understanding: NLP techniques like named entity recognition, part-of-speech tagging, and semantic analysis help IR systems understand the intent and context behind user queries, improving the accuracy and relevance of search results.

- Document processing: NLP methods such as tokenization, stemming, and topic modeling are used to analyze and index the content of documents, making them searchable and retrievable based on their semantic content.

- Relevance ranking: NLP-based relevance models, such as TF-IDF, BM25, or learning-to-rank algorithms, are used to score and rank search results based on their relevance to the user query, taking into account factors like keyword frequency, document structure, and semantic similarity.
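Relevance ranking with TF-IDF can be sketched in a few lines. TF-IDF has several variants; this sketch assumes term frequency normalized by document length and an unsmoothed inverse document frequency of log(N / df). The three documents and the query are made up for illustration.

```python
import math
from collections import Counter

def tf_idf_rank(query, documents):
    """Return document indices ordered from most to least relevant."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term occur?
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for index, tokens in enumerate(tokenized):
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term in tf:
                idf = math.log(n_docs / df[term])  # rarer terms weigh more
                score += (tf[term] / len(tokens)) * idf
        scores.append((score, index))
    # Highest score first; ties broken by original position.
    return [index for score, index in sorted(scores, key=lambda p: (-p[0], p[1]))]

documents = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "stock markets fell sharply today",
]
print(tf_idf_rank("dog cat", documents))  # [1, 0, 2]
```

The second document ranks first because it contains both query terms, and "dog" is the rarer of the two, so it contributes the larger weight.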

Search engines are the most prominent application of information retrieval, enabling users to find relevant web pages, images, videos, or other content based on their queries. NLP is a key enabler of modern search engines, powering features like:

- Query suggestion and auto-completion: NLP models predict and suggest relevant queries based on user input, helping users find information more quickly and easily.

- Synonym expansion and query reformulation: NLP techniques identify synonyms and related terms to expand the user query, improving the recall and diversity of search results.

- Featured snippets and knowledge panels: NLP methods extract and summarize relevant information from top-ranked pages to provide direct answers to user queries, enhancing the search experience and reducing the need for users to click through to individual pages.

Additional reading:


- “Modern Information Retrieval: The Concepts and Technology behind Search” (2nd edition) by Baeza-Yates and Ribeiro-Neto (https://www.pearson.com/us/higher-education/program/Baeza-Yates-Modern-Information-Retrieval-The-Concepts-and-Technology-behind-Search-2nd-Edition/PGM320237.html)

3.5.5 Healthcare and biomedical NLP applications

NLP applications in the healthcare and biomedical domains range from research and clinical decision support to patient safety monitoring and administrative tasks. They enable the extraction of valuable insights from unstructured medical data, such as clinical notes, patient records, and scientific literature. Some key applications include:

- Clinical decision support: NLP systems can analyze patient data and provide evidence-based recommendations to physicians, assisting in diagnosis, treatment planning, and risk assessment.

- Medical language processing: NLP techniques are used to process and normalize medical terminology, enabling the integration and analysis of data from different sources and formats.

- Patient cohort identification: NLP methods can be used to identify patients with specific conditions or eligibility criteria from large clinical databases, facilitating clinical research and trial recruitment.

- Pharmacovigilance and drug safety: NLP systems can monitor and extract adverse drug events from clinical notes, social media, and patient forums, helping to identify potential safety issues and improve drug safety.

- Literature mining and knowledge discovery: NLP techniques can be applied to large collections of biomedical literature to extract entities, relationships, and hypotheses, facilitating the discovery of new knowledge and the generation of research insights.
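
As a rough illustration of terminology normalization and patient cohort identification, the sketch below maps a few surface forms to a shared concept label and then selects patients whose notes mention a given concept. The `CONCEPTS` table, its labels, the patient notes, and the helper names are all hypothetical. Real clinical systems map to standard vocabularies and handle negation, abbreviation ambiguity, and context, none of which is attempted here.

```python
import re

# Hypothetical mapping from surface forms to a normalized concept label.
# The labels are made up for this example, not codes from a real vocabulary.
CONCEPTS = {
    "heart attack": "myocardial_infarction",
    "myocardial infarction": "myocardial_infarction",
    "mi": "myocardial_infarction",
    "high blood pressure": "hypertension",
    "hypertension": "hypertension",
    "htn": "hypertension",
}

def extract_concepts(note):
    """Return the set of normalized concepts mentioned in a clinical note."""
    text = note.lower()
    found = set()
    for surface, concept in CONCEPTS.items():
        # Word boundaries keep short forms like "mi" from matching inside words.
        if re.search(r"\b" + re.escape(surface) + r"\b", text):
            found.add(concept)
    return found

def find_cohort(notes, concept):
    """Return sorted patient IDs whose note mentions the given concept."""
    return sorted(pid for pid, note in notes.items()
                  if concept in extract_concepts(note))

# Hypothetical, fabricated notes used only to exercise the functions.
notes = {
    "p1": "Pt with hx of HTN, presents with chest pain.",
    "p2": "Prior heart attack in 2015. No other history.",
    "p3": "Routine follow-up, no complaints.",
}
print(find_cohort(notes, "hypertension"))           # ['p1']
print(find_cohort(notes, "myocardial_infarction"))  # ['p2']
```

Note that this simple matcher would also flag a note saying "no history of hypertension", because it does not detect negation. Handling such cases is one of the main reasons dedicated clinical NLP systems exist.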

Examples of NLP applications in healthcare and biomedicine include:

- cTAKES (Clinical Text Analysis and Knowledge Extraction System): An open-source NLP system developed by the Mayo Clinic to extract information from clinical notes and support clinical research and quality improvement.

- PubMed: A search engine for biomedical literature that uses NLP techniques to process and index millions of scientific articles, enabling researchers to find relevant papers and stay up-to-date with the latest research.

- MedLEE (Medical Language Extraction and Encoding System): Developed by Columbia University, MedLEE is an NLP system that extracts structured information from clinical notes, radiology reports, and other medical documents. It has been used to support clinical decision-making, quality assessment, and research.

- MIMIC-III (Medical Information Mart for Intensive Care): A large, publicly available database comprising de-identified health data associated with over 40,000 patients who stayed in critical care units of the Beth Israel Deaconess Medical Center between 2001 and 2012. MIMIC-III covers many data types, including clinical notes, which have been used to develop and evaluate NLP systems for various healthcare applications.

- Amazon Comprehend Medical: A HIPAA-eligible NLP service that uses machine learning to extract relevant medical information from unstructured text, such as clinical notes, prescriptions, and radiology reports. It can identify medical entities, the relationships between them, and protected health information (PHI), enabling healthcare providers and researchers to gain insights from their data.

- Linguamatics Health: An NLP platform that uses a combination of rule-based and machine learning techniques to extract insights from healthcare data, including clinical notes, patient surveys, and social media. It has been used for applications such as patient safety monitoring, clinical trial matching, and population health management.

- 3M 360 Encompass: An NLP-powered platform that integrates with electronic health records (EHRs) to automatically extract and codify patient information, enabling more accurate clinical documentation, billing, and quality reporting.

As the volume of healthcare data continues to grow, NLP will play an increasingly important role in unlocking the value of this data and driving advances in personalized medicine, clinical decision support, and biomedical research.

3.5.6 Business and social media applications

Sentiment analysis has numerous applications in business and social media, including:

- Brand monitoring and reputation management: Companies can use sentiment analysis to track the sentiment towards their brand, products, or services on social media and online platforms, identifying potential issues and opportunities for improvement.

- Customer feedback analysis: Analyzing the sentiment of customer reviews, surveys, and support interactions can help businesses understand the drivers of customer satisfaction and loyalty, as well as identify areas for product or service enhancement.

- Social media marketing: Sentiment analysis can be used to measure the success of social media marketing campaigns, track the sentiment towards specific hashtags or topics, and identify influencers or brand advocates.

- Public opinion and trend analysis: Governments, organizations, and researchers can use sentiment analysis to monitor public opinion on various issues, detect emerging trends, and inform policy decisions.

- Financial market analysis: Sentiment analysis can be applied to financial news, social media, and analyst reports to gauge market sentiment and to inform forecasts of stock price movements or other financial indicators.
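
The brand monitoring use case can be sketched with a small lexicon-based scorer that averages sentiment per brand. The word lists, brand names, posts, and function names below are hypothetical and far too small for real use. A machine learning classifier would normally replace the lexicon, but the aggregation step would look much the same.

```python
from collections import defaultdict

# Tiny illustrative sentiment lexicons (assumed, not from a published resource).
POSITIVE = {"love", "great", "excellent", "fast", "helpful"}
NEGATIVE = {"hate", "terrible", "slow", "broken", "rude"}

def sentiment_score(text):
    """Count positive words minus negative words in the text."""
    tokens = [t.strip(".,!?").lower() for t in text.split()]
    return sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)

def brand_sentiment(posts):
    """Average sentiment score per brand from (brand, text) pairs."""
    scores = defaultdict(list)
    for brand, text in posts:
        scores[brand].append(sentiment_score(text))
    return {brand: sum(s) / len(s) for brand, s in scores.items()}

# Hypothetical posts about made-up brands.
posts = [
    ("AcmePhone", "I love the camera, great battery!"),
    ("AcmePhone", "Support was rude and slow."),
    ("BetaBook", "Screen arrived broken. Terrible."),
]
print(brand_sentiment(posts))  # {'AcmePhone': 0.0, 'BetaBook': -2.0}
```

An average of 0.0 for the first brand hides one strongly positive and one strongly negative post, so in practice it helps to track the distribution of scores over time rather than a single mean.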

As the volume of user-generated content continues to grow, sentiment analysis will become increasingly important for organizations to make sense of the vast amounts of unstructured text data and derive actionable insights.

3.6 Summary

This module provided a comprehensive overview of Natural Language Processing (NLP) fundamentals and their applications. Here are the key takeaways:

- Core NLP Concepts: We learned about the building blocks of NLP systems, including tokenization, part-of-speech tagging, named entity recognition, parsing, and semantic analysis. Understanding these techniques is crucial for grasping how machines process and interpret human language.

- Language Models: We explored language models, from n-grams to neural networks, that enable systems to understand and generate natural language. We saw their real-world applications as well as the limitations of traditional models.

- Sentiment Analysis: This key NLP application focuses on determining the underlying sentiment or emotion in text data. We covered rule-based and machine learning approaches, along with use cases in business intelligence and social media monitoring.

- Real-World NLP Applications: The module showcased NLP’s transformative impact across various domains, such as chatbots, machine translation, text summarization, information retrieval, and healthcare. These applications demonstrate NLP’s potential to revolutionize human-computer interaction.

- Rapid Evolution: NLP is a rapidly evolving field, with new techniques and applications emerging continuously. Staying updated on the latest research and industry trends is essential.

- Interdisciplinary Nature: NLP draws from computer science, linguistics, psychology, and other fields. Collaborating across disciplines and considering social and ethical implications are both crucial for responsible NLP development.

- Data Quality and Fairness: The effectiveness of NLP systems heavily relies on the quality, diversity, and representativeness of the training data. Ensuring fairness and addressing biases in NLP models is an ongoing challenge.

By understanding these fundamentals and real-world applications, you are well-equipped to contribute to the development of intelligent language systems that improve human-machine interactions.

In the next module, we will explore Large Language Models (LLMs), which are pushing the boundaries of NLP and enabling new possibilities in language understanding and generation.